It seems like you do not have PyTorch installed with CUDA support.

Try checking your CUDA version using

nvcc --version

or

nvidia-smi
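If you would rather check from Python whether these two tools are on your PATH at all, a minimal sketch could look like the following. The helper name cuda_tool_report is made up for this illustration; it only relies on the standard-library function shutil.which.

```python
import shutil


def cuda_tool_report(tools=("nvcc", "nvidia-smi")):
    """Map each tool name to its full path, or None if it is not on PATH.

    Hypothetical helper for illustration; it does not run the tools.
    """
    return {tool: shutil.which(tool) for tool in tools}


report = cuda_tool_report()
for tool, path in report.items():
    # A missing nvcc usually just means the CUDA Toolkit is not installed;
    # a missing nvidia-smi usually means the NVIDIA driver is not installed.
    print(f"{tool}: {path if path else 'not found on PATH'}")
```

If both come back as not found, the machine most likely has no NVIDIA driver or toolkit installed, and a CPU-only (or, on a Mac, MPS) build of PyTorch is the realistic option.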

Install PyTorch from the official distribution into your conda environment:

https://pytorch.org/get-started/locally/

Configure PyTorch for Mac M1 chips

Step 1: Install Xcode

Install the Command Line Tools:

xcode-select --install

Step 2: Setup a new conda environment

conda create -n torch-gpu python=3.8

conda activate torch-gpu

Step 3: Install PyTorch packages

conda install pytorch torchvision torchaudio -c pytorch-nightly

Step 4: Install Jupyter Notebook to validate the installation

conda install -c conda-forge jupyter jupyterlab

jupyter notebook

Create a new notebook file and execute this code:

import math
import torch

dtype = torch.float
device = torch.device("mps")

# Create random input and output data
x = torch.linspace(-math.pi, math.pi, 2000, device=device, dtype=dtype)
y = torch.sin(x)

# Randomly initialize weights
a = torch.randn((), device=device, dtype=dtype)
b = torch.randn((), device=device, dtype=dtype)
c = torch.randn((), device=device, dtype=dtype)
d = torch.randn((), device=device, dtype=dtype)

learning_rate = 1e-6

for t in range(2000):
    # Forward pass: compute predicted y
    y_pred = a + b * x + c * x ** 2 + d * x ** 3

    # Compute and print loss
    loss = (y_pred - y).pow(2).sum().item()
    if t % 100 == 99:
        print(t, loss)

    # Backprop to compute gradients of loss with respect to a, b, c, d
    grad_y_pred = 2.0 * (y_pred - y)
    grad_a = grad_y_pred.sum()
    grad_b = (grad_y_pred * x).sum()
    grad_c = (grad_y_pred * x ** 2).sum()
    grad_d = (grad_y_pred * x ** 3).sum()

    # Update weights using gradient descent
    a -= learning_rate * grad_a
    b -= learning_rate * grad_b
    c -= learning_rate * grad_c
    d -= learning_rate * grad_d

print(f'Result: y = {a.item()} + {b.item()} x + {c.item()} x^2 + {d.item()} x^3')

If you don’t see any error, everything works as expected!
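If the notebook has to run on machines without an Apple GPU as well, a small fallback avoids hard-coding "mps". This is only a sketch: pick_device is a hypothetical helper name, and it assumes a PyTorch version that ships torch.backends.mps (1.12 or later); the getattr guard is there for older builds.

```python
import torch


def pick_device():
    """Prefer MPS, then CUDA, then CPU (hypothetical helper for illustration)."""
    mps = getattr(torch.backends, "mps", None)  # absent on older PyTorch builds
    if mps is not None and mps.is_available():
        return torch.device("mps")
    if torch.cuda.is_available():
        return torch.device("cuda")
    return torch.device("cpu")


device = pick_device()
x = torch.ones(3, device=device)
print(device, x.sum().item())
```

Replacing torch.device("mps") with pick_device() should let the same cell run on any of the three backends.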

Ref:

https://towardsdatascience.com/installing-pytorch-on-apple-m1-chip-with-gpu-acceleration-3351dc44d67c

Keep in mind that we may have to run the uninstall command (pip uninstall torch) twice to confirm uninstallation.

Note: Once we see the warning "Skipping torch as it is not installed", we know that torch has been completely uninstalled.

Installing CUDA Toolkit

The next approach is to install the NVIDIA CUDA Toolkit before installing PyTorch with CUDA support.

Before installing the CUDA Toolkit, we need to make sure that our GPU is compatible with CUDA. The list of CUDA-compatible GPUs is available on the NVIDIA website.


We can also install the CUDA Toolkit using a Python package manager like Miniconda on Linux. We start off by downloading the Miniconda installer script for Linux from the official website and running the following command.

I am trying to run code from this repo. I have disabled cuda by changing lines 39/40 in main.py from

parser.add_argument('--type', default='torch.cuda.FloatTensor', help='type of tensor - e.g torch.cuda.HalfTensor')

to

parser.add_argument('--type', default='torch.FloatTensor', help='type of tensor - e.g torch.HalfTensor')

Despite this, running the code gives me the following exception:

Traceback (most recent call last):
  File "main.py", line 190, in <module>
    main()
  File "main.py", line 178, in main
    model, train_data, training=True, optimizer=optimizer)
  File "main.py", line 135, in forward
    for i, (imgs, (captions, lengths)) in enumerate(data):
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/utils/data/dataloader.py", line 201, in __next__
    return self._process_next_batch(batch)
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/utils/data/dataloader.py", line 221, in _process_next_batch
    raise batch.exc_type(batch.exc_msg)
AssertionError: Traceback (most recent call last):
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/utils/data/dataloader.py", line 62, in _pin_memory_loop
    batch = pin_memory_batch(batch)
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/utils/data/dataloader.py", line 123, in pin_memory_batch
    return [pin_memory_batch(sample) for sample in batch]
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/utils/data/dataloader.py", line 123, in <listcomp>
    return [pin_memory_batch(sample) for sample in batch]
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/utils/data/dataloader.py", line 117, in pin_memory_batch
    return batch.pin_memory()
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/tensor.py", line 82, in pin_memory
    return type(self)().set_(storage.pin_memory()).view_as(self)
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/storage.py", line 83, in pin_memory
    allocator = torch.cuda._host_allocator()
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/cuda/__init__.py", line 220, in _host_allocator
    _lazy_init()
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/cuda/__init__.py", line 84, in _lazy_init
    _check_driver()
  File "/Users/lakshay/anaconda/lib/python3.6/site-packages/torch/cuda/__init__.py", line 51, in _check_driver
    raise AssertionError("Torch not compiled with CUDA enabled")
AssertionError: Torch not compiled with CUDA enabled

I spent some time looking through the issues on the PyTorch GitHub, to no avail. Help, please?

The "AssertionError: Torch not compiled with CUDA enabled" error occurs when CUDA-specific (GPU) syntax is used with a CPU-only build of PyTorch. There are multiple scenarios in which you can get this error. Sometimes the CUDA call is explicit and visible in the code; this is easy to fix by disabling or removing it. In other scenarios CUDA is invoked indirectly and is not visible at the call site, so we need to understand the internal workings of the parameter or function that triggers it. In this article, we will go through the most common causes.

Quick Solution for the AssertionError: torch not compiled with cuda enabled

If you want a quick solution for this AssertionError then below are the solutions.

Solution 1:

from torch import nn

net = nn.Sequential(
    nn.Linear(18*18, 80),
    nn.ReLU(),
    nn.Linear(80, 80),
    nn.ReLU(),
    nn.Linear(80, 10),
    nn.LogSoftmax()
).cuda()  # remove this .cuda() call on a CPU-only build

Solution 2:

conda –

conda install pytorch==1.11.0 torchvision==0.12.0 torchaudio==0.11.0 cudatoolkit=11.3 -c pytorch

pip –

pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 torchaudio==0.11.0 --extra-index-url https://download.pytorch.org/whl/cu113

What is PyTorch?

PyTorch is an open-source machine learning framework, originally developed by Meta, used for building applications in areas such as computer vision and natural language processing. With it you can build deep learning models for tasks like image recognition and language processing. Compared with TensorFlow, one commonly cited difference is that TensorFlow ships with richer built-in visualization tooling (TensorBoard).

Solution 1: Switching from CUDA to normal version –

When building a neural network in PyTorch, we often move it to the GPU with a trailing .cuda() call. On a CPU-only build, that call raises the error, and removing it makes the error go away. Refer to the example below; if you are using a similar pattern, remove the .cuda() call.

from torch import nn

net = nn.Sequential(
    nn.Linear(18*18, 80),
    nn.ReLU(),
    nn.Linear(80, 80),
    nn.ReLU(),
    nn.Linear(80, 10),
    nn.LogSoftmax()
).cuda()

The correct way is to drop the trailing .cuda() call, which leaves the network on the CPU.
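A device-agnostic variant of the same network might look like the sketch below. It is one possible way to write it, not the only one: it chooses the device at run time with torch.cuda.is_available() and moves the model with .to(device) instead of .cuda(). The batch size of 4 and the explicit dim=1 on LogSoftmax are assumptions made for this illustration.

```python
import torch
from torch import nn

# Fall back to the CPU when this PyTorch build cannot use CUDA.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

net = nn.Sequential(
    nn.Linear(18 * 18, 80),
    nn.ReLU(),
    nn.Linear(80, 80),
    nn.ReLU(),
    nn.Linear(80, 10),
    nn.LogSoftmax(dim=1),  # dim=1: normalize over the 10 class scores
).to(device)

# Inputs must live on the same device as the model.
batch = torch.randn(4, 18 * 18, device=device)
log_probs = net(batch)
print(log_probs.shape)
```

The same script then runs unchanged on CPU-only and CUDA-enabled installations.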

Solution 2: Installing cuda supported Pytorch –

The bottom line is that when you face this kind of incompatibility, you can either adjust your code to match the libraries available on the system, or install compatible libraries to get rid of the error.

You may use any package manager to install CUDA-enabled PyTorch. Use either of the commands below –

conda –

conda install pytorch==1.11.0 torchvision==0.12.0 torchaudio==0.11.0 cudatoolkit=11.3 -c pytorch

pip –

pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 torchaudio==0.11.0 --extra-index-url https://download.pytorch.org/whl/cu113

Solution 3: set pin_memory=False –

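This is one of the cases where CUDA is not directly visible in the code. When pin_memory is True, the data loader copies each batch into pinned (page-locked) host memory, which is allocated through the CUDA runtime, so on a CPU-only build it triggers the same error. To avoid this, set it to False. Note that torch.utils.data.DataLoader itself defaults to pin_memory=False, so the problem appears when your code or a helper around the loader (such as a get_iterator function) sets it to True; in that case, pass pin_memory=False explicitly.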
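One way to keep the benefit of pinned memory on GPU machines without breaking CPU-only ones is to tie the flag to torch.cuda.is_available(). The sketch below uses a small synthetic TensorDataset purely for illustration; the dataset and batch size are assumptions, not part of the original code.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

use_cuda = torch.cuda.is_available()

# Synthetic data: 8 samples with 3 features each, plus integer labels.
dataset = TensorDataset(torch.randn(8, 3), torch.arange(8))

# Pin host memory only when a CUDA device can actually consume it.
loader = DataLoader(dataset, batch_size=4, pin_memory=use_cuda)

batches = [(features.shape, labels.shape) for features, labels in loader]
print(batches)
```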

Torch not compiled with CUDA enabled (similar errors) –

Many errors share the same solution but look slightly different because of the extra context attached to them. To avoid confusion, here are some variations:

- Platform specifications: The same generic solution applies on most platforms, such as Windows 10, macOS, and Linux.

- Additional modules: Sometimes the error is raised from intermediate modules such as detectron2, but the solution is the same in all cases.

- Hardware specifications: The underlying hardware, such as AMD processors or Jetson boards, leads to the same error and the same solution.

Benefits of CUDA with Torch –

CUDA is NVIDIA's parallel computing platform and programming interface for running general-purpose computation on the graphics card. Complex workloads such as deep learning training, where operations like backpropagation are repeated many times, benefit from massive parallelism. GPUs provide that parallelism, and CUDA is what gives access to it on NVIDIA hardware. PyTorch, TensorFlow, and other deep learning frameworks rely on GPU support for high performance. They work fine on the CPU for small datasets and a small number of epochs, but the datasets used for state-of-the-art algorithms are typically large. Hence we need CUDA with PyTorch.

Finally, let's look at what an assertion error is. An assertion is a basic prerequisite check performed before the main code runs, in order to avoid a failure later at run time. Suppose we produce an intermediate output that is consumed by another service: an assertion can stop wrong data or a wrong control flow before it reaches that service. Here is an example of another AssertionError.

AssertionError: no inf checks were recorded for this optimizer ( Fix )
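To make the idea of a prerequisite check concrete, here is a toy sketch of an assertion guarding a code path. The function check_backend and its compiled_with_cuda flag are hypothetical stand-ins written for this illustration; they are not PyTorch's actual implementation.

```python
def check_backend(compiled_with_cuda):
    """Toy prerequisite check: fail early instead of deep inside later code."""
    assert compiled_with_cuda, "Torch not compiled with CUDA enabled"
    return "ok"


try:
    check_backend(False)
except AssertionError as err:
    # The assertion message is what ends up on the last line of a traceback.
    message = str(err)
    print("caught:", message)
```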

Thanks

Data Science Learner Team


To fix the "AssertionError: Torch not compiled with CUDA enabled" error, install the CUDA toolkit on the local machine and replace the current PyTorch installation with a build that has CUDA support. Installing a CUDA-enabled version of PyTorch is the crucial part.

The error occurs when the PyTorch build installed in our Python environment was compiled without CUDA support, while the code asks for a CUDA device.

Here are the steps to fix the error.

Step 1: Checking Your PyTorch Installation

Check your PyTorch installation using the below code:

import torch

print(torch.version.cuda)

print(torch.cuda.is_available())

On a CPU-only build this prints None followed by False, which shows that CUDA is not available. In that case you may need to reinstall PyTorch with CUDA support.
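The same checks can be collected into one small report, which is convenient to paste into a bug report or forum question. This is a sketch; cuda_summary is a hypothetical helper name, and it only calls attributes that exist on both CPU-only and CUDA builds.

```python
import torch


def cuda_summary():
    """Collect the facts that matter for this error (hypothetical helper)."""
    return {
        "torch_version": torch.__version__,
        "built_with_cuda": torch.version.cuda,  # None when the build has no CUDA support
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),  # 0 when no usable GPU is found
    }


summary = cuda_summary()
for key, value in summary.items():
    print(f"{key}: {value}")
```

If built_with_cuda is None, the fix is a different PyTorch build; if it is set but cuda_available is False, the driver or GPU is the more likely culprit.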

Step 2: Uninstall your current PyTorch installation, for example with pip uninstall torch.

Step 3: Install the correct version of CUDA for your system. You can download CUDA from the NVIDIA website.

To fix the “AssertionError: Torch not compiled with CUDA enabled” error, you need to reinstall PyTorch with CUDA support.

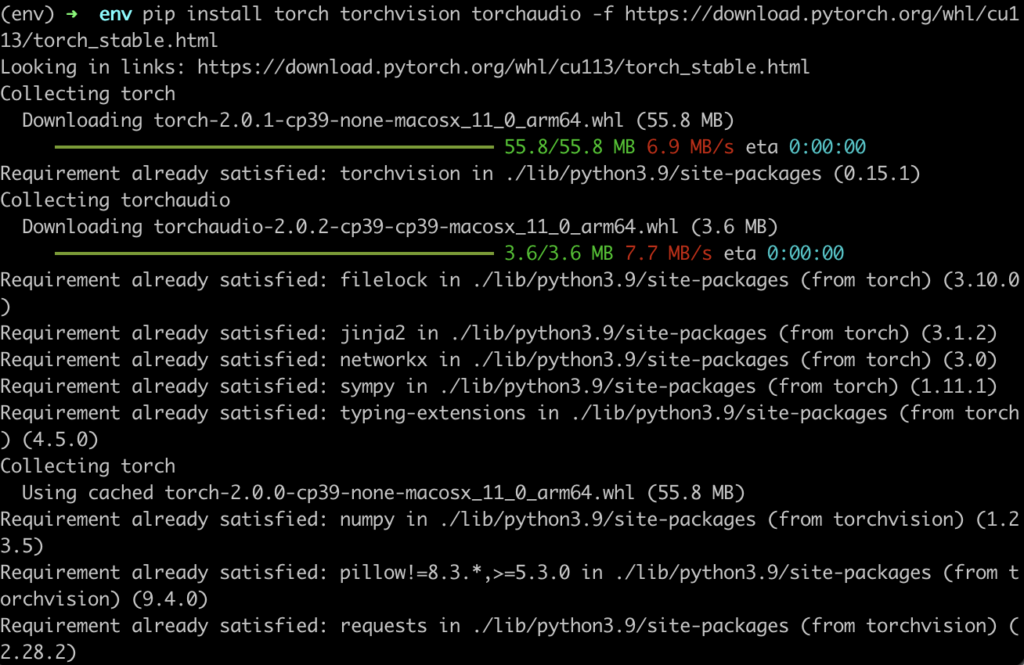

pip install torch torchvision torchaudio -f https://download.pytorch.org/whl/cu113/torch_stable.html

Now, you can run the below code and make sure that GPU is available.

import torch

print(torch.__version__)

my_tensor = torch.tensor([[1, 2, 3], [4, 5, 6]], dtype=torch.float32, device="cpu")

print(my_tensor)

print(torch.cuda.is_available())

torch.cuda.is_available() returns a boolean value indicating whether CUDA is available on the system (True if it is available, False otherwise).

On my machine it returns False, which means that this PyTorch installation cannot use CUDA.

If the issue persists, you can also install the CUDA Toolkit and its dependencies using conda:

conda install cudatoolkit

We can also verify the installation by running the command shown below.

I hope these solutions will resolve the issues you are having.
