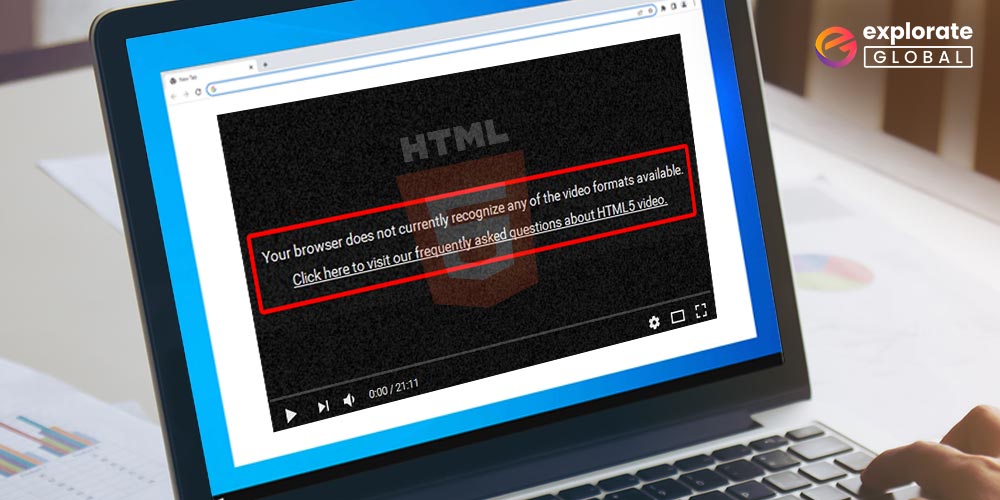

Thanks to the steady development of web technology, the HTML5 standard appeared relatively recently as a new version of the markup language used to structure and display web pages. It improved how graphics and multimedia elements are created and managed, and it simplified work with such objects. HTML5 has many advantages and offers broad possibilities for organizing web content, but it is not free of glitches. When watching video on websites, users may find playback forcibly stopped by an HTML5 error. Reloading the page, and with it the content, usually solves the problem, but not always. Such failures are especially annoying on a metered connection.

Troubleshooting HTML5 errors.

What the HTML5 error means

With the adoption of HTML5, special plugins such as Adobe Flash and QuickTime, add-ons that converted digital content into video and sound, are no longer needed. There is no need to download browser extensions of this kind or codecs to view media content. The browser can play clips by its own means, without any add-ons. This is possible because HTML5 combines HTML, CSS, and JavaScript, and media content is part of the page’s code. Media files are now embedded with standard tags, and they can be in different formats and use different codecs. From 2013 on, applications were developed for the new version of the markup language; HTML5 was gradually adopted by most popular sites and is now the main standard. The technology is considered far more advanced than its predecessor, and failures are not typical for it. Even so, users are often unable to view content online, and many already know the error “Uppod HTML5: loading error” shown by a player that supports the standard, or “HTML5: video file not found”. Such problems have various causes; the most common are:

- An outdated browser version;

- A random browser glitch;

- Server problems or maintenance work on the server;

- Interference from third-party extensions or applications.

Most websites now use video players that support the technology, but the problem remains relevant, apparently because a full transition to the new standard takes more time. For now, users have to solve it themselves.

How to fix the HTML5 error in a video player

Fixing the problem is fairly simple: remove the cause that triggers the failure. There are several ways to fix the HTML5 error:

- First, reload the page; this is the remedy that works for random glitches;

- You can also change the quality of the video (choose a different resolution in the player settings);

- Try updating the browser. The error appears when a site uses an HTML5 player and the browser version does not support the standard, and then the solution is obvious. You can check for updates in the browser’s settings. Download fresh versions only from the official site. Sometimes the browser has to be reinstalled manually to work correctly with the new technology (uninstall it completely, then install the latest version);

- Clean the browser of accumulated junk from time to time. The cache and cookies are cleared differently in different browsers, but the option is usually in the program’s settings. You can also choose the time period for which data is deleted; clearing the whole period is best.

To check whether the browser is at fault or the HTML5 error has another cause, try playing the same video in a different browser. This can also serve as a temporary workaround; if you do not want to give up your usual browser and the failure keeps recurring, updating or reinstalling it can help.
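The browser check can also be approximated in code. The sketch below is only an illustration and not part of the original article: the function name supportsHtml5Video is hypothetical, and it assumes it is given the page’s document object (or a stub with a createElement method, which is what makes it testable outside a browser). It relies on the canPlayType method that HTML5 media elements expose.

```javascript
// Minimal sketch (hypothetical helper): detect HTML5 video support.
// `doc` is assumed to be the browser's `document` or a stub with createElement().
function supportsHtml5Video(doc) {
  if (!doc || typeof doc.createElement !== 'function') {
    return false;
  }
  const element = doc.createElement('video');
  // Browsers without HTML5 video create a generic element that has no canPlayType method.
  return Boolean(element) && typeof element.canPlayType === 'function';
}
```

In a page script this would be called as supportsHtml5Video(document); a false result suggests the browser itself is too old for an HTML5 player.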

Additional ways to fix the HTML5 error

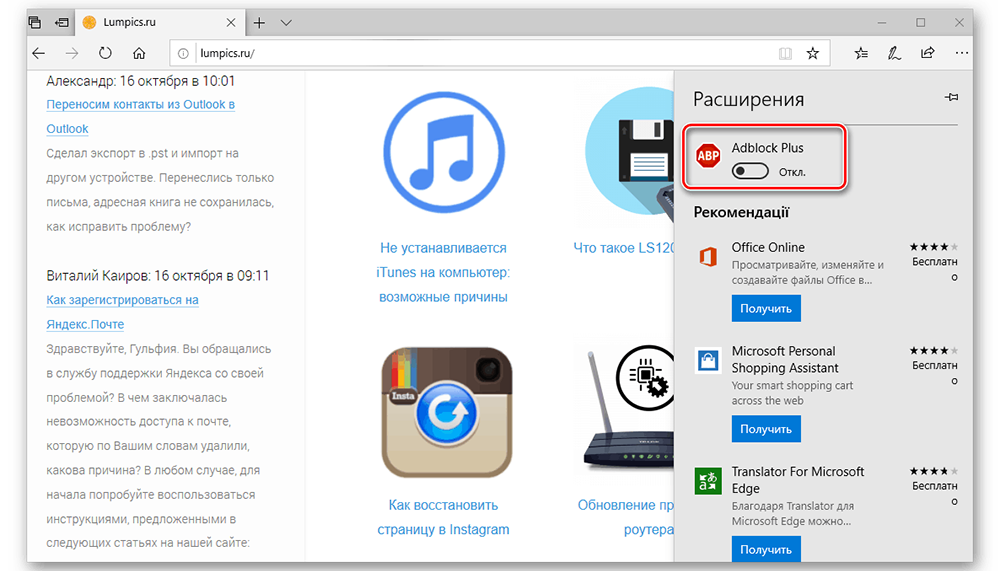

Browser extensions can also prevent video from playing correctly in a player that supports the standard. Adblock in particular often blocks media content. To deactivate third-party plugins, open the Add-ons section of the browser settings, where you will see the full list of installed extensions that may interfere with playback, and disable them. In some cases the problem is caused by an overly vigilant antivirus or firewall that actively filters network traffic. When such a program blocks traffic it considers unwanted, the content stops loading. Temporarily disabling the software that blocks the connection solves the problem.

Sometimes the problem lies with the site itself (hosting trouble, maintenance work, a DDoS attack, and so on). In that case you simply have to wait: when errors occur on the server side, there is nothing you can do except report them to the site administration. As a temporary workaround for the HTML5 error, you can switch to Adobe Flash if the site supports that option. Some sites do this automatically when the browser does not support the modern standard. The methods described are fairly effective; which one helps depends on the cause of the playback problem.


Every day search engines process thousands of requests for help with the HTML5 error that appears while watching video. That is no surprise: the technology is relatively new and is spreading fast. How to solve the problem is described below.

Player description

It is no secret that watching video clips, playing online games, and doing other useful things in a browser used to require special extensions, among them Adobe Flash Player, Microsoft Silverlight, Ace Stream, and QuickTime. More than 90% of web elements relied on these technologies.

That changed with the new HTML5 standard, which made it possible to play video clips and other media content by means of the browser itself, without third-party plugins or additional codecs. The player supports modern file formats such as OGG, WebM, and MP4, although the exact set depends on the browser.
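Because format support differs between browsers, a page usually offers the same clip in several formats and lets the browser choose. The sketch below illustrates that choice under stated assumptions: the helper names mimeTypeFor and pickPlayableSource are hypothetical, the extension table covers only the formats named above, and the canPlayType argument is assumed to follow the contract of the standard HTMLMediaElement.canPlayType method (it returns "", "maybe", or "probably").

```javascript
// Illustrative mapping from file extension to MIME type for the formats named above.
const MIME_BY_EXTENSION = {
  mp4: 'video/mp4',
  webm: 'video/webm',
  ogg: 'video/ogg',
  ogv: 'video/ogg',
};

// Hypothetical helper: derive the MIME type from a URL's file extension.
function mimeTypeFor(url) {
  const match = /\.([a-z0-9]+)(?:[?#].*)?$/i.exec(url);
  if (!match) {
    return null;
  }
  return MIME_BY_EXTENSION[match[1].toLowerCase()] || null;
}

// Hypothetical helper: pick the URL the browser is most confident it can play.
// `canPlayType` is assumed to behave like HTMLMediaElement.canPlayType.
function pickPlayableSource(urls, canPlayType) {
  const rank = { probably: 2, maybe: 1 };
  let best = null;
  let bestRank = 0;
  for (const url of urls) {
    const type = mimeTypeFor(url);
    const currentRank = type ? rank[canPlayType(type)] || 0 : 0;
    if (currentRank > bestRank) {
      best = url;
      bestRank = currentRank;
    }
  }
  return best; // null when nothing is playable
}
```

In a browser, the second argument could be (type) => document.createElement('video').canPlayType(type). A null result means none of the offered formats is playable, which is one way the error discussed here arises.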

If the new technology is so good, why do problems occur? That question and related ones are answered below.

How to fix the error?

In most cases the problem is an outdated browser version that does not support the HTML5 standard. To update your browser, go to “Settings”, then “About”, and click “Check for updates”.

If the application reports that you are using the latest version but the last update is dated more than six months ago, uninstall the browser manually and download a fresh copy from the official site.

Updating the application may not be enough, though. As alternatives, try the following:

- Open the page with the video in a different browser. The site’s player may conflict with your software and fail to play the media file.

- Reload the page, change the quality settings, or skip a little ahead in the clip. Each of these sends a new request to the video server, which may have been temporarily unresponsive.

- Try watching the clip again later. The error may be caused by internal problems on the site or by scheduled maintenance.

- Temporarily disable AdBlock and other ad-blocking extensions. They can affect video playback.

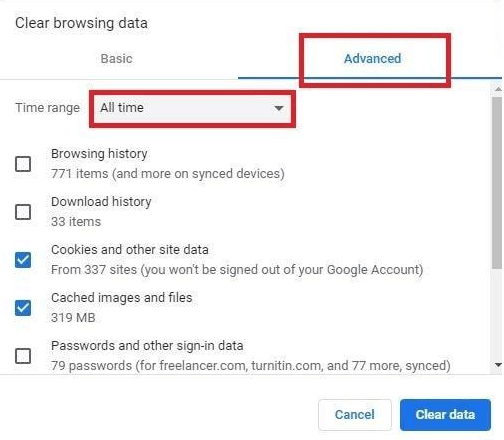

- Clear the browser’s cache and cookies. To do this, open the browser settings, go to the browsing history, and choose “Clear history”. In the window that appears, tick the cache and cookies and confirm. You can also use the CCleaner application.

That’s all. Now you know how to fix the HTML5 error when watching video. If you know other ways to solve the problem, please share them in the comments.

When watching videos online, a user may run into an HTML5 error that makes the video impossible to watch. Not knowing how to get rid of it, users often turn to search engines for help. In this article I will try to help them and explain what the error is, when it occurs, and how to fix the HTML5 error on your PC.

Contents

- Features of HTML5

- Causes of the error

- How to fix the HyperText Markup Language, version 5 error

- Conclusion

Features of HTML5

HTML5 is a modern markup language for structuring and presenting information on the World Wide Web. As its name suggests, it is the fifth version of HTML, and browsers have been using it actively since 2013.

The aim of HTML5 was to improve work with multimedia content. In particular, it makes it possible to embed video in the pages of websites and play it in browsers without third-party plugins and extensions (Flash Player, Silverlight, QuickTime).

The technology supports many video formats and offers fairly stable operation and playback quality, which is why some developers (Google, for example) have practically abandoned Adobe Flash in favor of HTML5.

Causes of the error

But nothing is perfect, and some users who try to play a video online run into the HTML5 error. It usually appears when HTML5 functionality fails, and it can have the following causes:

- Incorrect browser behavior (a random glitch);

- An outdated browser version (the browser is not updated in time);

- Problems on the website in question (a site failure, maintenance work, and so on);

- Third-party browser extensions that block normal work with HTML5 (Adblock, for example).

Now that we have seen what the HTML5 error means and what causes it, let us turn to what to do about it.

How to fix the HyperText Markup Language, version 5 error

To get rid of the HTML5 error, I recommend the following:

- First, simply reload the page (by pressing F5, for example). A playback failure can be random, and reloading the page may fix it;

- Try lowering the video resolution in the player settings; this sometimes helps;

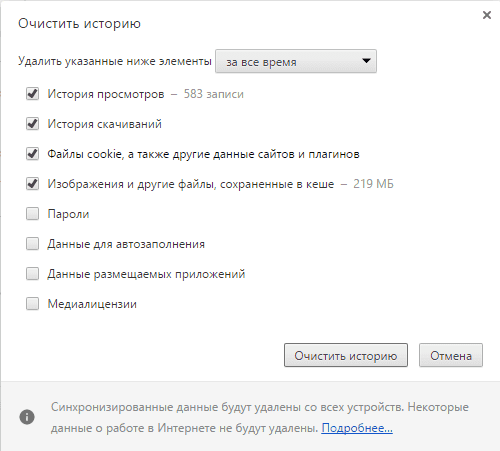

- Clear your browser’s cache and cookies. In Mozilla Firefox, for example, open the browser settings, go to the “Privacy” tab, and click “Clear your recent history” (choose “Everything” as the time range);

- Install the latest version of the browser. The built-in update function is not always effective, so I recommend downloading the latest version from the developer’s site and installing it on your PC;

- Try watching the video in a different browser. Your main browser may be working incorrectly;

- Temporarily disable third-party browser extensions (Adblock in particular). Some of them can prevent HTML5 video from playing correctly;

- Simply wait a while. The problem site may be undergoing maintenance, and it may take some time for it to work normally again.

Conclusion

The set of tips listed above will help you resolve the HTML5 error. Pay particular attention to installing the latest version of your browser and to temporarily deactivating third-party browser extensions, which should eliminate the error on your PC.


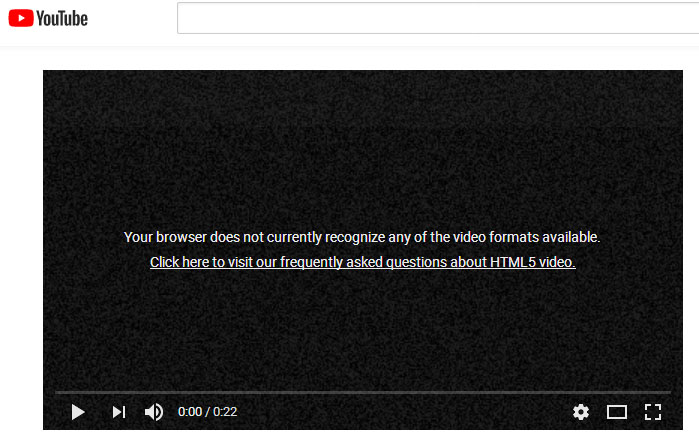

You try to play a video, it does not load, and you see the message “HTML5 video file not found”. Annoying, and now you want to know the cause. It may be an error on the website’s backend or a problem with HTML5 video support in your browser, which raises the question of which browsers support HTML5 video.

Don’t panic about the “HTML5 video file not found” problem. This article explains why browsers such as Firefox and Opera display the message “HTML5 video not properly encoded” and how to fix the error so that videos play again.

What Is An HTML5 Video File?

HTML5 video is a video element for playing videos in a web browser. The earlier way of playing video on the web, the Adobe Flash plugin, was cumbersome, so the HTML5 element has become the standard way to embed a video in a web page. All current major browsers support it, and it offers playback controls such as autoplay, pause, and volume. So what does “HTML5 video not found” actually mean?

When you play a video on a website and the warning “HTML5 video file not found” appears, the video is either in a format the browser’s HTML5 player cannot handle or requires a codec the browser lacks.

It can also indicate a problem on the website’s backend that prevents the browser from resolving the video’s path, or that you are using an outdated browser version, or that the video was published only for Flash Player. The same conditions can produce the message “HTML5 video not properly encoded” in Chrome and other browsers.

The “HTML5 video not properly encoded” error, which also appears in browsers on Android devices, has a variety of causes.

- A backend problem on the website: the site’s code contains an error, for example one that makes the video file unreachable. The developer or web admin has to fix it.

- HTML5 format support in the browser: older browser versions do not support HTML5 video, and even a current browser may lack a particular codec. Some users run into the problem simply because they have not updated their browser.

- Browser compatibility: the error may be specific to one browser, as when a video fails in Chrome with “HTML5 video file not found” but plays fine in Firefox.

- Older videos: the user requests an old video that was published for Flash Player and is not available in an HTML5-compatible format.

- Cookies and cache: stale or corrupted cookies and cached files occasionally prevent a website from playing videos. You can check this by opening the page in Incognito mode.

So the “HTML5 video not properly encoded” problem can have a number of causes.
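The causes listed here can be told apart with two pieces of information: the HTTP status the server returns for the video URL and the browser’s answer about the format. The sketch below shows one possible triage; it is an illustration only. The function name classifyVideoFailure and the returned labels are hypothetical, and the inputs are assumed to have been collected beforehand (for example from the browser’s network panel and from canPlayType).

```javascript
// Hypothetical triage helper. Inputs are assumptions gathered elsewhere:
//   httpStatus: HTTP status code returned for the video URL
//   canPlay:    result of canPlayType for the video's MIME type ("", "maybe", "probably")
function classifyVideoFailure({ httpStatus, canPlay }) {
  if (httpStatus === 404 || httpStatus === 410) {
    return 'file-not-found'; // wrong path or removed file: a website backend problem
  }
  if (httpStatus >= 500) {
    return 'server-problem'; // outage or maintenance on the site
  }
  if (httpStatus >= 400) {
    return 'access-problem'; // request rejected, e.g. blocked or unauthorized
  }
  if (canPlay === '') {
    return 'unsupported-format'; // browser lacks the format or codec
  }
  return 'unknown'; // file reachable and format playable: look at cache, cookies, extensions
}
```

An 'unknown' result is where the browser-side steps in this article (clearing the cache and cookies, disabling extensions) are most likely to matter.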

How To Solve “HTML5 Video Not Properly Encoded” Error?

The methods below resolve the “HTML5 video file not found” error in Firefox and other browsers.

1. Use The Most Recent Browser Version

Not all browser versions support HTML5 video, so check whether your browser is up to date. Browsers usually update themselves, but check manually if you are having problems with HTML5 video.

Follow the steps below to check Chrome for updates:

- Open Google Chrome and click the three dots in the top right corner.

- Select Help from the drop-down menu, then select About Google Chrome.

- Click “Update Google Chrome” if it is shown. If it is not, your browser is current.


2. Delete Cookies and Caches

Browsers keep small amounts of site-specific data in temporary storage, the cache and cookies, to load pages faster. Over time this data accumulates and can slow browsing down or cause errors when you play video files. If you encounter the “HTML5 video not found” issue, clear your cache and cookies and try the video again.

- Launch Google Chrome and click the three dots in the upper right corner.

- Select Settings from the displayed menu.

- In Settings, select “Advanced,” then “Privacy and Security,” and then “Clear Browsing Data” from the left-hand menu.

- A dialog opens. Switch to the “Advanced” tab, select what you wish to erase, choose a “Time Range” (the last day, the last week, or all time), and click “Clear Data.”

Be careful when clearing the cache and cookies so that you do not lose important data, such as saved sign-ins.

3. Switch Off Hardware Acceleration

With hardware acceleration, the browser hands graphics-intensive work, such as video and games, to the computer’s graphics processing unit (GPU). If you disable it, that work is done by the CPU instead. Video may then load more slowly, but the “HTML5 video file not found” problem may go away if it was caused by the GPU or its driver.

Follow the steps below to disable hardware acceleration:

- Launch Google Chrome, click the three dots in the upper right corner, and then click Settings.

- Select “Advanced” from the menu on the left and click “System” under the advanced settings. Turn off the “Use hardware acceleration when available” option.

4. Launch Your Browser in Safe Mode

Safe Mode is a good way to diagnose a browser issue. It does not fix HTML5 problems by itself, but it helps you find their source. If the HTML5 video plays without interruption in Safe Mode, an extension, a plugin, or a changed setting is probably to blame.

- Safe Mode disables hardware acceleration automatically.

- Safe Mode resets some settings.

- Safe Mode disables all add-ons and plugins.

5. Download the HTML5 Supporting Codecs

If your browser shows the “HTML5 video file not found” error message, either the browser is out of date or the web page does not offer the video in a codec your browser supports.

In the second case, contact the website’s developer, who can resolve the problem by providing the video in the necessary codecs.

HTML5 Video Not Properly Encoded – Fixed

HTML5 has made streaming video on websites quite simple. However, developers sometimes make mistakes when embedding videos, and the browser then cannot find or decode the video file. The “HTML5 video not found” problem can appear on any device, including Android smartphones, iPhones, and PCs, and prevent you from watching your favorite video. To avoid it, update your browser regularly and clear its cache and cookies. We hope the solutions above help you resolve the “HTML5 video not properly encoded” issue.


4.8.6 The video element

ISSUE-9 (video-accessibility) blocks progress to Last Call

- Categories:
  - Flow content.
  - Phrasing content.
  - Embedded content.
  - If the element has a controls attribute: Interactive content.
- Contexts in which this element can be used:
  - Where embedded content is expected.
- Content model:
  - If the element has a src attribute: zero or more track elements, then transparent, but with no media element descendants.
  - If the element does not have a src attribute: one or more source elements, then zero or more track elements, then transparent, but with no media element descendants.
- Content attributes:
  - Global attributes
  - src, poster, preload, autoplay, loop, audio, controls, width, height
- DOM interface:

```
interface HTMLVideoElement : HTMLMediaElement {
           attribute unsigned long width;
           attribute unsigned long height;
  readonly attribute unsigned long videoWidth;
  readonly attribute unsigned long videoHeight;
           attribute DOMString poster;
           attribute boolean audio;
};
```

A video element is used for playing videos or movies.

Content may be provided inside the video element. User agents should not show this content to the user; it is intended for older Web browsers which do not support video, so that legacy video plugins can be tried, or to show text to the users of these older browsers informing them of how to access the video contents.

In particular, this content is not intended to address accessibility concerns. To make video content accessible to the blind, deaf, and those with other physical or cognitive disabilities, authors are expected to provide alternative media streams and/or to embed accessibility aids (such as caption or subtitle tracks, audio description tracks, or sign-language overlays) into their media streams.

The video element is a media element whose media data is ostensibly video data, possibly with associated audio data.

The src, preload, autoplay, loop, and controls attributes are the attributes common to all media elements. The audio attribute controls the audio channel.

The poster attribute gives the address of an image file that the user agent can show while no video data is available. The attribute, if present, must contain a valid non-empty URL potentially surrounded by spaces. If the specified resource is to be used, then, when the element is created or when the poster attribute is set, if its value is not the empty string, its value must be resolved relative to the element, and if that is successful, the resulting absolute URL must be fetched, from the element’s Document’s origin; this must delay the load event of the element’s document. The poster frame is then the image obtained from that resource, if any.

The image given by the poster attribute, the poster frame, is intended to be a representative frame of the video (typically one of the first non-blank frames) that gives the user an idea of what the video is like.

When no video data is available (the element’s readyState attribute is either HAVE_NOTHING, or HAVE_METADATA but no video data has yet been obtained at all), the video element represents either the poster frame, or nothing.

When a video element is paused and the current playback position is the first frame of video, the element represents either the frame of video corresponding to the current playback position or the poster frame, at the discretion of the user agent.

Notwithstanding the above, the poster frame should be preferred over nothing, but the poster frame should not be shown again after a frame of video has been shown.

When a video element is paused at any other position, the element represents the frame of video corresponding to the current playback position, or, if that is not yet available (e.g. because the video is seeking or buffering), the last frame of the video to have been rendered.

When a video element is potentially playing, it represents the frame of video at the continuously increasing “current” position. When the current playback position changes such that the last frame rendered is no longer the frame corresponding to the current playback position in the video, the new frame must be rendered. Similarly, any audio associated with the video must, if played, be played synchronized with the current playback position, at the specified volume with the specified mute state.

When a video element is neither potentially playing nor paused (e.g. when seeking or stalled), the element represents the last frame of the video to have been rendered.

Which frame in a video stream corresponds to a particular playback position is defined by the video stream’s format.

The video element also represents any timed track cues whose timed track cue active flag is set and whose timed track is in the showing mode.

In addition to the above, the user agent may provide messages to the user (such as “buffering”, “no video loaded”, “error”, or more detailed information) by overlaying text or icons on the video or other areas of the element’s playback area, or in another appropriate manner.

User agents that cannot render the video may instead make the element represent a link to an external video playback utility or to the video data itself.

- video . videoWidth
- video . videoHeight
  - These attributes return the intrinsic dimensions of the video, or zero if the dimensions are not known.

The intrinsic width and intrinsic height of the media resource are the dimensions of the resource in CSS pixels after taking into account the resource’s dimensions, aspect ratio, clean aperture, resolution, and so forth, as defined for the format used by the resource. If an anamorphic format does not define how to apply the aspect ratio to the video data’s dimensions to obtain the “correct” dimensions, then the user agent must apply the ratio by increasing one dimension and leaving the other unchanged.

The videoWidth IDL attribute must return the intrinsic width of the video in CSS pixels. The videoHeight IDL attribute must return the intrinsic height of the video in CSS pixels. If the element’s readyState attribute is HAVE_NOTHING, then the attributes must return 0.

The video element supports dimension attributes.

Video content should be rendered inside the element’s playback area such that the video content is shown centered in the playback area at the largest possible size that fits completely within it, with the video content’s aspect ratio being preserved. Thus, if the aspect ratio of the playback area does not match the aspect ratio of the video, the video will be shown letterboxed or pillarboxed. Areas of the element’s playback area that do not contain the video represent nothing.
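The centering rule can be made concrete with a little arithmetic. The sketch below is an illustration under stated assumptions, not text from the specification: the function name fitVideo and the shape of the returned object are hypothetical. It scales the video by the smaller of the two width and height ratios so that it fits entirely inside the playback area, then centers it.

```javascript
// Hypothetical helper: compute where the video is drawn inside the playback area.
// All arguments are sizes in CSS pixels and are assumed to be positive.
function fitVideo(areaWidth, areaHeight, videoWidth, videoHeight) {
  // The smaller ratio is the largest scale at which the whole video still fits.
  const scale = Math.min(areaWidth / videoWidth, areaHeight / videoHeight);
  const width = videoWidth * scale;
  const height = videoHeight * scale;
  return {
    width,
    height,
    x: (areaWidth - width) / 2, // equal bars left and right (pillarbox)
    y: (areaHeight - height) / 2, // equal bars top and bottom (letterbox)
  };
}
```

A wide video in a square area gets bars above and below (y greater than 0), while a square video in a wide area gets bars at the sides (x greater than 0).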

The intrinsic width of a video element’s playback area is the intrinsic width of the video resource, if that is available; otherwise it is the intrinsic width of the poster frame, if that is available; otherwise it is 300 CSS pixels.

The intrinsic height of a video element’s playback area is the intrinsic height of the video resource, if that is available; otherwise it is the intrinsic height of the poster frame, if that is available; otherwise it is 150 CSS pixels.
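The fallback chain for the playback area’s intrinsic size can be written as a small function. This is a sketch under stated assumptions rather than specification text: the name playbackAreaIntrinsicSize is hypothetical, the video and poster arguments are assumed to be plain objects with width and height in CSS pixels (or null when nothing has loaded), and “available” is modelled as a positive number.

```javascript
// Defaults taken from the text above: 300 x 150 CSS pixels.
const DEFAULT_WIDTH = 300;
const DEFAULT_HEIGHT = 150;

// Hypothetical helper: resolve each dimension independently,
// preferring the video resource, then the poster frame, then the default.
function playbackAreaIntrinsicSize(video, poster) {
  const firstKnown = (...values) =>
    values.find((value) => typeof value === 'number' && value > 0);
  return {
    width: firstKnown(video?.width, poster?.width) ?? DEFAULT_WIDTH,
    height: firstKnown(video?.height, poster?.height) ?? DEFAULT_HEIGHT,
  };
}
```

This is why a video element with no src and no poster still occupies a 300 by 150 box unless dimension attributes or CSS say otherwise.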

User agents should provide controls to enable or disable the display of closed captions, audio description tracks, and other additional data associated with the video stream, though such features should, again, not interfere with the page’s normal rendering.

User agents may allow users to view the video content in manners more suitable to the user (e.g. full-screen or in an independent resizable window). As for the other user interface features, controls to enable this should not interfere with the page’s normal rendering unless the user agent is exposing a user interface. In such an independent context, however, user agents may make full user interfaces visible, with, e.g., play, pause, seeking, and volume controls, even if the controls attribute is absent.

User agents may allow video playback to affect system features that could interfere with the user’s experience; for example, user agents could disable screensavers while video playback is in progress.

The poster IDL attribute must reflect the poster content attribute.

The audio IDL attribute must reflect the audio content attribute.

This example shows how to detect when a video has failed to play correctly:

```html
<script>
 function failed(e) {
   // video playback failed - show a message saying why
   switch (e.target.error.code) {
     case e.target.error.MEDIA_ERR_ABORTED:
       alert('You aborted the video playback.');
       break;
     case e.target.error.MEDIA_ERR_NETWORK:
       alert('A network error caused the video download to fail part-way.');
       break;
     case e.target.error.MEDIA_ERR_DECODE:
       alert('The video playback was aborted due to a corruption problem or because the video used features your browser did not support.');
       break;
     case e.target.error.MEDIA_ERR_SRC_NOT_SUPPORTED:
       alert('The video could not be loaded, either because the server or network failed or because the format is not supported.');
       break;
     default:
       alert('An unknown error occurred.');
       break;
   }
 }
</script>
<p><video src="tgif.vid" autoplay controls onerror="failed(event)"></video></p>
<p><a href="tgif.vid">Download the video file</a>.</p>
```

4.8.7 The audio element

- Categories:
  - Flow content.
  - Phrasing content.
  - Embedded content.
  - If the element has a controls attribute: Interactive content.
- Contexts in which this element can be used:
  - Where embedded content is expected.
- Content model:
  - If the element has a src attribute: zero or more track elements, then transparent, but with no media element descendants.
  - If the element does not have a src attribute: one or more source elements, then zero or more track elements, then transparent, but with no media element descendants.
- Content attributes:
  - Global attributes
  - src, preload, autoplay, loop, controls
- DOM interface:

```
[NamedConstructor=Audio(),
 NamedConstructor=Audio(in DOMString src)]
interface HTMLAudioElement : HTMLMediaElement {};
```

An audio element represents a sound or audio stream.

Content may be provided inside the audio element. User agents should not show this content to the user; it is intended for older Web browsers which do not support audio, so that legacy audio plugins can be tried, or to show text to the users of these older browsers informing them of how to access the audio contents.

In particular, this content is not intended to address accessibility concerns. To make audio content accessible to the deaf or to those with other physical or cognitive disabilities, authors are expected to provide alternative media streams and/or to embed accessibility aids (such as transcriptions) into their media streams.

The audio element is a media element whose media data is ostensibly audio data.

The src, preload, autoplay, loop, and controls attributes are the attributes common to all media elements.

When an audio element is potentially playing, it must have its audio data played synchronized with the current playback position, at the specified volume with the specified mute state.

When an audio element is not potentially playing, audio must not play for the element.

- audio = new Audio( [ url ] ): Returns a new audio element, with the src attribute set to the value passed in the argument, if applicable.

Two constructors are provided for creating

HTMLAudioElement objects (in addition to the factory

methods from DOM Core such as createElement()): Audio() and Audio(src). When invoked as constructors,

these must return a new HTMLAudioElement object (a new

audio element). The element must have its preload attribute set to the

literal value «auto». If the src argument is present, the object created must have

its src content attribute set to

the provided value, and the user agent must invoke the object’s

resource selection

algorithm before returning. The element’s document must be

the active document of the browsing

context of the Window object on which the

interface object of the invoked constructor is found.

4.8.8 The source element

- Categories:
  - None.
- Contexts in which this element can be used:
  - As a child of a media element, before any flow content or track elements.
- Content model:
  - Empty.
- Content attributes:
  - Global attributes
  - src
  - type
  - media
- DOM interface:

interface HTMLSourceElement : HTMLElement { attribute DOMString src; attribute DOMString type; attribute DOMString media; };

The source element allows authors to specify

multiple alternative media

resources for media

elements. It does not represent anything on its own.

The src attribute

gives the address of the media resource. The value must

be a valid non-empty URL potentially surrounded by

spaces. This attribute must be present.

Dynamically modifying a source element

and its attribute when the element is already inserted in a

video or audio element will have no

effect. To change what is playing, either just use the src attribute on the media

element directly, or call the load() method on the media

element after manipulating the source

elements.
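
The two ways of changing what is playing can be sketched as follows. This is a minimal illustration rather than a definitive implementation: the helper names `playDirectly` and `replaceSourceAndReload` are hypothetical, and the `media` and `sourceElement` arguments are assumed to be objects shaped like a media element and a source element.

```javascript
// Option 1: set src on the media element itself.
// Per the text above, changing the media element's own src is enough;
// no explicit load() call is made here.
function playDirectly(media, url) {
  media.src = url;
}

// Option 2: manipulate an already-inserted source element, then call load().
// Without the load() call the change to the source element has no effect.
function replaceSourceAndReload(media, sourceElement, url, type) {
  sourceElement.src = url;
  sourceElement.type = type;
  media.load(); // restarts resource selection from scratch
}
```

In a page, `media` would typically be a video or audio element obtained from the document, and `sourceElement` one of its source children.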

The type

attribute gives the type of the media resource, to help

the user agent determine if it can play this media

resource before fetching it. If specified, its value must be

a valid MIME type. The codecs

parameter, which certain MIME types define, might be necessary to

specify exactly how the resource is encoded. [RFC4281]

The following list shows some examples of how to use the codecs= MIME parameter in the type attribute.

- H.264 Constrained baseline profile video (main and extended video compatible) level 3 and Low-Complexity AAC audio in MP4 container
  <source src='video.mp4' type='video/mp4; codecs="avc1.42E01E, mp4a.40.2"'>
- H.264 Extended profile video (baseline-compatible) level 3 and Low-Complexity AAC audio in MP4 container
  <source src='video.mp4' type='video/mp4; codecs="avc1.58A01E, mp4a.40.2"'>
- H.264 Main profile video level 3 and Low-Complexity AAC audio in MP4 container
  <source src='video.mp4' type='video/mp4; codecs="avc1.4D401E, mp4a.40.2"'>
- H.264 ‘High’ profile video (incompatible with main, baseline, or extended profiles) level 3 and Low-Complexity AAC audio in MP4 container
  <source src='video.mp4' type='video/mp4; codecs="avc1.64001E, mp4a.40.2"'>
- MPEG-4 Visual Simple Profile Level 0 video and Low-Complexity AAC audio in MP4 container
  <source src='video.mp4' type='video/mp4; codecs="mp4v.20.8, mp4a.40.2"'>
- MPEG-4 Advanced Simple Profile Level 0 video and Low-Complexity AAC audio in MP4 container
  <source src='video.mp4' type='video/mp4; codecs="mp4v.20.240, mp4a.40.2"'>
- MPEG-4 Visual Simple Profile Level 0 video and AMR audio in 3GPP container
  <source src='video.3gp' type='video/3gpp; codecs="mp4v.20.8, samr"'>
- Theora video and Vorbis audio in Ogg container
  <source src='video.ogv' type='video/ogg; codecs="theora, vorbis"'>
- Theora video and Speex audio in Ogg container
  <source src='video.ogv' type='video/ogg; codecs="theora, speex"'>
- Vorbis audio alone in Ogg container
  <source src='audio.ogg' type='audio/ogg; codecs=vorbis'>
- Speex audio alone in Ogg container
  <source src='audio.spx' type='audio/ogg; codecs=speex'>
- FLAC audio alone in Ogg container
  <source src='audio.oga' type='audio/ogg; codecs=flac'>
- Dirac video and Vorbis audio in Ogg container
  <source src='video.ogv' type='video/ogg; codecs="dirac, vorbis"'>
- Theora video and Vorbis audio in Matroska container
  <source src='video.mkv' type='video/x-matroska; codecs="theora, vorbis"'>
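
When source elements are generated by script, the type strings in the list above can be assembled from a container MIME type and a list of codec identifiers. The sketch below is only an illustration: `buildTypeAttribute` and `sourceTag` are hypothetical helper names, and the code performs no escaping or validation of its inputs.

```javascript
// Builds a value for the type attribute, e.g.
//   video/mp4; codecs="avc1.42E01E, mp4a.40.2"
// The codec list is always quoted here, which is also valid for a single codec.
function buildTypeAttribute(mimeType, codecs = []) {
  if (codecs.length === 0) return mimeType;
  return `${mimeType}; codecs="${codecs.join(", ")}"`;
}

// Renders a source tag in the same quoting style as the examples above:
// single quotes around attribute values, double quotes around the codec list.
// Assumption: src and type contain no single quotes (nothing is escaped).
function sourceTag(src, mimeType, codecs = []) {
  return `<source src='${src}' type='${buildTypeAttribute(mimeType, codecs)}'>`;
}
```

Quoting the codec list even for a single codec is a simplification chosen for this sketch; the list above writes single-codec values unquoted.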

The media

attribute gives the intended media type of the media

resource, to help the user agent determine if this

media resource is useful to the user before fetching

it. Its value must be a valid media query.

The default, if the media attribute is omitted, is

«all», meaning that by default the media

resource is suitable for all media.

If a source element is inserted as a child of a

media element that has no src attribute and whose networkState has the value

NETWORK_EMPTY, the user

agent must invoke the media element’s resource selection

algorithm.

The IDL attributes src, type, and media must

reflect the respective content attributes of the same

name.

If the author isn’t sure if the user agents will all be able to

render the media resources provided, the author can listen to the

error event on the last

source element and trigger fallback behavior:

<script>
  function fallback(video) {
    // replace <video> with its contents
    while (video.hasChildNodes()) {
      if (video.firstChild instanceof HTMLSourceElement)
        video.removeChild(video.firstChild);
      else
        video.parentNode.insertBefore(video.firstChild, video);
    }
    video.parentNode.removeChild(video);
  }
</script>
<video controls autoplay>
  <source src='video.mp4' type='video/mp4; codecs="avc1.42E01E, mp4a.40.2"'>
  <source src='video.ogv' type='video/ogg; codecs="theora, vorbis"'
          onerror="fallback(parentNode)">
  ...
</video>

4.8.9 The track element

ISSUE-9 (video-accessibility) blocks progress to Last Call

- Categories:
  - None.
- Contexts in which this element can be used:
  - As a child of a media element, before any flow content.
- Content model:
  - Empty.
- Content attributes:
  - Global attributes
  - kind
  - src
  - charset
  - srclang
  - label
- DOM interface:

interface HTMLTrackElement : HTMLElement { attribute DOMString kind; attribute DOMString src; attribute DOMString charset; attribute DOMString srclang; attribute DOMString label; readonly attribute TimedTrack track; };

The track element allows authors to specify explicit

external timed tracks for media elements. It does not represent anything on its own.

The kind

attribute is an enumerated attribute. The following

table lists the keywords defined for this attribute. The keyword

given in the first cell of each row maps to the state given in the

second cell.

| Keyword | State | Brief description |
|---|---|---|
| subtitles | Subtitles | Transcription or translation of the dialogue, suitable for when the sound is available but not understood (e.g. because the user does not understand the language of the media resource’s soundtrack). |
| captions | Captions | Transcription or translation of the dialogue, sound effects, relevant musical cues, and other relevant audio information, suitable for when the soundtrack is unavailable (e.g. because it is muted or because the user is deaf). |
| descriptions | Descriptions | Textual descriptions of the video component of the media resource, intended for audio synthesis when the visual component is unavailable (e.g. because the user is interacting with the application without a screen while driving, or because the user is blind). |
| chapters | Chapters | Chapter titles, intended to be used for navigating the media resource. |
| metadata | Metadata | Tracks intended for use from script. |

The attribute may be omitted. The missing value default is

the subtitles state.

The src attribute

gives the address of the timed track data. The value must be a

valid non-empty URL potentially surrounded by

spaces. This attribute must be present.

If the element has a src

attribute whose value is not the empty string and whose value, when

the attribute was set, could be successfully resolved relative to the element, then the element’s

track URL is the resulting absolute

URL. Otherwise, the element’s track URL is the

empty string.

If the element’s track URL identifies a

WebSRT resource, and the element’s kind attribute is not in the metadata state, then the

WebSRT file must be a WebSRT file using cue

text.

If the element’s track URL identifies a

WebSRT resource, then the charset attribute may

be specified. If the attribute is set, its value must be a valid

character encoding name, must be an ASCII

case-insensitive match for the preferred MIME

name for that encoding, and must match the character encoding

of the WebSRT file. [IANACHARSET]

The srclang

attribute gives the language of the timed track data. The value must

be a valid BCP 47 language tag. This attribute must be present if

the element’s kind attribute is

in the subtitles

state. [BCP47]

If the element has a srclang attribute whose value is

not the empty string, then the element’s track language

is the value of the attribute. Otherwise, the element has no

track language.

The label

attribute gives a user-readable title for the track. This title is

used by user agents when listing subtitle, caption, and audio description tracks

in their user interface.

The value of the label

attribute, if the attribute is present, must not be the empty

string. Furthermore, there must not be two track

element children of the same media element whose kind attributes are in the same

state, whose srclang

attributes are both missing or have values that represent the same

language, and whose label

attributes are again both missing or both have the same value.

If the element has a label

attribute whose value is not the empty string, then the element’s

track label is the value of the attribute. Otherwise, the

element’s track label is a user-agent defined string

(e.g. the string «untitled» in the user’s locale, or a value

automatically generated from the other attributes).
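
The uniqueness rule for track children (same kind state, same language, same label) can be checked mechanically. The following is a rough sketch under stated assumptions: `findConflictingTracks` is a hypothetical helper, each track is a plain object with optional `kind`, `srclang` and `label` properties, and "values that represent the same language" is approximated by a case-insensitive string comparison, which is weaker than real BCP 47 matching.

```javascript
// Returns pairs of tracks that violate the uniqueness rule described above.
function findConflictingTracks(tracks) {
  const seen = new Map();
  const conflicts = [];
  for (const track of tracks) {
    // The missing value default for kind is the subtitles state.
    const kind = track.kind ?? "subtitles";
    // Assumption: language tags are compared case-insensitively only.
    const lang = track.srclang == null ? null : track.srclang.toLowerCase();
    const label = track.label ?? null;
    const key = JSON.stringify([kind, lang, label]);
    if (seen.has(key)) {
      conflicts.push([seen.get(key), track]);
    } else {
      seen.set(key, track);
    }
  }
  return conflicts;
}
```

An empty result means no two tracks collide under this approximation; it does not validate the individual attribute values.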

- track . track: Returns the TimedTrack object corresponding to the timed track of the track element.

The track IDL

attribute must, on getting, return the track element’s

timed track’s corresponding TimedTrack

object.

The src, charset, srclang, and label IDL attributes must

reflect the respective content attributes of the same

name. The kind IDL

attribute must reflect the content attribute of the same

name, limited to only known values.

This video has subtitles in several languages:

<video src="brave.webm">
  <track kind=subtitles src=brave.en.srt srclang=en label="English">
  <track kind=captions src=brave.en.srt srclang=en label="English for the Hard of Hearing">
  <track kind=subtitles src=brave.fr.srt srclang=fr label="Français">
  <track kind=subtitles src=brave.de.srt srclang=de label="Deutsch">
</video>

4.8.10 Media elements

Media elements

(audio and video, in this specification)

implement the following interface:

interface HTMLMediaElement : HTMLElement {

// error state

readonly attribute MediaError error;

// network state

attribute DOMString src;

readonly attribute DOMString currentSrc;

const unsigned short NETWORK_EMPTY = 0;

const unsigned short NETWORK_IDLE = 1;

const unsigned short NETWORK_LOADING = 2;

const unsigned short NETWORK_NO_SOURCE = 3;

readonly attribute unsigned short networkState;

attribute DOMString preload;

readonly attribute TimeRanges buffered;

void load();

DOMString canPlayType(in DOMString type);

// ready state

const unsigned short HAVE_NOTHING = 0;

const unsigned short HAVE_METADATA = 1;

const unsigned short HAVE_CURRENT_DATA = 2;

const unsigned short HAVE_FUTURE_DATA = 3;

const unsigned short HAVE_ENOUGH_DATA = 4;

readonly attribute unsigned short readyState;

readonly attribute boolean seeking;

// playback state

attribute double currentTime;

readonly attribute double initialTime;

readonly attribute double duration;

readonly attribute Date startOffsetTime;

readonly attribute boolean paused;

attribute double defaultPlaybackRate;

attribute double playbackRate;

readonly attribute TimeRanges played;

readonly attribute TimeRanges seekable;

readonly attribute boolean ended;

attribute boolean autoplay;

attribute boolean loop;

void play();

void pause();

// controls

attribute boolean controls;

attribute double volume;

attribute boolean muted;

// timed tracks

readonly attribute TimedTrack[] tracks;

MutableTimedTrack addTrack(in DOMString kind, in optional DOMString label, in optional DOMString language);

};

The media element attributes, src, preload, autoplay, loop, and controls, apply to all media elements. They are defined in

this section.

Media elements are used to

present audio data, or video and audio data, to the user. This is

referred to as media data in this section, since this

section applies equally to media

elements for audio or for video. The term media

resource is used to refer to the complete set of media data,

e.g. the complete video file, or complete audio file.

Except where otherwise specified, the task source

for all the tasks queued in this

section and its subsections is the media element event task

source.

4.8.10.1 Error codes

- media . error: Returns a MediaError object representing the current error state of the element. Returns null if there is no error.

All media elements have an

associated error status, which records the last error the element

encountered since its resource selection

algorithm was last invoked. The error attribute, on

getting, must return the MediaError object created for

this last error, or null if there has not been an error.

interface MediaError {

const unsigned short MEDIA_ERR_ABORTED = 1;

const unsigned short MEDIA_ERR_NETWORK = 2;

const unsigned short MEDIA_ERR_DECODE = 3;

const unsigned short MEDIA_ERR_SRC_NOT_SUPPORTED = 4;

readonly attribute unsigned short code;

};

- media . error . code: Returns the current error’s error code, from the list below.

The code

attribute of a MediaError object must return the code

for the error, which must be one of the following:

- MEDIA_ERR_ABORTED (numeric value 1): The fetching process for the media resource was aborted by the user agent at the user’s request.
- MEDIA_ERR_NETWORK (numeric value 2): A network error of some description caused the user agent to stop fetching the media resource, after the resource was established to be usable.
- MEDIA_ERR_DECODE (numeric value 3): An error of some description occurred while decoding the media resource, after the resource was established to be usable.
- MEDIA_ERR_SRC_NOT_SUPPORTED (numeric value 4): The media resource indicated by the src attribute was not suitable.
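
A page script can turn these codes into readable diagnostics. The sketch below is illustrative only: `describeMediaError` is a hypothetical helper, and the message wording is a paraphrase of the list above, not normative text.

```javascript
// Maps MediaError codes (1..4) to their constant names and a short description.
const MEDIA_ERROR_INFO = {
  1: ["MEDIA_ERR_ABORTED", "fetching was aborted at the user's request"],
  2: ["MEDIA_ERR_NETWORK", "a network error stopped the download"],
  3: ["MEDIA_ERR_DECODE", "the resource could not be decoded"],
  4: ["MEDIA_ERR_SRC_NOT_SUPPORTED", "the resource given by src was not suitable"],
};

// `error` is the value of media.error: null, or an object with a numeric code.
function describeMediaError(error) {
  if (error == null) return "no error";
  const info = MEDIA_ERROR_INFO[error.code];
  if (!info) return `unknown error code ${error.code}`;
  return `${info[0]} (${error.code}): ${info[1]}`;
}
```

In a page this might be called from an error event handler, for example as `describeMediaError(video.error)`.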

4.8.10.2 Location of the media resource

The src content

attribute on media elements gives

the address of the media resource (video, audio) to show. The

attribute, if present, must contain a valid non-empty

URL potentially surrounded by spaces.

If a src attribute of a

media element is set or changed, the user agent must

invoke the media element’s media element load

algorithm. (Removing the src attribute does not do this, even

if there are source elements present.)

The src IDL

attribute on media elements must

reflect the content attribute of the same name.

- media .

currentSrc -

Returns the address of the current media resource.

Returns the empty string when there is no media resource.

The currentSrc IDL

attribute is initially the empty string. Its value is changed by the

resource selection

algorithm defined below.

There are two ways to specify a media

resource, the src

attribute, or source elements. The attribute overrides

the elements.

4.8.10.3 MIME types

A media resource can be described in terms of its

type, specifically a MIME type, in some cases

with a codecs parameter. (Whether the codecs parameter is allowed or not depends on the

MIME type.) [RFC4281]

Types are usually somewhat incomplete descriptions; for example «video/mpeg» doesn’t say anything except what the container type is, and even a type like «video/mp4; codecs="avc1.42E01E, mp4a.40.2"» doesn’t include information like the actual bitrate (only the maximum bitrate). Thus, given a type, a user agent can often only know whether it might be able to play media of that type (with varying levels of confidence), or whether it definitely cannot play media of that type.

A type that the user agent knows it cannot render is

one that describes a resource that the user agent definitely does

not support, for example because it doesn’t recognize the container

type, or it doesn’t support the listed codecs.

The MIME type

«application/octet-stream» with no parameters is never

a type that the user agent knows it cannot render. User

agents must treat that type as equivalent to the lack of any

explicit Content-Type metadata

when it is used to label a potential media

resource.

In the absence of a

specification to the contrary, the MIME type

«application/octet-stream» when used with

parameters, e.g.

«application/octet-stream;codecs=theora», is

a type that the user agent knows it cannot render,

since that parameter is not defined for that type.

- media . canPlayType(type): Returns the empty string (a negative response), «maybe», or «probably» based on how confident the user agent is that it can play media resources of the given type.

The canPlayType(type) method must return the empty string if type is a type that the user agent knows it cannot render or is the type «application/octet-stream»; it must return «probably» if the user agent is confident that the type represents a media resource that it can render if used with this audio or video element; and it must return «maybe» otherwise.

Implementors are encouraged to return «maybe»

unless the type can be confidently established as being supported or

not. Generally, a user agent should never return «probably» for a type that allows the codecs parameter if that parameter is not

present.
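
Because canPlayType() returns one of three answers, a script choosing between several encodings can rank them. The sketch below shows one possible policy and is not part of the specification: `pickBestType` is a hypothetical helper, and the `canPlayType` argument is assumed to be any function with the same contract as the method described above.

```javascript
// Returns the first type answered with "probably"; failing that, the first
// type answered with "maybe"; otherwise null.
function pickBestType(canPlayType, types) {
  let firstMaybe = null;
  for (const type of types) {
    const answer = canPlayType(type);
    if (answer === "probably") return type;
    if (answer === "maybe" && firstMaybe === null) firstMaybe = type;
  }
  return firstMaybe;
}
```

In a page the first argument could wrap a real element, for example `(type) => videoElement.canPlayType(type)`.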

This script tests to see if the user agent supports a

(fictional) new format to dynamically decide whether to use a

video element or a plugin:

<section id="video">
  <p><a href="playing-cats.nfv">Download video</a></p>
</section>
<script>
  var videoSection = document.getElementById('video');
  var videoElement = document.createElement('video');
  var support = videoElement.canPlayType('video/x-new-fictional-format;codecs="kittens,bunnies"');
  if (support != "probably" && "New Fictional Video Plug-in" in navigator.plugins) {
    // not confident of browser support
    // but we have a plugin
    // so use plugin instead
    videoElement = document.createElement("embed");
  } else if (support == "") {
    // no support from browser and no plugin
    // do nothing
    videoElement = null;
  }
  if (videoElement) {
    while (videoSection.hasChildNodes())
      videoSection.removeChild(videoSection.firstChild);
    videoElement.setAttribute("src", "playing-cats.nfv");
    videoSection.appendChild(videoElement);
  }
</script>

The type

attribute of the source element allows the user agent

to avoid downloading resources that use formats it cannot

render.

4.8.10.4 Network states

- media . networkState: Returns the current state of network activity for the element, from the codes in the list below.

As media elements interact

with the network, their current network activity is represented by

the networkState

attribute. On getting, it must return the current network state of

the element, which must be one of the following values:

- NETWORK_EMPTY (numeric value 0): The element has not yet been initialized. All attributes are in their initial states.
- NETWORK_IDLE (numeric value 1): The element’s resource selection algorithm is active and has selected a resource, but it is not actually using the network at this time.
- NETWORK_LOADING (numeric value 2): The user agent is actively trying to download data.
- NETWORK_NO_SOURCE (numeric value 3): The element’s resource selection algorithm is active, but it has not yet found a resource to use.

The resource selection

algorithm defined below describes exactly when the networkState attribute changes

value and what events fire to indicate changes in this state.
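
For logging or debugging, the numeric networkState value can be translated back to its constant name. This is a small sketch; `networkStateName` is a hypothetical helper and not something the interface provides.

```javascript
// Index in this array equals the numeric value of the constant.
const NETWORK_STATE_NAMES = [
  "NETWORK_EMPTY",     // 0
  "NETWORK_IDLE",      // 1
  "NETWORK_LOADING",   // 2
  "NETWORK_NO_SOURCE", // 3
];

function networkStateName(state) {
  return NETWORK_STATE_NAMES[state] ?? `unknown (${state})`;
}
```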

4.8.10.5 Loading the media resource

- media . load(): Causes the element to reset and start selecting and loading a new media resource from scratch.

All media elements have an

autoplaying flag, which must begin in the true state, and

a delaying-the-load-event flag, which must begin in the

false state. While the delaying-the-load-event flag is

true, the element must delay the load event of its

document.

When the load()

method on a media element is invoked, the user agent

must run the media element load algorithm.

The media element load algorithm consists of the

following steps.

- Abort any already-running instance of the resource selection algorithm for this element.
- If there are any tasks from the media element’s media element event task source in one of the task queues, then remove those tasks. Basically, pending events and callbacks for the media element are discarded when the media element starts loading a new resource.
- If the media element’s networkState is set to NETWORK_LOADING or NETWORK_IDLE, queue a task to fire a simple event named abort at the media element.
- If the media element’s networkState is not set to NETWORK_EMPTY, then run these substeps:
  - If a fetching process is in progress for the media element, the user agent should stop it.
  - Set the networkState attribute to NETWORK_EMPTY.
  - Forget the media element’s media-resource-specific timed tracks.
  - If readyState is not set to HAVE_NOTHING, then set it to that state.
  - If the paused attribute is false, then set to true.
  - If seeking is true, set it to false.
  - Set the current playback position to 0. If this changed the current playback position, then queue a task to fire a simple event named timeupdate at the media element.
  - Set the initial playback position to 0.
  - Set the timeline offset to Not-a-Number (NaN).
  - Update the duration attribute to Not-a-Number (NaN). The user agent will not fire a durationchange event for this particular change of the duration.
  - Queue a task to fire a simple event named emptied at the media element.
- Set the playbackRate attribute to the value of the defaultPlaybackRate attribute.
- Set the error attribute to null and the autoplaying flag to true.
- Invoke the media element’s resource selection algorithm.
- Playback of any previously playing media resource for this element stops.
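
The state-resetting part of the media element load algorithm can be modelled on a plain object. The sketch below is a simplified illustration and not how a user agent is implemented: `resetForLoad` is a hypothetical function, `media` is an ordinary object rather than a real element, queued tasks are represented by a returned list of event names, and the steps dealing with fetching, timed tracks, and resource selection are left out.

```javascript
const NETWORK_EMPTY = 0, NETWORK_IDLE = 1, NETWORK_LOADING = 2;
const HAVE_NOTHING = 0;

// Applies the reset steps to `media` and returns the names of the events
// that would be queued, in order.
function resetForLoad(media) {
  const queuedEvents = [];
  if (media.networkState === NETWORK_LOADING || media.networkState === NETWORK_IDLE) {
    queuedEvents.push("abort");
  }
  if (media.networkState !== NETWORK_EMPTY) {
    media.networkState = NETWORK_EMPTY;
    media.readyState = HAVE_NOTHING;
    media.paused = true;
    media.seeking = false;
    if (media.currentTime !== 0) {
      media.currentTime = 0;
      queuedEvents.push("timeupdate"); // only when the position actually changed
    }
    media.duration = NaN; // no durationchange event for this change
    queuedEvents.push("emptied");
  }
  media.playbackRate = media.defaultPlaybackRate;
  media.error = null;
  media.autoplaying = true;
  return queuedEvents;
}
```

An element that is still in the NETWORK_EMPTY state skips the whole substep block, so a first load queues none of these events.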

The resource selection

algorithm for a media element is as follows. This

algorithm is always invoked synchronously, but one of the first

steps in the algorithm is to return and continue running the

remaining steps asynchronously, meaning that it runs in the

background with scripts and other tasks running in parallel. In addition,

this algorithm interacts closely with the event loop

mechanism; in particular, it has synchronous sections (which are triggered as part of

the event loop algorithm). Steps in such sections are

marked with ⌛.

- Set the networkState to NETWORK_NO_SOURCE.
- Asynchronously await a stable state, allowing the task that invoked this algorithm to continue. The synchronous section consists of all the remaining steps of this algorithm until the algorithm says the synchronous section has ended. (Steps in synchronous sections are marked with ⌛.)
- ⌛ If the media element has a src attribute, then let mode be attribute.

  ⌛ Otherwise, if the media element does not have a src attribute but has a source element child, then let mode be children and let candidate be the first such source element child in tree order.

  ⌛ Otherwise the media element has neither a src attribute nor a source element child: set the networkState to NETWORK_EMPTY, and abort these steps; the synchronous section ends.
- ⌛ Set the media element’s delaying-the-load-event flag to true (this delays the load event), and set its networkState to NETWORK_LOADING.
- ⌛ Queue a task to fire a simple event named loadstart at the media element.
- If mode is attribute, then run these substeps:
  - ⌛ Process candidate: If the src attribute’s value is the empty string, then end the synchronous section, and jump down to the failed step below.
  - ⌛ Let absolute URL be the absolute URL that would have resulted from resolving the URL specified by the src attribute’s value relative to the media element when the src attribute was last changed.
  - ⌛ If absolute URL was obtained successfully, set the currentSrc attribute to absolute URL.
  - End the synchronous section, continuing the remaining steps asynchronously.
  - If absolute URL was obtained successfully, run the resource fetch algorithm with absolute URL. If that algorithm returns without aborting this one, then the load failed.
  - Failed: Reaching this step indicates that the media resource failed to load or that the given URL could not be resolved. In one atomic operation, run the following steps:
    - Set the error attribute to a new MediaError object whose code attribute is set to MEDIA_ERR_SRC_NOT_SUPPORTED.
    - Forget the media element’s media-resource-specific timed tracks.
    - Set the element’s networkState attribute to the NETWORK_NO_SOURCE value.
  - Queue a task to fire a simple event named error at the media element.
  - Set the element’s delaying-the-load-event flag to false. This stops delaying the load event.
  - Abort these steps. Until the load() method is invoked or the src attribute is changed, the element won’t attempt to load another resource.

  Otherwise, the source elements will be used; run these substeps:
  - ⌛ Let pointer be a position defined by two adjacent nodes in the media element’s child list, treating the start of the list (before the first child in the list, if any) and end of the list (after the last child in the list, if any) as nodes in their own right. One node is the node before pointer, and the other node is the node after pointer. Initially, let pointer be the position between the candidate node and the next node, if there are any, or the end of the list, if it is the last node.

    As nodes are inserted and removed into the media element, pointer must be updated as follows:
    - If a new node is inserted between the two nodes that define pointer: Let pointer be the point between the node before pointer and the new node. In other words, insertions at pointer go after pointer.
    - If the node before pointer is removed: Let pointer be the point between the node after pointer and the node before the node after pointer. In other words, pointer doesn’t move relative to the remaining nodes.
    - If the node after pointer is removed: Let pointer be the point between the node before pointer and the node after the node before pointer. Just as with the previous case, pointer doesn’t move relative to the remaining nodes.

    Other changes don’t affect pointer.
  - ⌛ Process candidate: If candidate does not have a src attribute, or if its src attribute’s value is the empty string, then end the synchronous section, and jump down to the failed step below.
  - ⌛ Let absolute URL be the absolute URL that would have resulted from resolving the URL specified by candidate’s src attribute’s value relative to the candidate when the src attribute was last changed.
  - ⌛ If absolute URL was not obtained successfully, then end the synchronous section, and jump down to the failed step below.
  - ⌛ If candidate has a type attribute whose value, when parsed as a MIME type (including any codecs described by the codecs parameter, for types that define that parameter), represents a type that the user agent knows it cannot render, then end the synchronous section, and jump down to the failed step below.
  - ⌛ If candidate has a media attribute whose value does not match the environment, then end the synchronous section, and jump down to the failed step below.
  - ⌛ Set the currentSrc attribute to absolute URL.
  - End the synchronous section, continuing the remaining steps asynchronously.
  - Run the resource fetch algorithm with absolute URL. If that algorithm returns without aborting this one, then the load failed.
  - Failed: Queue a task to fire a simple event named error at the candidate element, in the context of the fetching process that was used to try to obtain candidate’s corresponding media resource in the resource fetch algorithm.
  - Asynchronously await a stable state. The synchronous section consists of all the remaining steps of this algorithm until the algorithm says the synchronous section has ended. (Steps in synchronous sections are marked with ⌛.)
  - ⌛ Forget the media element’s media-resource-specific timed tracks.
  - ⌛ Find next candidate: Let candidate be null.
  - ⌛ Search loop: If the node after pointer is the end of the list, then jump to the waiting step below.
  - ⌛ If the node after pointer is a source element, let candidate be that element.
  - ⌛ Advance pointer so that the node before pointer is now the node that was after pointer, and the node after pointer is the node after the node that used to be after pointer, if any.
  - ⌛ If candidate is null, jump back to the search loop step. Otherwise, jump back to the process candidate step.
  - ⌛ Waiting: Set the element’s networkState attribute to the NETWORK_NO_SOURCE value.
  - ⌛ Set the element’s delaying-the-load-event flag to false. This stops delaying the load event.
  - End the synchronous section, continuing the remaining steps asynchronously.
  - Wait until the node after pointer is a node other than the end of the list. (This step might wait forever.)
  - Asynchronously await a stable state. The synchronous section consists of all the remaining steps of this algorithm until the algorithm says the synchronous section has ended. (Steps in synchronous sections are marked with ⌛.)
  - ⌛ Set the element’s delaying-the-load-event flag back to true (this delays the load event again, in case it hasn’t been fired yet).
  - ⌛ Set the networkState back to NETWORK_LOADING.
  - ⌛ Jump back to the find next candidate step above.
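
The candidate-selection logic of the resource selection algorithm can be approximated by a small synchronous function. This is a heavily simplified sketch under explicit assumptions: `selectResource` is a hypothetical name, the element is modelled as a plain object with an optional `src` string and a `sources` array, `canRender` and `mediaMatches` stand in for the user agent's own checks, URL resolution always succeeds, every fetch is assumed to succeed, and the waiting step for later-inserted source elements is not modelled.

```javascript
// Returns { mode, url, erroredSources } where erroredSources lists the
// candidates that would receive an error event before a usable one was found.
function selectResource(element, canRender = () => true, mediaMatches = () => true) {
  // Mode "attribute": the src attribute overrides any source children.
  if (element.src !== undefined) {
    const url = element.src === "" ? null : element.src; // empty src fails
    return { mode: "attribute", url, erroredSources: [] };
  }
  const sources = element.sources ?? [];
  if (sources.length === 0) {
    // Neither src nor source children: networkState would go to NETWORK_EMPTY.
    return { mode: "none", url: null, erroredSources: [] };
  }
  // Mode "children": walk the source elements in tree order.
  const erroredSources = [];
  for (const candidate of sources) {
    const usable =
      Boolean(candidate.src) &&
      (candidate.type === undefined || canRender(candidate.type)) &&
      (candidate.media === undefined || mediaMatches(candidate.media));
    if (usable) {
      return { mode: "children", url: candidate.src, erroredSources };
    }
    erroredSources.push(candidate);
  }
  // All candidates failed: the real algorithm would now wait for new children.
  return { mode: "children", url: null, erroredSources };
}
```

The returned `erroredSources` list mirrors the way an error event is fired at each rejected source element before the next candidate is tried.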

The resource fetch algorithm for a media element and a given absolute URL is as follows:

- Let the current media resource be the resource given by the absolute URL passed to this algorithm. This is now the element’s media resource.

- Begin to fetch the current media resource, from the media element’s Document’s origin, with the force same-origin flag set.

  Every 350ms (±200ms) or for every byte received, whichever is least frequent, queue a task to fire a simple event named progress at the element.

  The stall timeout is a user-agent defined length of time, which should be about three seconds. When a media element that is actively attempting to obtain media data has failed to receive any data for a duration equal to the stall timeout, the user agent must queue a task to fire a simple event named stalled at the element.

  User agents may allow users to selectively block or slow media data downloads. When a media element’s download has been blocked altogether, the user agent must act as if it was stalled (as opposed to acting as if the connection was closed). The rate of the download may also be throttled automatically by the user agent, e.g. to balance the download with other connections sharing the same bandwidth.

  User agents may decide to not download more content at any time, e.g. after buffering five minutes of a one hour media resource, while waiting for the user to decide whether to play the resource or not, or while waiting for user input in an interactive resource. When a media element’s download has been suspended, the user agent must set the networkState to NETWORK_IDLE and queue a task to fire a simple event named suspend at the element. If and when downloading of the resource resumes, the user agent must set the networkState to NETWORK_LOADING.

  The preload attribute provides a hint regarding how much buffering the author thinks is advisable, even in the absence of the autoplay attribute.

  When a user agent decides to completely stall a download, e.g. if it is waiting until the user starts playback before downloading any further content, the element’s delaying-the-load-event flag must be set to false. This stops delaying the load event.

  The user agent may use whatever means necessary to fetch the resource (within the constraints put forward by this and other specifications); for example, reconnecting to the server in the face of network errors, using HTTP range retrieval requests, or switching to a streaming protocol. The user agent must consider a resource erroneous only if it has given up trying to fetch it.

  The networking task source tasks to process the data as it is being fetched must, when appropriate, include the relevant substeps from the following list:

  - If the media data cannot be fetched at all, due to network errors, causing the user agent to give up trying to fetch the resource
  - If the media resource is found to have Content-Type metadata that, when parsed as a MIME type (including any codecs described by the codecs parameter, if the parameter is defined for that type), represents a type that the user agent knows it cannot render (even if the actual media data is in a supported format)
  - If the media data can be fetched but is found by inspection to be in an unsupported format, or can otherwise not be rendered at all

    DNS errors, HTTP 4xx and 5xx errors (and equivalents in other protocols), and other fatal network errors that occur before the user agent has established whether the current media resource is usable, as well as the file using an unsupported container format, or using unsupported codecs for all the data, must cause the user agent to execute the following steps:

    - The user agent should cancel the fetching process.
    - Abort this subalgorithm, returning to the resource selection algorithm.

  - Once enough of the media data has been fetched to determine the duration of the media resource, its dimensions, and other metadata, and once the timed tracks are ready

    This indicates that the resource is usable. The user agent must follow these substeps:

    - Establish the media timeline for the purposes of the current playback position, the earliest possible position, and the initial playback position, based on the media data.
    - Update the timeline offset to the date and time that corresponds to the zero time in the media timeline established in the previous step, if any. If no explicit time and date is given by the media resource, the timeline offset must be set to Not-a-Number (NaN).
    - Set the current playback position to the earliest possible position.
    - Update the duration attribute with the time of the last frame of the resource, if known, on the media timeline established above. If it is not known (e.g. a stream that is in principle infinite), update the duration attribute to the value positive Infinity.

      The user agent will queue a task to fire a simple event named durationchange at the element at this point.
    - Set the readyState attribute to HAVE_METADATA.
    - For video elements, set the videoWidth and videoHeight attributes.
    - Queue a task to fire a simple event named loadedmetadata at the element.

      Before this task is run, as part of the event loop mechanism, the rendering will have been updated to resize the video element if appropriate.
    - If either the media resource or the address of the current media resource indicates a particular start time, then set the initial playback position to that time and then seek to that time. Ignore any resulting exceptions (if the position is out of range, it is effectively ignored).

      For example, a fragment identifier could be used to indicate a start position.
    - Once the readyState attribute reaches HAVE_CURRENT_DATA, after the loadeddata event has been fired, set the element’s delaying-the-load-event flag to false. This stops delaying the load event.

      A user agent that is attempting to reduce network usage while still fetching the metadata for each media resource would also stop buffering at this point, causing the networkState attribute to switch to the NETWORK_IDLE value.

    The user agent is required to determine the duration of the media resource and go through this step before playing.
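The metadata substeps above can be sketched as a pure function over a small state record. This is only an illustration under stated assumptions: `ParsedMetadata`, `MediaState`, and `applyMetadata` are hypothetical names, not part of any browser API, and seeking is reduced to "use the start time if it is in range, otherwise keep the earliest position". Only the numeric value of `HAVE_METADATA` mirrors the real `HTMLMediaElement` constant.

```typescript
// Hypothetical description of what a demuxer might report once the
// headers of a media resource have been read.
interface ParsedMetadata {
  earliestPosition: number;                      // seconds on the media timeline
  lastFrameTime?: number;                        // seconds, if the end is known
  zeroTimeDate?: Date;                           // date for timeline zero, if any
  videoSize?: { width: number; height: number }; // absent for audio
  startTime?: number;                            // e.g. from a fragment identifier
}

// Hypothetical snapshot of the attributes touched by the substeps.
interface MediaState {
  timelineOffset: number; // milliseconds since the epoch, or NaN
  currentTime: number;
  duration: number;
  readyState: number;
  videoWidth: number;
  videoHeight: number;
  queuedEvents: string[];
}

const HAVE_METADATA = 1; // same value as HTMLMediaElement.HAVE_METADATA

function applyMetadata(meta: ParsedMetadata): MediaState {
  // Unknown end time (for example a live stream) maps to positive Infinity.
  const duration = meta.lastFrameTime !== undefined ? meta.lastFrameTime : Infinity;

  const state: MediaState = {
    // No explicit date in the resource means the offset is NaN.
    timelineOffset: meta.zeroTimeDate !== undefined ? meta.zeroTimeDate.getTime() : NaN,
    currentTime: meta.earliestPosition,
    duration: duration,
    readyState: HAVE_METADATA,
    // Assumption: dimensions stay 0 when there is no video track.
    videoWidth: meta.videoSize !== undefined ? meta.videoSize.width : 0,
    videoHeight: meta.videoSize !== undefined ? meta.videoSize.height : 0,
    // durationchange is queued when the duration is updated,
    // loadedmetadata once readyState has been set.
    queuedEvents: ["durationchange", "loadedmetadata"],
  };

  // Simplified seek: an out-of-range start time is silently ignored.
  if (meta.startTime !== undefined) {
    const inRange = meta.startTime >= meta.earliestPosition && meta.startTime <= duration;
    if (inRange) {
      state.currentTime = meta.startTime;
    }
  }
  return state;
}
```

Calling `applyMetadata({ earliestPosition: 0 })` models a live stream with no known end: the duration is `Infinity` and the timeline offset is `NaN`.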

  - Once the entire media resource has been fetched (but potentially before any of it has been decoded)

    Queue a task to fire a simple event named progress at the media element.

  - If the connection is interrupted, causing the user agent to give up trying to fetch the resource

    Fatal network errors that occur after the user agent has established whether the current media resource is usable must cause the user agent to execute the following steps:

    - The user agent should cancel the fetching process.
    - Set the error attribute to a new MediaError object whose code attribute is set to MEDIA_ERR_NETWORK.
    - Queue a task to fire a simple event named error at the media element.
    - If the media element’s readyState attribute has a value equal to HAVE_NOTHING, set the element’s networkState attribute to the NETWORK_EMPTY value and queue a task to fire a simple event named emptied at the element. Otherwise, set the element’s networkState attribute to the NETWORK_IDLE value.
    - Set the element’s delaying-the-load-event flag to false. This stops delaying the load event.
    - Abort the overall resource selection algorithm.

  - If the media data is corrupted

    Fatal errors in decoding the media data that occur after the user agent has established whether the current media resource is usable must cause the user agent to execute the following steps:

    - The user agent should cancel the fetching process.
    - Set the error attribute to a new MediaError object whose code attribute is set to MEDIA_ERR_DECODE.
    - Queue a task to fire a simple event named error at the media element.
    - If the media element’s readyState attribute has a value equal to HAVE_NOTHING, set the element’s networkState attribute to the NETWORK_EMPTY value and queue a task to fire a simple event named emptied at the element. Otherwise, set the element’s networkState attribute to the NETWORK_IDLE value.
    - Set the element’s delaying-the-load-event flag to false. This stops delaying the load event.
    - Abort the overall resource selection algorithm.

  - If the media data fetching process is aborted by the user

    If the fetching process is aborted by the user, e.g. because the user navigated the browsing context to another page, the user agent must execute the following steps. These steps are not followed if the load() method itself is invoked while these steps are running, as the steps above handle that particular kind of abort.

    - The user agent should cancel the fetching process.
    - Set the error attribute to a new MediaError object whose code attribute is set to MEDIA_ERR_ABORTED.
    - Queue a task to fire a simple event named abort at the media element.
    - If the media element’s readyState attribute has a value equal to HAVE_NOTHING, set the element’s networkState attribute to the NETWORK_EMPTY value and queue a task to fire a simple event named emptied at the element. Otherwise, set the element’s networkState attribute to the NETWORK_IDLE value.
    - Set the element’s delaying-the-load-event flag to false. This stops delaying the load event.
    - Abort the overall resource selection algorithm.
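The three fatal-failure branches above (interrupted connection, corrupted data, user abort) follow the same sequence of steps and differ only in the MediaError code and in the name of the first event. A minimal sketch of that shared shape follows. The `Failure` and `Outcome` types and the `handleFatalFailure` function are hypothetical names introduced for illustration. The numeric constants mirror the values exposed on `MediaError` and `HTMLMediaElement`.

```typescript
// Constants mirroring the DOM values.
const HAVE_NOTHING = 0;
const NETWORK_EMPTY = 0;
const NETWORK_IDLE = 1;
const MEDIA_ERR_ABORTED = 1;
const MEDIA_ERR_NETWORK = 2;
const MEDIA_ERR_DECODE = 3;

// Hypothetical labels for the three branches.
type Failure = "network" | "decode" | "user-abort";

// Hypothetical record of what the steps leave behind.
interface Outcome {
  errorCode: number;          // value of error.code
  networkState: number;
  events: string[];           // events queued, in order
  delayingLoadEvent: boolean; // always false after these steps
}

function handleFatalFailure(kind: Failure, readyState: number): Outcome {
  // Pick the MediaError code for the branch.
  let errorCode = MEDIA_ERR_ABORTED;
  if (kind === "network") {
    errorCode = MEDIA_ERR_NETWORK;
  } else if (kind === "decode") {
    errorCode = MEDIA_ERR_DECODE;
  }

  // A user abort fires "abort"; the other two branches fire "error".
  const events: string[] = [kind === "user-abort" ? "abort" : "error"];

  // With no data at all the element is emptied, otherwise it goes idle.
  let networkState = NETWORK_IDLE;
  if (readyState === HAVE_NOTHING) {
    networkState = NETWORK_EMPTY;
    events.push("emptied");
  }

  // The delaying-the-load-event flag is cleared in every branch.
  return { errorCode, networkState, events, delayingLoadEvent: false };
}
```

In a page script, the same distinction is visible from the outside by inspecting `error.code` on the media element after an `error` event.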

  - If the media data can be fetched but has non-fatal errors or uses, in part, codecs that are unsupported, preventing the user agent from rendering the content completely correctly but not preventing playback altogether

    The server returning data that is partially usable but cannot be optimally rendered must cause the user agent to render just the bits it can handle, and ignore the rest.

  - If the media resource is found to declare a media-resource-specific timed track that the user agent supports

    If the media resource’s origin is the same origin as the media element’s Document’s origin, queue a task to run the steps to expose a media-resource-specific timed track with the relevant data.

    Cross-origin files do not expose their subtitles in the DOM, for security reasons. However, user agents may still provide the user with access to such data in their user interface.
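The stall timeout rule stated earlier in the fetch step (no data for about three seconds leads to a stalled event) can be modelled with a small time-driven helper. This is a rough sketch under assumptions: `StallDetector` is a hypothetical class, time is passed in explicitly as milliseconds instead of being read from a clock, and firing only once per stall period is a choice made here, not something the text above requires.

```typescript
// Hypothetical helper that decides when a "stalled" event should be queued.
class StallDetector {
  private lastDataAt: number;
  private stalledFired: boolean = false;
  private timeoutMs: number;

  // timeoutMs is user-agent defined; "about three seconds" is the guidance.
  constructor(startMs: number, timeoutMs: number = 3000) {
    this.lastDataAt = startMs;
    this.timeoutMs = timeoutMs;
  }

  // Call whenever media data arrives; this ends any current stall.
  onData(nowMs: number): void {
    this.lastDataAt = nowMs;
    this.stalledFired = false;
  }

  // Call periodically; returns true when "stalled" should be queued.
  tick(nowMs: number): boolean {
    const silentFor = nowMs - this.lastDataAt;
    if (!this.stalledFired && silentFor >= this.timeoutMs) {
      this.stalledFired = true; // assumption: one event per stall period
      return true;
    }
    return false;
  }
}
```

A download that the user agent has blocked altogether would simply never call `onData`, so the helper reports a stall in the same way as for a silent connection.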

When the networking task source has queued the last task as part of fetching the media resource

(i.e. once the download has completed), if the fetching process

completes without errors, including decoding the media data, and