I needed to parse a site, but I got a 403 Forbidden error.

Here is the code:

import requests

url = 'http://worldagnetwork.com/'
result = requests.get(url)
print(result.content.decode())

The output is:

<html>
<head><title>403 Forbidden</title></head>
<body bgcolor="white">
<center><h1>403 Forbidden</h1></center>
<hr><center>nginx</center>
</body>
</html>

What is the problem?

Gino Mempin


asked Jul 20, 2016 at 19:36

Толкачёв Иван


It seems the page rejects GET requests that do not identify a User-Agent. I visited the page with a browser (Chrome) and copied the User-Agent header of the GET request (look in the Network tab of the developer tools):

import requests

url = 'http://worldagnetwork.com/'

headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 Safari/537.36'}

result = requests.get(url, headers=headers)

print(result.content.decode())

# <!doctype html>

# <!--[if lt IE 7 ]><html class="no-js ie ie6" lang="en"> <![endif]-->

# <!--[if IE 7 ]><html class="no-js ie ie7" lang="en"> <![endif]-->

# <!--[if IE 8 ]><html class="no-js ie ie8" lang="en"> <![endif]-->

# <!--[if (gte IE 9)|!(IE)]><!--><html class="no-js" lang="en"> <!--<![endif]-->

# ...
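To see what the server is reacting to, it can help to compare the User-Agent that requests sends by default with the browser string above. The sketch below only prepares the requests and sends nothing over the network. The version number it prints depends on your installed requests.

```python
import requests

url = "http://worldagnetwork.com/"

# requests identifies itself as "python-requests/<version>" unless told otherwise
print(requests.utils.default_user_agent())

# Prepare (but do not send) a request through a Session to see the merged headers
session = requests.Session()
default_req = session.prepare_request(requests.Request("GET", url))
print(default_req.headers["User-Agent"])

# The same request with a browser-like User-Agent, which overrides the default
browser_ua = ("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_5) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/50.0.2661.102 Safari/537.36")
browser_req = session.prepare_request(
    requests.Request("GET", url, headers={"User-Agent": browser_ua})
)
print(browser_req.headers["User-Agent"])
```

Headers passed on the individual request take precedence over the session defaults. That is why the second prepared request carries the Chrome string instead of `python-requests/...`.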

answered Jul 20, 2016 at 19:48


Just adding to Alberto’s answer:

If you still get a 403 Forbidden error after adding a User-Agent, you may need to add more headers, such as Referer:

headers = {

'User-Agent': '...',

'referer': 'https://...'

}

The headers can be found in the Network > Headers > Request Headers of the Developer Tools. (Press F12 to toggle it.)
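If several headers are needed on every request, one option is to set them once on a `requests.Session`. The sketch below uses placeholder values: the Referer URL is hypothetical, and the User-Agent stands in for whatever your browser shows in the Network tab. HTTP header names are case-insensitive, and the standard spelling of this one is the historical misspelling "Referer".

```python
import requests

session = requests.Session()
# Session-level headers are merged into every request made with this session
session.headers.update({
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",  # placeholder UA
    "Referer": "https://example.com/",  # hypothetical referring page
})

# Inspect the headers that would be sent, without touching the network
prepared = session.prepare_request(requests.Request("GET", "https://example.com/page"))
print(prepared.headers["Referer"])

# A header passed on a single request overrides the session default
override = session.prepare_request(
    requests.Request("GET", "https://example.com/page",
                     headers={"Referer": "https://example.com/other"})
)
print(override.headers["Referer"])

# To actually fetch: response = session.get("https://example.com/page")
```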

Gino Mempin


answered Jul 9, 2019 at 5:44


If you are the server's owner/admin and the accepted solution didn't work for you, try disabling CSRF protection.

I am using Spring (Java), so the setup requires you to make a SecurityConfig.java file containing:

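import org.springframework.context.annotation.Configuration;
import org.springframework.security.config.annotation.web.builders.HttpSecurity;
import org.springframework.security.config.annotation.web.configuration.EnableWebSecurity;
import org.springframework.security.config.annotation.web.configuration.WebSecurityConfigurerAdapter;

@Configuration
@EnableWebSecurity
public class SecurityConfig extends WebSecurityConfigurerAdapter {

    @Override
    protected void configure(HttpSecurity http) throws Exception {
        http.csrf().disable();
    }

    // ...
}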

answered May 26, 2018 at 11:31

Aleksandar


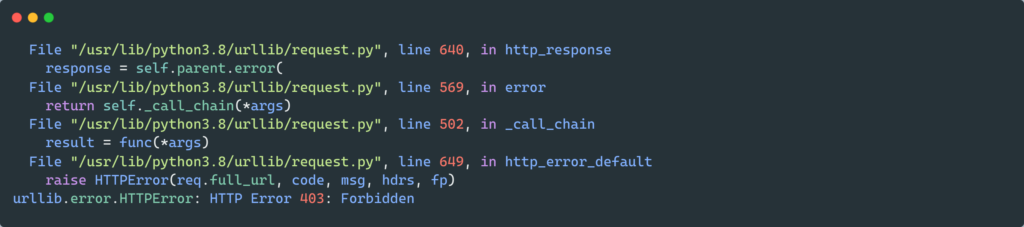

The urllib module can be used to make HTTP requests to a site. Unlike the requests library, urllib is part of Python's standard library, which reduces dependencies. In the following article, we will discuss why urllib.error.HTTPError: HTTP Error 403: Forbidden occurs and how to resolve it.

What is a 403 error?

The 403 error occurs when a user tries to access a forbidden page, in other words, a page they aren't allowed to access. 403 is an HTTP status code. Status codes are the numeric codes a web server returns to tell the client how its request was handled, and codes in the 4xx range indicate a problem on the client side. For instance, 200 means everything worked as expected, with no errors. The full list of HTTP status codes is defined in the HTTP specification.

ModSecurity is a web application firewall module that protects websites from attacks. Among other checks, it tries to tell whether requests come from a human user or from an automated bot. It blocks requests from known spider/bot user agents that try to scrape the site. urllib identifies itself with a User-Agent such as Python-urllib/3.3, so it is easily detected as non-human and blocked by ModSecurity.

from urllib.request import Request, urlopen

url = "https://www.gamefaqs.com"
request_site = Request(url)
webpage = urlopen(request_site).read()  # blocked with HTTP Error 403: Forbidden, as described above
print(webpage[:200])
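urllib's default header can be inspected without sending anything. The minimal sketch below uses only the standard library. `build_opener()` creates an opener with the same default headers that `urlopen()` uses, and its `addheaders` list shows the Python-urllib User-Agent that bot-blocking rules can match on.

```python
import sys
import urllib.request

opener = urllib.request.build_opener()
default_headers = dict(opener.addheaders)
print(default_headers)  # the User-agent entry reads "Python-urllib/<major>.<minor>"

expected = "Python-urllib/%d.%d" % sys.version_info[:2]
print(default_headers["User-agent"] == expected)

# Replacing addheaders changes the User-Agent for every request this opener makes
opener.addheaders = [("User-Agent", "Mozilla/5.0")]
# urllib.request.install_opener(opener)  # optional: make plain urlopen() use it too
```

Overriding `addheaders` is an alternative to passing `headers=` on each `Request`, and is handy when many URLs are fetched with the same opener.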

ModSecurity blocks the request and returns HTTP Error 403: Forbidden if the request was made without a valid user agent. The User-Agent is a request header whose string lets network protocol peers identify the following:

- The operating system, for instance, Windows, Linux, or macOS.

- The browser (or other client software) making the request.

The browser sends its user agent to every website it connects to, in the User-Agent field of the HTTP request headers. The value differs from browser to browser.

Why do sites use security that sends 403 responses?

According to one survey, more than 50% of internet traffic comes from automated sources such as scrapers and bots, so sites need ways to filter it. Scrapers also tend to send many requests in quick succession, and sites enforce rate limits that cap how many requests a client can make in a given period. A client (here, the scraper) that exceeds the limit gets an error, for instance, urllib.error.HTTPError: HTTP Error 403: Forbidden.

Resolving urllib.error.HTTPError: HTTP Error 403: Forbidden

This error occurs when ModSecurity detects urllib's scraping bot and blocks it. To resolve it, we include a user agent in our scraper's requests. This makes it much less likely that the website blocks us and raises the error. Let's take a look at two ways to avoid urllib.error.HTTPError: HTTP Error 403: Forbidden.

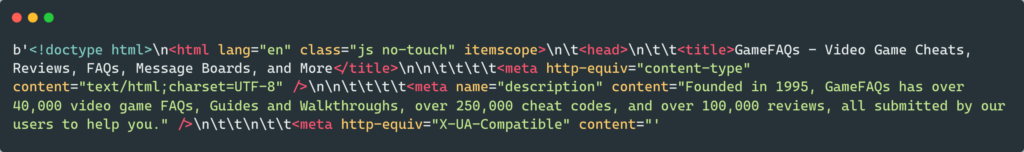

Method 1: using user-agent

from urllib.request import Request, urlopen

url = "https://www.gamefaqs.com"
# Send a browser-like User-Agent instead of urllib's default one
request_site = Request(url, headers={"User-Agent": "Mozilla/5.0"})
webpage = urlopen(request_site).read()
print(webpage[:500])

- In the code above, we have added a new parameter called headers, which sets the user agent to Mozilla/5.0. The user-agent string gives the web server details about the user's device, OS, and browser. With a browser-like value, the request is less likely to be blocked as a bot.

- For instance, the user agent string can tell the server that you are using the Brave browser on Linux. The server can then tailor its response accordingly.
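Before sending, you can check that the header is really attached to the `Request`. The small offline sketch below shows how. Note that urllib stores header names via `str.capitalize()`, so the key to look up is `User-agent`, not `User-Agent`.

```python
from urllib.request import Request

url = "https://www.gamefaqs.com"
request_site = Request(url, headers={"User-Agent": "Mozilla/5.0"})

# urllib normalizes the name "User-Agent" to "User-agent" when storing it
print(request_site.header_items())
print(request_site.get_header("User-agent"))   # the value we set
print(request_site.get_header("User-Agent"))   # None: lookups use the normalized name
```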

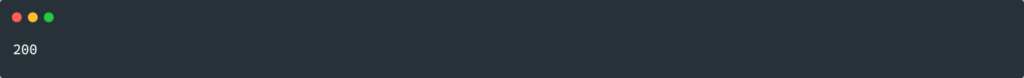

Method 2: using Session object

There are times when even using a user agent won't prevent urllib.error.HTTPError: HTTP Error 403: Forbidden. Then we can use the Session object of the requests module. For instance:

import requests

url = "https://www.gamefaqs.com"
session_obj = requests.Session()
response = session_obj.get(url, headers={"User-Agent": "Mozilla/5.0"})
print(response.status_code)

The site could be using cookies as a defense against scraping: it may set cookies and expect them to be echoed back on later requests. Scraping may also be against the site's policy.

A Session object keeps cookies between requests, so any cookies the site sets are sent back automatically.
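The sketch below shows how a Session handles cookies. A cookie stored in the session's jar is sent back on later requests to a matching domain. The cookie name and value are hypothetical stand-ins for whatever the site sets, and the request is only prepared, not sent.

```python
import requests

session = requests.Session()

# Simulate a cookie the site set on an earlier response (name and value are hypothetical)
session.cookies.set("sessionid", "abc123", domain="www.gamefaqs.com")

# Any later request from this session to that domain carries the stored cookie
prepared = session.prepare_request(
    requests.Request("GET", "https://www.gamefaqs.com/",
                     headers={"User-Agent": "Mozilla/5.0"})
)
print(prepared.headers.get("Cookie"))
```

In real use you would not set the cookie by hand. The first `session.get(...)` stores whatever `Set-Cookie` headers the site returns, and the next call sends them back.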

Catching urllib.error.httperror

urllib.error.HTTPError can be caught with a try-except block. Catch HTTPError specifically rather than using a bare except. A bare except captures every exception, including SystemExit and KeyboardInterrupt, which makes problems hard to debug. Let's see how we can do this:

from urllib.request import Request, urlopen
from urllib.error import HTTPError

url = "https://www.gamefaqs.com"

try:
    request_site = Request(url)
    webpage = urlopen(request_site).read()
    print(webpage[:500])
except HTTPError as e:
    # Only HTTP errors land here; SystemExit, KeyboardInterrupt, etc. still propagate
    print("Error occurred!")
    print(e)
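Inside the `except` block, the HTTPError object carries more than its message. It also holds the status code, the reason phrase, the response headers, and the body of the error page (often the server's own 403 page). The sketch below shows a hypothetical helper that collects them. The HTTPError is built by hand only so the example runs without a network call.

```python
import io
from email.message import Message
from urllib.error import HTTPError


def summarize(err: HTTPError) -> dict:
    """Collect the useful parts of an HTTPError (hypothetical helper)."""
    return {
        "status": err.code,                   # e.g. 403
        "reason": err.reason,                 # e.g. "Forbidden"
        "server": err.headers.get("Server"),  # which server answered, if it says
        "body": err.read()[:200],             # the error page reads like a response
    }


# In real code `err` comes from `except HTTPError as err:` around urlopen()
hdrs = Message()
hdrs["Server"] = "nginx"
fake = HTTPError("https://www.gamefaqs.com", 403, "Forbidden", hdrs,
                 io.BytesIO(b"<html><head><title>403 Forbidden</title></head></html>"))

print(str(fake))
info = summarize(fake)
print(info)
```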

FAQs

How to fix the 403 error in the browser?

You can try the following steps to resolve a 403 error in the browser: refresh the page, recheck the URL, clear the browser cookies, and check your user credentials.

Why do scraping modules often get 403 errors?

Scrapers often don't send browser-like headers, such as a User-Agent, with their requests. This makes them easy for ModSecurity and similar tools to detect, so scraping modules often get 403 errors.

Conclusion

In this article, we covered why and when urllib.error.HTTPError: HTTP Error 403: Forbidden can occur. We also looked at different ways to resolve the error and at how to handle it.


HTTP Error 403 is a common error encountered while web scraping using Python 3. It indicates that the server understood the request but refuses to fulfill it, for example because the client lacks sufficient authorization or the server has classified it as an unwanted bot. This error can be encountered for a variety of reasons, including IP blocking, CAPTCHAs, or rate limiting. To resolve the issue, you can try several methods, including changing the User Agent, using proxies, and implementing wait time between requests.

Method 1: Changing the User Agent

If you encounter HTTP error 403 while web scraping with Python 3, it means that the server is denying you access to the webpage. One common solution to this problem is to change the user agent of your web scraper. The user agent is a string that identifies the web scraper to the server. By changing the user agent, you can make your web scraper appear as a regular web browser to the server.

Here is an example that shows how to change the user agent of your web scraper using the requests library:

import requests

url = 'https://example.com'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'}
response = requests.get(url, headers=headers)
print(response.content)

In this example, we set the User-Agent header to a string that mimics the user agent of the Google Chrome web browser. You can find the user agent string of your favorite web browser by searching for "my user agent" on Google.

By setting the User-Agent header, we can make our web scraper appear as a regular web browser to the server. This can help us bypass HTTP error 403 and access the webpage we want to scrape.

That's it! Changing the user agent of your web scraper is often enough to fix HTTP error 403 in Python 3 web scraping.

Method 2: Using Proxies

If you are encountering HTTP error 403 while web scraping with Python 3, one possible cause is that the website is blocking your IP address because of frequent requests. One way to solve this problem is by using proxies. Proxies let you make requests to the website from different IP addresses, making it harder for the website to block your requests. Here is how you can fix HTTP error 403 in Python 3 web scraping with proxies:

Step 1: Install Required Libraries

You need to install the requests and beautifulsoup4 (imported as bs4) libraries to make HTTP requests and parse HTML, respectively. You can install them using pip:

pip install requests
pip install beautifulsoup4

Step 2: Get a List of Proxies

You need to get a list of proxies that you can use to make requests to the website. There are many websites that provide free proxies, such as https://free-proxy-list.net/. You can scrape the website to get a list of proxies:

import requests
from bs4 import BeautifulSoup

url = 'https://free-proxy-list.net/'
response = requests.get(url)
soup = BeautifulSoup(response.text, 'html.parser')

# The table id and column order reflect the page layout at the time of writing
table = soup.find('table', {'id': 'proxylisttable'})
rows = table.tbody.find_all('tr')

proxies = []
for row in rows:
    cols = row.find_all('td')
    if cols[6].text == 'yes':  # the "Https" column
        proxy = cols[0].text + ':' + cols[1].text  # IP address:port
        proxies.append(proxy)

This code scrapes the website and gets a list of HTTP proxies that support HTTPS. The proxies are stored in the proxies list.

Step 3: Make Requests with Proxies

You can use the requests library to make requests to the website with a proxy. Here is an example that makes a request to https://www.example.com with a random proxy from the proxies list built in Step 2:

import random

import requests

url = 'https://www.example.com'
proxy = random.choice(proxies)
response = requests.get(url, proxies={'https': proxy})

if response.status_code == 200:
    print(response.text)
else:
    print('Request failed with status code:', response.status_code)

This code selects a random proxy from the proxies list and makes a request to https://www.example.com through that proxy. If the request is successful, it prints the response text. Otherwise, it prints the status code of the failed request.
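requests chooses a proxy by the scheme of the target URL. A mapping with only an `'https'` key is therefore used for https:// URLs only, and an http:// URL would go out directly from your own IP. The sketch below is a hypothetical helper that builds a mapping for both schemes from an "ip:port" string. It assumes the listed proxies are plain HTTP proxies, which tunnel HTTPS traffic with CONNECT.

```python
def proxy_mapping(proxy: str) -> dict:
    """Turn 'ip:port' into a requests-style proxies dict (hypothetical helper)."""
    if "://" not in proxy:
        proxy = "http://" + proxy  # assume a plain HTTP proxy when no scheme is given
    # Route both http:// and https:// target URLs through the same proxy
    return {"http": proxy, "https": proxy}


print(proxy_mapping("203.0.113.5:8080"))

# Usage with the list from Step 2 (network call left commented out):
# response = requests.get(url, proxies=proxy_mapping(random.choice(proxies)), timeout=5)
```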

Step 4: Handle Exceptions

You need to handle exceptions that may occur while making requests with proxies. Here is an example that handles exceptions and retries the request with a different proxy:

import random

import requests
from requests.exceptions import ProxyError, ConnectionError, Timeout

url = 'https://www.example.com'
max_attempts = 10  # give up after this many tries instead of looping forever

for attempt in range(max_attempts):
    if not proxies:
        print('No working proxies left.')
        break
    proxy = random.choice(proxies)
    try:
        response = requests.get(url, proxies={'https': proxy}, timeout=5)
    except (ProxyError, ConnectionError, Timeout):
        print('Proxy error. Retrying with a different proxy...')
        proxies.remove(proxy)  # drop the failing proxy so it is not picked again
        continue
    if response.status_code == 200:
        print(response.text)
        break
    print('Request failed with status code:', response.status_code)

This code retries the request with a different random proxy, up to max_attempts times, until it succeeds. It handles the ProxyError, ConnectionError, and Timeout exceptions that may occur while making requests with proxies, and removes a proxy from the list once it fails.

Method 3: Implementing Wait Time between Requests

When you are scraping a website, you might encounter an HTTP error 403, which means that the server is denying your request. This can happen when the server detects that you are sending too many requests in a short period of time, and it wants to protect itself from being overloaded.

One way to fix this problem is to implement wait time between requests. This means that you will wait a certain amount of time before sending the next request, which will give the server time to process the previous request and prevent it from being overloaded.

Here is an example that shows how to implement wait time between requests using the time module:

import time

import requests

url = 'https://example.com'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'}

for i in range(5):
    response = requests.get(url, headers=headers)
    print(response.status_code)
    time.sleep(1)  # pause one second before the next request

In this example, we send requests to https://example.com with headers that mimic a browser request. We use a for loop to send 5 requests with a 1-second delay between them, using the time.sleep() function.

Another way to implement wait time between requests is to use a random delay. This makes your requests less predictable and less likely to be detected as automated. Here is an example that shows how to implement a random delay using the random module:

import random
import time

import requests

url = 'https://example.com'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'}

for i in range(5):
    response = requests.get(url, headers=headers)
    print(response.status_code)
    time.sleep(random.randint(1, 5))  # wait a random whole number of seconds, 1 to 5

In this example, we send requests to https://example.com with headers that mimic a browser request. We use a for loop to send 5 requests, each followed by a random delay of 1 to 5 whole seconds chosen with random.randint(). Use random.uniform(1, 5) if you want fractional delays.

By implementing wait time between requests, you can reduce the chance of HTTP error 403 and help your web scraping code run smoothly.
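A fixed `time.sleep()` waits the full delay even when the request itself already took a while. The sketch below shows a hypothetical `Throttle` helper that sleeps only for the remainder of a minimum interval since the previous call. It also supports optional random jitter, in the spirit of the randomized example.

```python
import random
import time


class Throttle:
    """Keep at least `min_interval` seconds (plus optional random jitter)
    between consecutive calls to wait(). Hypothetical helper, not a library API."""

    def __init__(self, min_interval: float, jitter: float = 0.0):
        self.min_interval = min_interval
        self.jitter = jitter
        self._last = None  # time.monotonic() value recorded at the previous call

    def wait(self) -> float:
        """Sleep only for whatever is left of the interval; return seconds slept."""
        slept = 0.0
        if self._last is not None:
            target = self.min_interval + random.uniform(0, self.jitter)
            remaining = target - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
                slept = remaining
        self._last = time.monotonic()
        return slept


# Usage with the loops above (network call left commented out):
# throttle = Throttle(min_interval=1.0, jitter=4.0)  # roughly 1 to 5 seconds apart
# for i in range(5):
#     throttle.wait()
#     response = requests.get(url, headers=headers)
#     print(response.status_code)
```

`time.monotonic()` is used instead of `time.time()` so that changes to the system clock cannot shorten or stretch the gap.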

- The urllib Module in Python
- Check robots.txt to Prevent urllib HTTP Error 403 Forbidden Message
- Adding Cookie to the Request Headers to Solve urllib HTTP Error 403 Forbidden Message
- Use Session Object to Solve urllib HTTP Error 403 Forbidden Message

Today’s article explains how to deal with an error message (exception), urllib.error.HTTPError: HTTP Error 403: Forbidden, produced by the error class on behalf of the request classes when it faces a forbidden resource.

The urllib Module in Python

The urllib package handles URLs in Python via different protocols. It is popular with web scrapers who want to obtain data from a particular website.

urllib contains classes, methods, and functions that perform operations such as opening and reading URLs, parsing URLs, and parsing robots.txt files. It is made up of four modules: urllib.request, urllib.error, urllib.parse, and urllib.robotparser.

Check robots.txt to Prevent urllib HTTP Error 403 Forbidden Message

When we use the urllib.request module to interact with servers, we might run into specific errors. One of those errors is the HTTP 403 error.

We get the urllib.error.HTTPError: HTTP Error 403: Forbidden error message from the urllib package while reading a URL. HTTP 403, the Forbidden error, is an HTTP status code indicating that the server refuses access to the requested resource.

Therefore, when we see this kind of error message, urllib.error.HTTPError: HTTP Error 403: Forbidden, the server understands the request but decides not to process or authorize the request that we sent.

To understand why the website we are accessing is not processing our request, one important file to check is robots.txt. Before web scraping or interacting with a website, it is often advised to review this file to know what to expect and avoid further trouble.

To check it on any website, we can follow the format below.

https://<website.com>/robots.txt

For example, check YouTube, Amazon, and Google robots.txt files.

https://www.youtube.com/robots.txt

https://www.amazon.com/robots.txt

https://www.google.com/robots.txt

Checking YouTube robots.txt gives the following result.

# robots.txt file for YouTube

# Created in the distant future (the year 2000) after

# the robotic uprising of the mid-'90s wiped out all humans.

User-agent: Mediapartners-Google*

Disallow:

User-agent: *

Disallow: /channel/*/community

Disallow: /comment

Disallow: /get_video

Disallow: /get_video_info

Disallow: /get_midroll_info

Disallow: /live_chat

Disallow: /login

Disallow: /results

Disallow: /signup

Disallow: /t/terms

Disallow: /timedtext_video

Disallow: /user/*/community

Disallow: /verify_age

Disallow: /watch_ajax

Disallow: /watch_fragments_ajax

Disallow: /watch_popup

Disallow: /watch_queue_ajax

Sitemap: https://www.youtube.com/sitemaps/sitemap.xml

Sitemap: https://www.youtube.com/product/sitemap.xml

We can see a lot of Disallow lines there. Each Disallow line marks an area of the website that crawlers are asked not to access. robots.txt itself is advisory rather than enforced, but sites that block bots often refuse requests to these areas, so requests to them may not be processed.

Other robots.txt files may also contain Allow lines, which explicitly permit certain paths. In the YouTube file above, for example, /comment (http://youtube.com/comment) is disallowed for every user agent, including the urllib module.

Let’s write code to scrape data from a website that returns an HTTP 403 error when accessed.

Example Code:

import re
import urllib.request

webpage = urllib.request.urlopen('https://www.cmegroup.com/markets/products.html?redirect=/trading/products/#cleared=Options&sortField=oi').read()
webpage = webpage.decode('utf-8')  # the regex patterns below are str, so decode the bytes first

findrows = re.compile('<tr class="- banding(?:On|Off)>(.*?)</tr>')
findlink = re.compile('<a href =">(.*)</a>')

row_array = re.findall(findrows, webpage)
links = re.findall(findlink, webpage)

print(len(row_array))

Output:

Traceback (most recent call last):

File "c:\Users\akinl\Documents\Python\index.py", line 7, in <module>

webpage = urllib.request.urlopen('https://www.cmegroup.com/markets/products.html?redirect=/trading/products/#cleared=Options&sortField=oi').read()

File "C:\Python310\lib\urllib\request.py", line 216, in urlopen

return opener.open(url, data, timeout)

File "C:\Python310\lib\urllib\request.py", line 525, in open

response = meth(req, response)

File "C:\Python310\lib\urllib\request.py", line 634, in http_response

response = self.parent.error(

File "C:\Python310\lib\urllib\request.py", line 563, in error

return self._call_chain(*args)

File "C:\Python310\lib\urllib\request.py", line 496, in _call_chain

result = func(*args)

File "C:\Python310\lib\urllib\request.py", line 643, in http_error_default

raise HTTPError(req.full_url, code, msg, hdrs, fp)

urllib.error.HTTPError: HTTP Error 403: Forbidden

The reason is that we are forbidden from accessing the website. If we check the robots.txt file, we notice that https://www.cmegroup.com/markets/ is not under a Disallow rule. However, further down the robots.txt file for the website we wanted to scrape, we find the following.

User-agent: Python-urllib/1.17

Disallow: /

This rule means that the user agent named Python-urllib (here, version 1.17) is not allowed to crawl any URL on the site. In other words, the site asks crawlers built on the Python urllib module not to crawl it.

Therefore, check or parse robots.txt to know which resources we may access. We can parse a robots.txt file using the RobotFileParser class from the urllib.robotparser module. Doing so can help our code avoid the urllib.error.HTTPError: HTTP Error 403: Forbidden error message.
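The sketch below uses `urllib.robotparser.RobotFileParser` on a hypothetical robots.txt in the spirit of the files shown above. It parses the rules from a string, so nothing is fetched. For a live site you would call `set_url("https://<website.com>/robots.txt")` and then `read()` instead of `parse()`. The urllib rule is written without a version suffix on purpose. robotparser lowercases the part of your user agent before the "/" and checks whether each rule's agent name appears in it, so a rule written as `Python-urllib/1.17` would not match `Python-urllib/3.x` in this parser.

```python
import sys
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt (not any real site's file)
ROBOTS_TXT = """\
User-agent: Python-urllib
Disallow: /

User-agent: *
Disallow: /login
Disallow: /comment
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() takes an iterable of lines

# urllib's default user agent, e.g. "Python-urllib/3.11"
urllib_agent = "Python-urllib/%d.%d" % sys.version_info[:2]

for agent in (urllib_agent, "Mozilla/5.0"):
    for path in ("/markets/products.html", "/login"):
        url = "https://www.example.com" + path
        print(agent, path, parser.can_fetch(agent, url))
```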

Adding Cookie to the Request Headers to Solve urllib HTTP Error 403 Forbidden Message

Passing a valid user agent as a header parameter often fixes the problem quickly. However, the website may also use cookies as an anti-scraping measure: it may set cookies and expect them to be echoed back on later requests. Scraping may also be against the site's policy.

from urllib.request import Request, urlopen


def get_page_content(url, head):
    req = Request(url, headers=head)
    return urlopen(req)


url = 'https://example.com'
head = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.4844.84 Safari/537.36',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'Accept-Charset': 'ISO-8859-1,utf-8;q=0.7,*;q=0.3',
    'Accept-Encoding': 'identity',  # urllib does not decompress gzip, so ask for an uncompressed body
    'Accept-Language': 'en-US,en;q=0.8',
    'Connection': 'keep-alive',
    'Referer': 'https://example.com',
    'Cookie': "your cookie value (copy it from your browser's developer tools)",
}

data = get_page_content(url, head).read()
print(data)

Output:

b'<!doctype html>\n<html>\n<head>\n    <title>Example Domain</title>\n\n    <meta ...
...
<p><a href="https://www.iana.org/domains/example">More information...</a></p>\n</div>\n</body>\n</html>\n'
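The `Cookie` entry above is pasted as one raw string, which works with urllib's `Request`. The requests library's `cookies=` parameter expects name/value pairs instead. The sketch below uses the standard library's `http.cookies.SimpleCookie` to split a copied Cookie header. The cookie names and values are placeholders, and `SimpleCookie` may silently drop cookies whose values it considers malformed, so check the result against what your browser shows.

```python
from http.cookies import SimpleCookie


def cookie_header_to_dict(raw: str) -> dict:
    """Split a Cookie header copied from the browser into name/value pairs (hypothetical helper)."""
    jar = SimpleCookie()
    jar.load(raw)
    return {name: morsel.value for name, morsel in jar.items()}


# Placeholder value in the format browsers show under Request Headers > Cookie
raw_cookie = "sessionid=abc123; theme=dark"
cookies = cookie_header_to_dict(raw_cookie)
print(cookies)

# With requests (network call left commented out):
# response = requests.get(url, headers={"User-Agent": "Mozilla/5.0"}, cookies=cookies)
```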


Use Session Object to Solve urllib HTTP Error 403 Forbidden Message

Sometimes, even using a user agent won’t stop this error from occurring. The Session object of the requests module can then be used.

import requests

url = "https://stackoverflow.com/search?q=html+error+403"
session_obj = requests.Session()
response = session_obj.get(url, headers={"User-Agent": "Mozilla/5.0"})
print(response.status_code)


This article identified the cause of urllib.error.HTTPError: HTTP Error 403: Forbidden and the solutions for handling it. The error is mostly caused by mod_security and similar mechanisms that web servers use to distinguish human visitors from automated clients (bots).

I am a bit of a Python Newbie, and I’ve just been trying to get some code working.

Below is the code, and also the nasty error I keep getting.

import pywapi
import string

google_result = pywapi.get_weather_from_google('Brisbane')
print google_result

def getCurrentWather():
    city = google_result['forecast_information']['city'].split(',')[0]
    print "It is " + string.lower(google_result['current_conditions']['condition']) + " and " + google_result['current_conditions']['temp_c'] + " degree centigrade now in "+ city+".\n\n"
    return "It is " + string.lower(google_result['current_conditions']['condition']) + " and " + google_result['current_conditions']['temp_c'] + " degree centigrade now in "+ city

def getDayOfWeek(dayOfWk):
    #dayOfWk = dayOfWk.encode('ascii', 'ignore')
    return dayOfWk.lower()

def getWeatherForecast():
    #need to translate from sun/mon to sunday/monday
    dayName = {'sun': 'Sunday', 'mon': 'Monday', 'tue': 'Tuesday', 'wed': 'Wednesday', ' thu': 'Thursday', 'fri': 'Friday', 'sat': 'Saturday', 'sun': 'Sunday'}
    forcastall = []
    for forecast in google_result['forecasts']:
        dayOfWeek = getDayOfWeek(forecast['day_of_week']);
        print " Highest is " + forecast['high'] + " and "+ "Lowest is " + forecast['low'] + " on " + dayName[dayOfWeek]
        forcastall.append(" Highest is " + forecast['high'] + " and "+ "Lowest is " + forecast['low'] + " on " + dayName[dayOfWeek])
    return forcastall

Now is the error:

Traceback (most recent call last):
  File "C:\Users\Alex\Desktop\JAVIS\JAISS-master\first.py", line 5, in <module>
    import Weather
  File "C:\Users\Alex\Desktop\JAVIS\JAISS-master\Weather.py", line 4, in <module>
    google_result = pywapi.get_weather_from_google('Brisbane')
  File "C:\Python27\lib\site-packages\pywapi.py", line 51, in get_weather_from_google
    handler = urllib2.urlopen(url)
  File "C:\Python27\lib\urllib2.py", line 126, in urlopen
    return _opener.open(url, data, timeout)
  File "C:\Python27\lib\urllib2.py", line 400, in open
    response = meth(req, response)
  File "C:\Python27\lib\urllib2.py", line 513, in http_response
    'http', request, response, code, msg, hdrs)
  File "C:\Python27\lib\urllib2.py", line 438, in error
    return self._call_chain(*args)
  File "C:\Python27\lib\urllib2.py", line 372, in _call_chain
    result = func(*args)
  File "C:\Python27\lib\urllib2.py", line 521, in http_error_default
    raise HTTPError(req.get_full_url(), code, msg, hdrs, fp)
HTTPError: HTTP Error 403: Forbidden

Thanks for any help I can get!
