I got the Error Code: 2013. Lost connection to MySQL server during query error when I tried to add an index to a table using MySQL Workbench.

I also noticed that it appears whenever I run a long query.

Is there a way to increase the timeout value?

asked May 12, 2012 at 12:14

user836026

New versions of MySQL Workbench have an option to change specific timeouts.

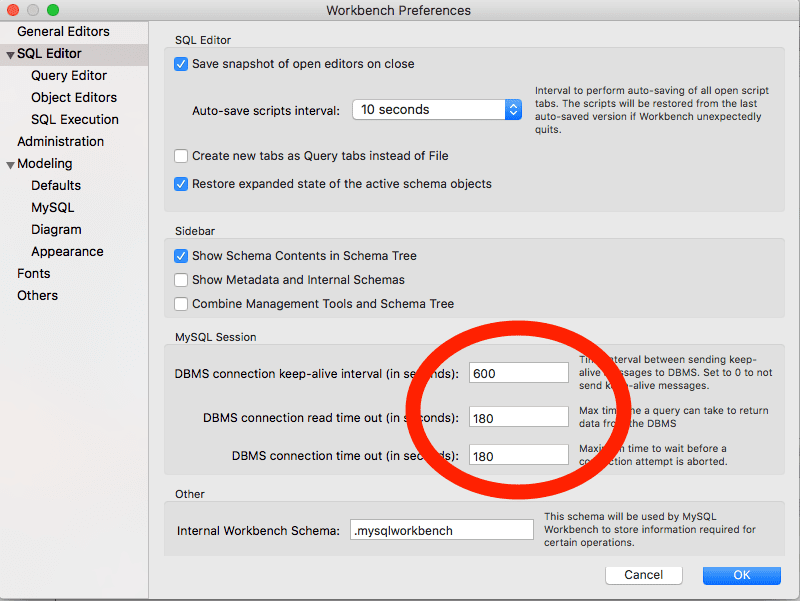

For me it was under Edit → Preferences → SQL Editor → DBMS connection read time out (in seconds): 600

I changed the value to 6000.

I also unchecked Limit Rows, since adding a limit every time I want to search the whole data set gets tiresome.

Marko

answered Oct 8, 2012 at 22:49

If your query has blob data, this issue can be fixed by applying a my.ini change as proposed in this answer:

[mysqld]

max_allowed_packet=16M

In older MySQL versions the default is 1M (4M since MySQL 5.6.6, 64M in MySQL 8.0); the allowed maximum value is 1024M. The value should be a multiple of 1024 bytes; a value that is not a multiple is rounded down to the nearest one.

While the referenced thread is about the MySQL error 2006, setting the max_allowed_packet from 1M to 16M did fix the 2013 error that showed up for me when running a long query.

For WAMP users: you’ll find the flag in the [wampmysqld] section.
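To confirm which value a config file actually sets, a short script can read the relevant section. This is only a minimal sketch using Python's standard configparser: the helper names and the sample text are illustrative assumptions, and it does not handle everything a real my.ini or my.cnf may contain (for example !include directives or the dashed spelling max-allowed-packet).

```python
import configparser

# Size suffixes MySQL accepts on numeric option values.
_UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3}


def parse_size(text):
    """Convert a value such as '16M' or '4194304' to a number of bytes."""
    text = text.strip().upper()
    if text and text[-1] in _UNITS:
        return int(text[:-1]) * _UNITS[text[-1]]
    return int(text)


def read_max_allowed_packet(ini_text, section="mysqld"):
    """Return max_allowed_packet in bytes, or None if the section does not set it."""
    # allow_no_value lets bare flags such as 'skip-name-resolve' parse;
    # strict=False tolerates repeated keys; interpolation=None keeps '%' literal.
    parser = configparser.ConfigParser(
        allow_no_value=True, strict=False, interpolation=None
    )
    parser.read_string(ini_text)
    if not parser.has_option(section, "max_allowed_packet"):
        return None
    return parse_size(parser.get(section, "max_allowed_packet"))


# Hypothetical file content, mirroring the fragment in this answer.
sample = """
[mysqld]
skip-name-resolve
max_allowed_packet=16M
"""
print(read_max_allowed_packet(sample))
```

For a WAMP install, the same helper could be called with section="wampmysqld".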

answered Jul 3, 2014 at 13:36

Start the DB server with the command-line option net_read_timeout or wait_timeout and a suitable value (in seconds), for example: --net_read_timeout=100.

For reference see here and here.

answered May 12, 2012 at 12:17

Yahia

SET @@local.net_read_timeout=360;

Warning: The following will not work when you apply it over a remote connection:

SET @@global.net_read_timeout=360;

Edit: 360 is the number of seconds
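From a script, the same session-level change can be issued through whatever cursor the MySQL driver provides. The sketch below is an assumption-laden illustration: set_session_timeouts and RecordingCursor are hypothetical names, and the recording cursor only stands in for a real DB-API cursor so the example runs without a server. SET SESSION is the same scope as @@local.

```python
def set_session_timeouts(cursor, read_seconds, write_seconds=None):
    """Raise net_read_timeout (and optionally net_write_timeout) for this session only."""
    # int() guards against anything but a whole number ending up in the SQL text.
    statements = [f"SET SESSION net_read_timeout = {int(read_seconds)}"]
    if write_seconds is not None:
        statements.append(f"SET SESSION net_write_timeout = {int(write_seconds)}")
    for statement in statements:
        cursor.execute(statement)
    return statements


class RecordingCursor:
    """Stand-in for a real DB-API cursor; it only records what it is asked to run."""

    def __init__(self):
        self.executed = []

    def execute(self, statement):
        self.executed.append(statement)


cursor = RecordingCursor()
set_session_timeouts(cursor, 360)
print(cursor.executed)
```

With a real driver, the cursor would come from the open connection, and the long-running query would be executed on that same connection afterwards.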

Vince V.

answered Apr 20, 2015 at 3:58

user1313024

Add the following into /etc/mysql/my.cnf file:

innodb_buffer_pool_size = 64M

example:

key_buffer = 16M

max_allowed_packet = 16M

thread_stack = 192K

thread_cache_size = 8

innodb_buffer_pool_size = 64M

Umair Ayub

answered Apr 17, 2015 at 15:11

MysqlMan

In my case, setting the connection timeout interval to 6000 or something higher didn’t work.

I just did what Workbench itself says I can do:

"The maximum amount of time the query can take to return data from the DBMS. Set 0 to skip the read timeout."

On Mac

Preferences -> SQL Editor -> Go to MySQL Session -> set connection read timeout interval to 0.

And it works 😄

answered Nov 26, 2019 at 3:55

Thet Htun

There are three likely causes for this error message:

- Usually it indicates network connectivity trouble, and you should check the condition of your network if this error occurs frequently.

- Sometimes the “during query” form happens when millions of rows are being sent as part of one or more queries.

- More rarely, it can happen when the client is attempting the initial connection to the server.

For cause 2, increase net_read_timeout from its default of 30 seconds to 60 seconds or longer:

SET GLOBAL net_read_timeout=60;

For cause 3, increase connect_timeout:

SET GLOBAL connect_timeout=60;

answered Dec 8, 2016 at 6:30

Nanhe Kumar

You should set the ‘interactive_timeout’ and ‘wait_timeout’ properties in the mysql config file to the values you need.

answered May 12, 2012 at 12:19

Maksym Polshcha

As far as I understand, this error is caused by the read timeout and by max_allowed_packet, whose default is 4M. If your query file is larger than 4 MB, you get an error. This worked for me:

1. Change the read timeout under Workbench Edit → Preferences → SQL Editor.

2. Change max_allowed_packet manually by editing my.ini, located at "C:\ProgramData\MySQL\MySQL Server 8.0\my.ini". The ProgramData folder is hidden, so if you do not see it, enable "show hidden files" in the view settings. Set max_allowed_packet = 16M in my.ini.

3. Restart MySQL: press Win+R, run services.msc, and restart the MySQL service.

answered Mar 24, 2022 at 6:15

Avinash

If you experience this problem during the restore of a big dump file and can rule out the network as the cause (e.g. execution on localhost), then my solution could be helpful.

My mysqldump output held at least one INSERT that was too big for MySQL to process. The relevant limit is net_buffer_length; you can view it by typing show variables like "net_buffer_length"; inside your mysql CLI.

You have three possibilities:

- Increase net_buffer_length inside MySQL -> this would need a server restart.

- Create the dump with --skip-extended-insert, so one line is used per insert -> although these dumps are much nicer to read, this is not suitable for big dumps > 1GB because it tends to be very slow.

- Create the dump with extended inserts (which is the default) but limit the net-buffer_length, e.g. with --net-buffer_length NR_OF_BYTES, where NR_OF_BYTES is smaller than the server’s net_buffer_length -> I think this is the best solution; although slower, no server restart is needed.

I used the following mysqldump command:

mysqldump --skip-comments --set-charset --default-character-set=utf8 --single-transaction --net-buffer_length 4096 DBX > dumpfile
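If the dump is produced from a script, the same options can be assembled as an argument list. The following is a rough sketch, not a tested backup tool: build_mysqldump_command is a hypothetical helper, it uses the dashed spelling --net-buffer-length, and it only builds and prints the command without running it.

```python
import shlex


def build_mysqldump_command(database, net_buffer_length=4096, extended_insert=True):
    """Return the mysqldump argument list; nothing is executed here."""
    args = [
        "mysqldump",
        "--skip-comments",
        "--set-charset",
        "--default-character-set=utf8",
        "--single-transaction",
        # Keep each generated INSERT below the server's net_buffer_length.
        f"--net-buffer-length={int(net_buffer_length)}",
    ]
    if not extended_insert:
        # One INSERT per row: easier to read, but slow for large dumps.
        args.append("--skip-extended-insert")
    args.append(database)
    return args


command = build_mysqldump_command("DBX")
print(shlex.join(command))
```

To actually create the dump, the list could be passed to subprocess.run with stdout redirected to the dump file; credentials are deliberately left out of this sketch.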

answered Jan 8, 2016 at 11:07

Matt V

Just perform a MySQL upgrade, which rebuilds the InnoDB engine along with many tables required for MySQL to function properly, such as performance_schema, information_schema, etc.

Issue the command below from your shell:

sudo mysql_upgrade -u root -p

Jamal

answered May 19, 2014 at 20:16

Shoaib Khan

Sometimes your SQL server gets into deadlocks; I’ve run into this problem around 100 times. You can either restart your computer/laptop to restart the server (the easy way), or go to Task Manager > Services > YOUR-SERVER-NAME (for me it was something like MySQL785) and right-click > Restart.

Try executing the query again.

answered Feb 10, 2021 at 13:28

I know it’s old, but on Mac:

1. Control-click your connection and choose Connection Properties.

2. Under the Advanced tab, set the Socket Timeout (sec) to a larger value.

answered Mar 27, 2015 at 6:53

Aamir Mahmood

Change «read time out» time in Edit->Preferences->SQL editor->MySQL session

answered Apr 21, 2016 at 9:25

Please try unchecking Limit Rows in Edit → Preferences → SQL Queries.

You should also set the ‘interactive_timeout’ and ‘wait_timeout’ properties in the mysql config file to the values you need.

answered Jul 24, 2014 at 9:59

user2586714

I got the same issue when loading a .csv file.

I converted the file to .sql.

Using the command below, I managed to work around this issue.

mysql -u <user> -p -D <DB name> < file.sql

Hope this would help.

answered Sep 8, 2016 at 6:19

VinRocka

Go to Workbench Edit → Preferences → SQL Editor → DBMS connection read time out and raise it to 3000.

The error no longer occurred.

answered Sep 1, 2018 at 2:50

I faced this same issue. I believe it happens when you have foreign keys to larger tables (which takes time).

I tried to run the create table statement again without the foreign key declarations and found it worked.

Then, after creating the table, I added the foreign key constraints using an ALTER TABLE query.

Hope this will help someone.

answered Dec 23, 2016 at 7:22

Nimeshka Srimal

This happened to me because my innodb_buffer_pool_size was set to be larger than the RAM size available on the server. Things were getting interrupted because of this and it issues this error. The fix is to update my.cnf with the correct setting for innodb_buffer_pool_size.

answered Feb 26, 2017 at 15:35

Go to:

Edit -> Preferences -> SQL Editor

In there you can see three fields in the «MySQL Session» group, where you can now set the new connection intervals (in seconds).

answered May 5, 2017 at 13:23

It turned out our firewall rule was blocking my connection to MySQL. After the firewall policy was changed to allow the connection, I was able to import the schema successfully.

answered May 11, 2017 at 15:38

I had the same problem, but for me the cause was a DB user with too strict permissions.

I had to allow the Execute ability on the mysql table. After allowing that, I had no more dropped connections.

answered Aug 31, 2017 at 17:35

naabster

Check if the indexes are in place first.

SELECT *

FROM INFORMATION_SCHEMA.STATISTICS

WHERE TABLE_SCHEMA = '<schema>'

answered Sep 22, 2017 at 3:58

Gayan Dasanayake

I ran into this while running a stored procedure that was inserting lots of rows into a table in the database.

I could see the error come right after the time crossed the 30-second boundary.

I tried all the suggestions in the other answers. I am sure some of them helped; however, what really made it work for me was switching from Workbench to Sequel Pro.

I am guessing it was some client-side connection setting that I could not spot in Workbench.

Maybe this will help someone else as well.

answered Dec 19, 2017 at 21:19

RN.

If you are using MySQL Workbench, you can try adding an index to your tables. To add an index, click the wrench (spanner) symbol on the table, which opens the table's setup. Below, click the index view, type an index name, and set the type to index. In the index columns, select the primary column in your table.

Repeat the same steps for the primary keys of your other tables.

answered Jun 25, 2018 at 8:21

Matthew E

There seems to be an answer missing here for those using SSH to connect to their MySQL database. You need to check two places, not one as suggested by other answers:

Workbench Edit → Preferences → SQL Editor → DBMS

Workbench Edit → Preferences → SSH → Timeouts

My default SSH timeouts were set very low and were causing some (but apparently not all) of my timeout issues. Afterwards, don’t forget to restart MySQL Workbench!

Last, it may be worth contacting your DB admin and asking them to increase the wait_timeout and interactive_timeout properties in MySQL itself, either via my.cnf plus a MySQL restart or with a global SET if restarting MySQL is not an option.

Hope this helps!

answered May 6, 2019 at 17:36

Three things to check:

- Do multiple queries show the lost connection?

- How do you use SET queries in MySQL?

- How do you run DELETE and UPDATE queries together?

Answers:

- Always try to remove the definer, as MySQL creates its own definer. If multiple tables are involved in an update, try to make it a single query, as multiple queries sometimes show the lost connection.

- Always SET values at the top, but after the DELETE if its condition doesn’t involve the SET value.

- Use DELETE first, then UPDATE, if the two operations are performed on different tables.

RalfFriedl

answered Sep 22, 2019 at 16:10

I had this error message due to a problem after upgrading MySQL. The error appeared immediately whenever I tried to run any query.

Check the MySQL error log files in /var/log/mysql (Linux).

In my case, reassigning ownership of the MySQL system folder to the mysql user worked:

chown -R mysql:mysql /var/lib/mysql

answered Jan 23, 2021 at 19:29

Establish the connection first:

mysql --host=host.com --port=3306 -u username -p

Then select your database:

use dbname

Then source the dump:

source C:\dumpfile.sql

When it’s done, quit with \q.

answered Oct 29, 2021 at 5:32

November 4, 2017 | Posted in SQL

This is kind of a silly and duplicative post, but I spent too much time searching for the right answer, so maybe this will help the right course of action bubble to the top faster in the future.

The Issue

I was trying to run a query on my local SQL install (whatever MAMP manages and provisions) using MySQL Workbench 6.3 for Mac but kept getting a timeout error.

The query itself wasn’t overly complex, but I was using aggregate functions, group by, and a join to consolidate a dataset. I’m working with distance education reporting data for all U.S. colleges and universities from 2012-2015, so this join involved a 7K row table and another with 25K rows, so not inconsequential but also not BIG data level.

SELECT STABBR as State, EFDELEV as Level , SUM(EFDETOT) as Total_Distance, SUM(EFDEEXC) as Exclusive_Distance, SUM(EFDESOM) as Some_Distance, SUM(EFDENON) as None_Distance FROM hd2012 LEFT JOIN ef2012a_dist_rv ON hd2012.UNITID = ef2012a_dist_rv.UNITID GROUP BY State, Level;

I did some initial googling on the error code, but it is a pretty general error code, so it was difficult to be sure whether this was a limitation of SQL or the Workbench DBMS. I read a few posts that suggested manipulating some of the .conf files for the underlying MySQL install, and I went too long down this road before trying something in Workbench itself.

It turns out there are timeout settings for the DBMS that you can extend to make sure that it waits a sufficient amount of time for your query to return data. Thanks go to this specific answer on Stack Overflow; the “how-to” description it links to is no longer valid, hence this blog post.

The Fix

There is a quick setting in Preferences that helped me. As you might expect, the DBMS has settings to manage its connection to the SQL server. In my case, those were just too short for my long running queries.

I changed the 30 second defaults to 180, and returned the data I needed. However, I’d imagine that some things would call for a much higher timeout, especially if you wanted to do a lot of transactions.

Another Fix

As of 08/27/2018, I did some additional noodling around with the queries that produced this slow result and realized some simple indexing reduced the query time from ~50 seconds to .227 seconds. You can find a more detailed post about that here.
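To illustrate why an index on the lookup or join column matters, here is a small self-contained sketch. It makes several assumptions: it uses Python's built-in SQLite rather than MySQL, the table ef is a cut-down stand-in for the real dataset, and the index name is made up. The point is only that the query plan changes from a full scan to an index search; in MySQL the analogous check would be EXPLAIN.

```python
import sqlite3


def query_plan(conn, sql):
    """Return the EXPLAIN QUERY PLAN detail text for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # The human-readable description is the fourth column of each row.
    return " | ".join(row[3] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ef (UNITID INTEGER, EFDETOT INTEGER)")
conn.executemany("INSERT INTO ef VALUES (?, ?)", [(i, i * 10) for i in range(1000)])

lookup = "SELECT EFDETOT FROM ef WHERE UNITID = 500"

plan_before = query_plan(conn, lookup)  # no index yet: every row is scanned
conn.execute("CREATE INDEX idx_ef_unitid ON ef (UNITID)")
plan_after = query_plan(conn, lookup)   # the planner can now use the index

print(plan_before)
print(plan_after)
```

The exact wording of the plan text varies between SQLite versions, so the script prints it rather than relying on a fixed string.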

If you are looking for a way to stop the timeout error, now you have two options. I now realize that most of my issue had nothing to do with MySQL Workbench and everything to do with the way I constructed the underlying database :) Still, options are always good, so good luck!

If you spend time running lots of MySQL queries, you might come across the Error Code: 2013. Lost connection to MySQL server during query. This article offers some suggestions on how to avoid or fix the problem.

Why this happens

This error appears when the connection between your MySQL client and database server times out. Essentially, it took too long for the query to return data so the connection gets dropped.

Most of my work involves content migrations. These projects usually involve running complex MySQL queries that take a long time to complete. I’ve found the WordPress wp_postmeta table especially troublesome because a site with tens of thousands of posts can easily have several hundred thousand postmeta entries. Joins of large datasets from these types of tables can be especially intensive.

Avoid the problem by refining your queries

In many cases, you can avoid the problem entirely by refining your SQL queries. For example, instead of joining all the contents of two very large tables, try filtering out the records you don’t need. Where possible, try reducing the number of joins in a single query. This should have the added benefit of making your query easier to read. For my purposes, I’ve found that denormalizing content into working tables can improve the read performance. This avoids time-outs.

Rewriting the queries isn’t always an option, so you can try the following server-side and client-side workarounds.

Server-side solution

If you’re an administrator for your MySQL server, try changing some values. The MySQL documentation suggests increasing the net_read_timeout or connect_timeout values on the server.

Client-side solution

You can increase your MySQL client’s timeout values if you don’t have administrator access to the MySQL server.

MySQL Workbench

You can edit the SQL Editor preferences in MySQL Workbench:

- In the application menu, select Edit > Preferences > SQL Editor.

- Look for the MySQL Session section and increase the DBMS connection read time out value.

- Save the settings, quit MySQL Workbench and reopen the connection.

Navicat

How to edit Navicat preferences:

- Control-click on a connection item and select Connection Properties > Edit Connection.

- Select the Advanced tab and increase the Socket Timeout value.

Command line

On the command line, use the connect_timeout variable, for example by starting the client with mysql --connect-timeout=60.

Python script

If you’re running a query from a Python script, use the connection argument:

con.query('SET GLOBAL connect_timeout=6000')
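Some Python drivers also accept client-side timeouts when the connection is opened, which needs no administrator rights on the server. The sketch below assumes the PyMySQL driver, whose connect() takes connect_timeout, read_timeout and write_timeout; the helper name, host, user and password are placeholders, and the snippet only builds the arguments so that it runs without a database.

```python
def connection_settings(host, user, password, database, query_timeout=600):
    """Build keyword arguments for pymysql.connect() with generous client timeouts."""
    return {
        "host": host,
        "user": user,
        "password": password,
        "database": database,
        "connect_timeout": 10,           # seconds allowed for the initial connection
        "read_timeout": query_timeout,   # seconds to wait for the server's reply
        "write_timeout": query_timeout,  # seconds allowed when sending data
    }


# Placeholder credentials, for illustration only.
settings = connection_settings("localhost", "report_user", "secret", "reports")
print(settings["read_timeout"])

# With PyMySQL installed and a reachable server, this would be used as:
#   import pymysql
#   con = pymysql.connect(**settings)
```

Other drivers name these options differently, so check the documentation of the one you use.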

I have a table called products2 with about 830,000 rows in it. When it had about 500,000 rows, queries ran slowly but fine. Now, when I try to query something, it returns almost nothing in MySQL Workbench and shows the following error:

> Error Code: 2013. Lost connection to MySQL server during query 44.515 sec

I thought it had something to do with my Workbench connection to my database on the GoDaddy host, but the same thing happens even if I go straight to the online phpMyAdmin tool. I have ProductID as primary key, unique index, not null and auto increment. The full table structure is below:

- ProductID int(11) AI PK

- SupplierPartNum varchar(100)

- OemPartNum varchar(100)

- UPC varchar(45)

- CostPrice decimal(10,2)

- CompatibleWith text

- ProductName varchar(500)

- Brand varchar(45)

- Manufacturer varchar(45)

- Supplier int(11)

- Category varchar(45)

- SubCategory varchar(100)

- Notes text

- Notes2 varchar(500)

- Quantity int(11)

- PageYield varchar(45)

- Condition varchar(45)

- Color varchar(45)

- Description varchar(21844)

- IsTruckShip varchar(45)

- UnitOfMeasure varchar(45)

- MinQty int(11)

- Width decimal(8,2)

- Length decimal(8,2)

- Height decimal(8,2)

- Weight decimal(8,2)

- Returnable varchar(5)

- Warranty varchar(100)

- SubCategory2 varchar(100)

- ImageUrl1 varchar(150)

Queries failed (pretty much any query now fails):

select * from Products2 where Supplier = 15 and Description is not null and ImageUrl1 is not null and flag2 = 1;

select * from Products2 where Supplier = 10;

I did some research and increased the timeout, but it doesn’t help. I have worked with Microsoft SQL Server in Management Studio before and dealt with millions of rows there; it was fast and easy. I just don’t get why it doesn’t work in MySQL; I am a newbie. I don’t think this problem can be solved if my database is on localhost. The internet should be fast enough.

The server is hosted at GoDaddy, as I have a hosting plan with them. I don’t see any options on their website to set any configuration for the server.

Please help me. Thanks.