- sklearn.metrics.confusion_matrix(y_true, y_pred, *, labels=None, sample_weight=None, normalize=None)[source]¶

-

Compute confusion matrix to evaluate the accuracy of a classification.

By definition a confusion matrix \(C\) is such that \(C_{i, j}\)

is equal to the number of observations known to be in group \(i\) and

predicted to be in group \(j\).Thus in binary classification, the count of true negatives is

\(C_{0,0}\), false negatives is \(C_{1,0}\), true positives is

\(C_{1,1}\) and false positives is \(C_{0,1}\).Read more in the User Guide.

- Parameters:

-

- y_truearray-like of shape (n_samples,)

-

Ground truth (correct) target values.

- y_predarray-like of shape (n_samples,)

-

Estimated targets as returned by a classifier.

- labelsarray-like of shape (n_classes), default=None

-

List of labels to index the matrix. This may be used to reorder

or select a subset of labels.

IfNoneis given, those that appear at least once

iny_trueory_predare used in sorted order. - sample_weightarray-like of shape (n_samples,), default=None

-

Sample weights.

New in version 0.18.

- normalize{‘true’, ‘pred’, ‘all’}, default=None

-

Normalizes confusion matrix over the true (rows), predicted (columns)

conditions or all the population. If None, confusion matrix will not be

normalized.

- Returns:

-

- Cndarray of shape (n_classes, n_classes)

-

Confusion matrix whose i-th row and j-th

column entry indicates the number of

samples with true label being i-th class

and predicted label being j-th class.

References

Examples

>>> from sklearn.metrics import confusion_matrix >>> y_true = [2, 0, 2, 2, 0, 1] >>> y_pred = [0, 0, 2, 2, 0, 2] >>> confusion_matrix(y_true, y_pred) array([[2, 0, 0], [0, 0, 1], [1, 0, 2]])

>>> y_true = ["cat", "ant", "cat", "cat", "ant", "bird"] >>> y_pred = ["ant", "ant", "cat", "cat", "ant", "cat"] >>> confusion_matrix(y_true, y_pred, labels=["ant", "bird", "cat"]) array([[2, 0, 0], [0, 0, 1], [1, 0, 2]])

In the binary case, we can extract true positives, etc. as follows:

>>> tn, fp, fn, tp = confusion_matrix([0, 1, 0, 1], [1, 1, 1, 0]).ravel() >>> (tn, fp, fn, tp) (0, 2, 1, 1)

Examples using sklearn.metrics.confusion_matrix¶

Evaluating the performance of classification models is crucial in machine learning, as it helps us understand how well our models are making predictions. One of the most effective ways to do this is by using a confusion matrix, a simple yet powerful tool that provides insights into the types of errors a model makes. In this tutorial, we will dive into the world of confusion matrices, exploring their components, the differences between binary and multi-class matrices, and how to interpret them.

By the end of this tutorial, you’ll have learned the following:

- What confusion matrices are and how to interpret them

- How to create them using Sklearn’s powerful functions

- How to create common confusion matrix metrics, such as accuracy and recall, using sklearn

- How to visualize a confusion matrix using Sklearn and Seaborn

Table of Contents

What You’ll Learn About a Confusion Matrix in Python

What is a Confusion Matrix?

Understand what it is first

Read More

Creating a Confusion Matrix

Learn how to create a confusion matrix in Sklearn

Read More

Visualizing a Confusion Matrix

Visualize your confusion matrix using Seaborn

Read More

The Quick Answer: Use Sklearn’s confusion_matrix

To easily create a confusion matrix in Python, you can use Sklearn’s confusion_matrix function, which accepts the true and predicted values in a classification problem.

# Creating a Confusion Matrix in Python with sklearn

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import confusion_matrix

# Create a Model

data = load_breast_cancer()

X_train, X_test, y_train, y_test = train_test_split(

data.data, data.target, test_size=0.2)

model = LogisticRegression()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

# Create a Confusion Matrix

print(confusion_matrix(y_test, y_pred))

# Returns:

# [[37 3]

# [ 1 73]]Understanding a Confusion Matrix

A confusion matrix, also known as an error matrix, is a powerful tool used to evaluate the performance of classification models. The matrix is a tabular format that shows predicted values against their actual values.

This allows us to understand whether the model is performing well or not. Similarly, it allows you to identify where the model is making mistakes.

Definition and Explanation of a Confusion Matrix

A confusion matrix is a table that displays the number of correct and incorrect predictions made by a classification model. The table is presented in such a way that:

- The rows represent the instances of the actual class, and

- The columns represent the instances of the predicted class.

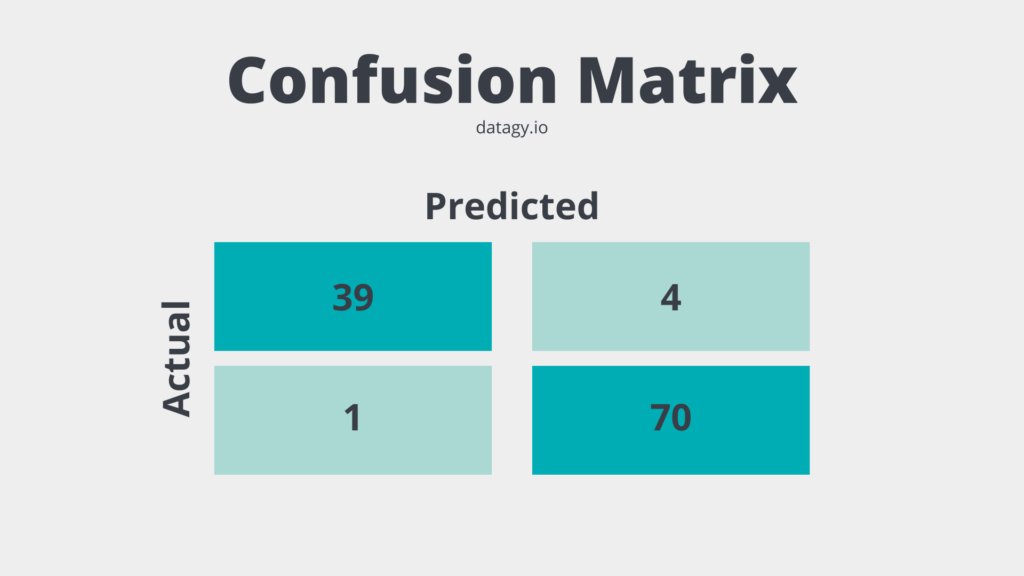

Take a look at the visualization below to see what a simple confusion matrix looks like:

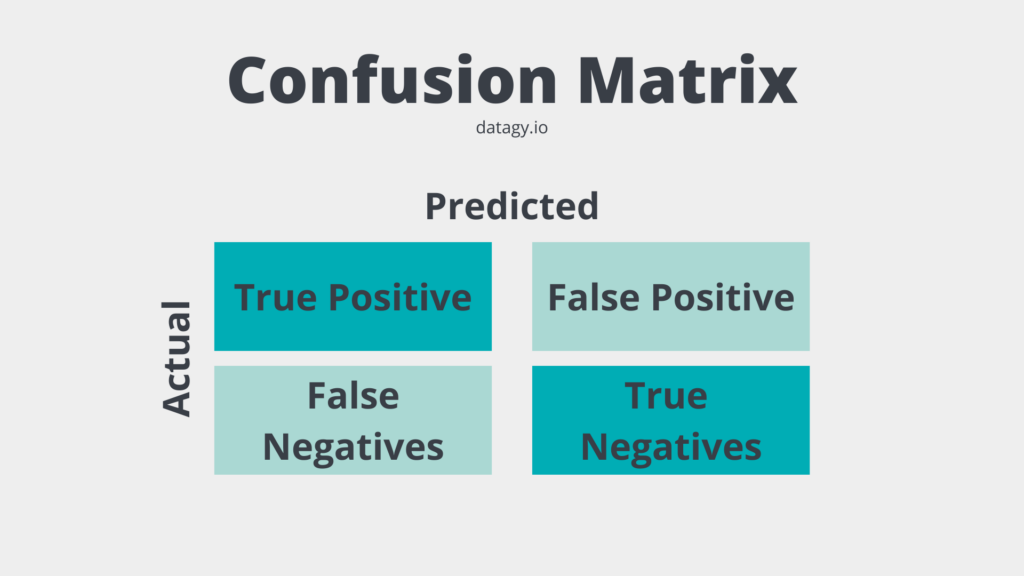

Let’s break down what these sections of a confusion matrix mean.

Components of a Confusion Matrix

Similar to the image above, a confusion matrix is made up of four main components:

- True Positives (TP): instances where the model correctly predicted the positive class.

- True Negatives (TN): instances where the model correctly predicted the negative class.

- False Positives (FP): instances where the model incorrectly predicted the positive class (also known as Type I error).

- False Negatives (FN): instances where the model incorrectly predicted the negative class (also known as Type II error).

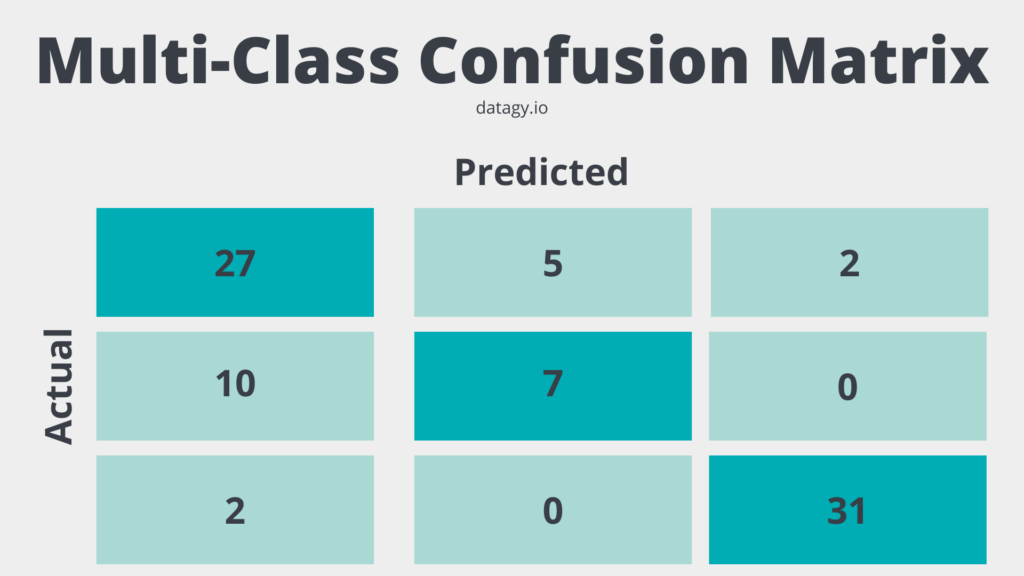

Understanding a Multi-Class Confusion Matrix

So far, we have discussed confusion matrices in the context of binary classification problems. This means that the model predicts something to either be one thing or not.

However, confusion matrices can also be used for multi-class classification problems, where there are more than two classes to predict. In this section, you’ll learn about the concept of multi-class confusion matrices and understand their components and differences from binary confusion matrices.

A multi-class confusion matrix builds on a simple, binary confusion matrix, designed to evaluate the performance of classification models with more than two classes. A multi-class confusion matrix is an n x n table, where n represents the number of classes in the problem.

Each row of the matrix corresponds to the instances of the actual class, and each column corresponds to the instances of the predicted class.

Components of a Multi-Class Confusion Matrix

A multi-class confusion matrix is different from a binary confusion matrix. Let’s explore how this is different:

- Diagonal elements: values along the diagonal represent the number of instances where the model correctly predicted the class. They are equivalent to True Positives (TP) in the binary case, but for each class.

- Off-diagonal elements: all values that aren’t on the diagonal represent the number of instances where the model incorrectly predicted the class. They correspond to False Positives (FP) and False Negatives (FN) in the binary case, but for each combination of classes.

In a multi-class confusion matrix, the sum of all diagonal elements gives the total number of correct predictions, and the sum of all off-diagonal elements gives the total number of incorrect predictions.

Differences and Similarities Between Binary and Multi-Class Confusion Matrices

While binary and multi-class confusion matrices serve the same purpose of evaluating classification models, there are some key differences and similarities between them:

- Structure: a binary confusion matrix is a 2 x 2 table, whereas a multi-class confusion matrix is a n x n table, where n is the number of classes.

- Components of a confusion matrix: Both binary and multi-class confusion matrices have diagonal elements representing correct predictions. Similarly, the off-diagonal elements represent incorrect predictions. However, in the multi-class case, there are multiple True Positives, False Positives, and False Negatives for each combination of classes.

Knowing how to work with both binary and multi-class confusion matrices will be essential in evaluating different types of machine learning models.

Importance of Using a Confusion Matrix for Classification Problems

A confusion matrix is useful for evaluating classification models by allowing you to understand the types of errors that a model is making. In particular, a classification matrix allows you to identify if a model is biased toward a particular class. Similarly, it allows you to better understand if a model is either too sensitive or too conservative.

How to Interpret a Confusion Matrix

Understanding the components of a confusion matrix is just the first step. In this section, you will learn how to interpret a confusion matrix. You’ll also learn how to calculate different performance metrics that can help us make informed decisions about your classification model.

Understanding the Components of a Confusion Matrix

As you learned earlier, a confusion matrix consists of four components: True Positives, True Negatives, False Positives, and False Negatives. To interpret a confusion matrix, we can examine these components and understand how they relate to the model’s performance.

Calculating Performance Metrics Using a Confusion Matrix

The values of a confusion matrix allow you to calculate a number of different performance metrics, including accuracy, precision, recall, and the F1 score. Let’s break these down a little bit more:

- Accuracy: The ratio of correct predictions (TP + TN) to the total number of predictions (TP + TN + FP + FN).

- Precision: The ratio of true positive predictions (TP) to the total number of positive predictions (TP + FP).

- Recall (Sensitivity): The ratio of true positive predictions (TP) to the total number of actual positive instances (TP + FN).

- F1 Score: The harmonic mean of precision and recall, which provides a balanced measure of the model’s performance.

Analyzing the Results and Making Informed Decisions

By calculating the performance metrics above, you’ll be able to better analyze how well your model is performing. By understanding the confusion matrix and the performance metrics, we can make informed decisions about our model, such as adjusting the classification threshold, balancing the dataset, or selecting a different algorithm to improve its performance.

For example, a model that shows high accuracy might indicate that the model is performing well. On the other hand, a model that has low precision or recall can indicate that a model may have issues in identifying classes correctly.

Creating a Confusion Matrix in Python

Now that you have learned how confusion matrices are valuable tools for evaluating classification problems in machine learning, let’s dive into how to create them using Python with sklearn. Sklearn is an invaluable tool for creating machine-learning models in Python.

Dataset Preparation and Model Training

For the purposes of this tutorial, we’ll be creating a confusion matrix using the sklearn breast cancer dataset, which identifies whether a tumor is malignant or benign. We won’t go through the model selection, creation, or prediction process in this tutorial. However, we’ll set up the baseline model so that we can create the confusion matrix.

# Loading a Binary Classification Model in Sklearn

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import confusion_matrix

data = load_breast_cancer()

X_train, X_test, y_train, y_test = train_test_split(data.data, data.target, test_size=0.2, random_state=42)

model = LogisticRegression()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)In the code block above, we imported a number of different functions and classes from Sklearn. In particular, we followed best practices by splitting our dataset into training and testing datasets using the train_test_split function.

Generating a Confusion Matrix Using Sklearn

Now that we have a model created, we can build our first confusion matrix. Let’s take a look at the function and see what parameters it offers. The sklearn.metrics.confusion_matrix is a function that computes a confusion matrix and has the following parameters:

y_true: true labels for the test data.y_pred: predicted labels for the test data.labels: optional, list of labels to index the matrix. This may be used to reorder or select a subset of labels. If None is given, all labels are used.sample_weight: optional, sample weights.normalize: If set to ‘true’, the rows of the confusion matrix are normalized so that they sum up to 1. If set to ‘pred’, the columns of the confusion matrix are normalized so that they sum up to 1. If set to ‘all’, all values in the confusion matrix are normalized so that they sum up to 1. If set to None, no normalization is performed (default).

The only required parameters are the y_true and y_pred parameters. We created these in our previous code block. Let’s see how we can create our first confusion matrix:

# Create a confusion matrix

print(confusion_matrix(y_test, y_pred))

# Returns:

# [[37 3]

# [ 1 73]]In this example, there were:

- 37 true positives (i.e., cases where the model correctly predicted that the patient had breast cancer),

- 3 false positives (i.e., cases where the model incorrectly predicted that the patient had breast cancer),

- 1 false negative (i.e., a case where the model incorrectly predicted that the patient did not have breast cancer), and

- 73 true negatives (i.e., cases where the model correctly predicted that the patient did not have breast cancer).

Let’s now take a look at how we can interpret the generated confusion matrix.

Interpreting the Generated Confusion Matrix

The way in which you interpret a confusion matrix is determined by how accurate your model needs to be. For example, in our example, we are predicting whether or not someone has cancer. In these cases, the accuracy of our model is incredibly important. Even infrequent misclassifications can have significant impacts.

On the other hand, working with datasets with less profound consequences, there may be a larger margin for error. In my experience, it’s important to focus on truly understand the sensitivity and importance of misclassifications.

We can use Sklearn to calculate the accuracy, precision, recall, and F1 scores to help interpret our confusion matrix. Let’s see how we can do this in Python using sklearn:

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

# Calculate the accuracy

accuracy = accuracy_score(y_test, y_pred)

# Calculate the precision

precision = precision_score(y_test, y_pred)

# Calculate the recall

recall = recall_score(y_test, y_pred)

# Calculate the f1 score

f1 = f1_score(y_test, y_pred)

# Print the results

print("Accuracy:", accuracy)

print("Precision:", precision)

print("Recall:", recall)

print("F1 Score:", f1)

# Returns:

# Accuracy: 0.956140350877193

# Precision: 0.9459459459459459

# Recall: 0.9859154929577465

# F1 Score: 0.9655172413793103Recall that these scores represent the following:

- Accuracy: The ratio of correct predictions (TP + TN) to the total number of predictions (TP + TN + FP + FN).

- Precision: The ratio of true positive predictions (TP) to the total number of positive predictions (TP + FP).

- Recall (Sensitivity): The ratio of true positive predictions (TP) to the total number of actual positive instances (TP + FN).

- F1 Score: The harmonic mean of precision and recall, which provides a balanced measure of the model’s performance.

We can simplify printing these values even further by using the sklearn classification_report function, which takes the true and predicted values as input:

# Using classification_report to Print Scores

from sklearn.metrics import classification_report

print(classification_report(y_test, y_pred))

# Returns:

# precision recall f1-score support

# 0 0.97 0.91 0.94 43

# 1 0.95 0.99 0.97 71

# accuracy 0.96 114

# macro avg 0.96 0.95 0.95 114

# weighted avg 0.96 0.96 0.96 114Finally, let’s take a look at how we can visualize the confusion matrix in Python, using Seaborn.

Visualizing a Confusion Matrix in Python

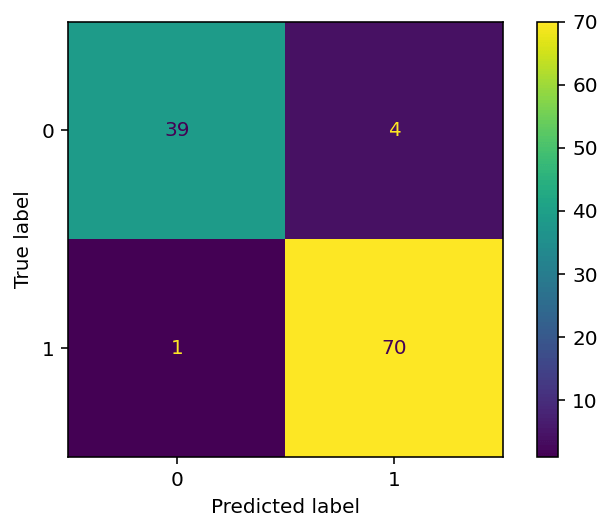

Sklearn provides a helpful class to help visualize a confusion matrix. While other tutorials will point you to the plot_confusion_matrix function, this function was recently deprecated. Because of this, it’s important to use the ConfusionMatrixDisplay class.

The ConfusionMatrixDisplay class lets you pass in a confusion matrix and the labels of your classes. You can then visualize the matrix by applying the .plot() method to your object. Take a look at what this looks like below:

# Plotting a Confusion Matrix with Sklearn

from sklearn.metrics import ConfusionMatrixDisplay

import matplotlib.pyplot as plt

conf_matrix = confusion_matrix(y_true=y_test, y_pred=y_pred)

vis = ConfusionMatrixDisplay(confusion_matrix=conf_matrix, display_labels=model.classes_)

vis.plot()

plt.show()In the code block above, we passed our confusion matrix into the ConfusionMatrixDisplay class constructor. We also included our display labels by accessing the classes. Finally, we applied the .plot() method and used the Matplotlib show() function to visualize the image below:

In the following section, you’ll learn how to plot a confusion matrix using Seaborn.

Using Seaborn to Plot a Confusion Matrix

Seaborn is a helpful Python data visualization library built on top of Matplotlib. Its mission is to make hard things easy, allowing you to create complex visualizations using a simple API.

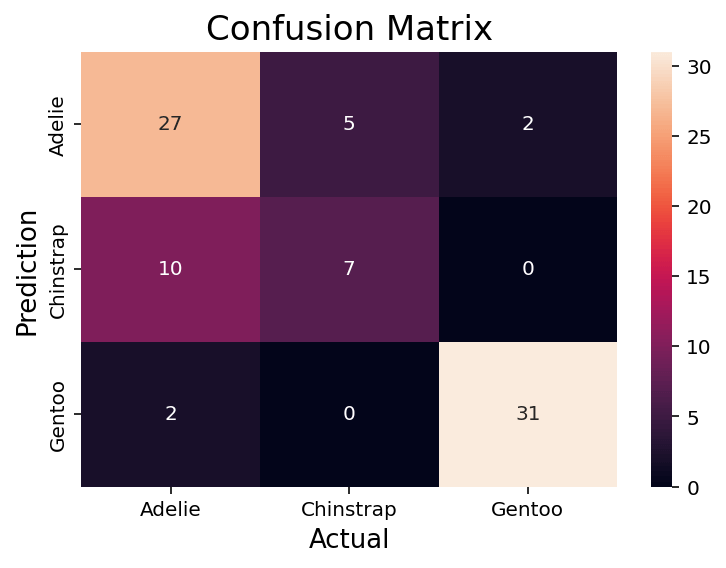

Plotting a confusion matrix is similar to plotting a heatmap in Seaborn, indicating where values are higher or lower visually. In order to do this, let’s plot a confusion matrix for another model, where we have more than a binary class.

If you’re unfamiliar with KNN in Python using Sklearn, you can follow along with the tutorial link here. That said, the end result of the code block is a model with three classes, rather than two:

# Creating a Model with 3 Classes

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import confusion_matrix

import seaborn as sns

df = sns.load_dataset('penguins')

df = df.dropna()

X = df.drop(columns = ['species', 'sex', 'island'])

y = df['species']

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state = 100)

clf = KNeighborsClassifier(p=1)

clf.fit(X_train, y_train)

predictions = clf.predict(X_test)In the code block above, we created a model that predicts three different classes. In order to plot the confusion matrix for this model, we can use the code below:

# Plotting a Confusion Matrix in Seaborn

conf_matrix = confusion_matrix(y_test, predictions, labels=clf.classes_)

sns.heatmap(conf_matrix,

annot=True,

fmt='g',

xticklabels=clf.classes_,

yticklabels=clf.classes_,

)

plt.ylabel('Prediction',fontsize=13)

plt.xlabel('Actual',fontsize=13)

plt.title('Confusion Matrix',fontsize=17)

plt.show()In the code block above, we used the heatmap function in Seaborn to plot our confusion matrix. We also modified the labels and titles using special functions.

This returned the following image:

We can see that this returns an image very similar to the Sklearn one. One benefit of this approach is how declarative and familiar it is. If you’re familiar with Seaborn or matplotlib, customizing the confusion matrix is quite simple.

Frequently Asked Questions

What is a confusion matrix in Python?

A confusion matrix in Python is a table that displays the number of correct and incorrect predictions made by a classification model. It helps in evaluating the performance of the model by comparing its predictions against the actual values. Python libraries like sklearn provide functions to create and visualize confusion matrices, making it easier to analyze and interpret the results.

What does a confusion matrix tell you?

A confusion matrix tells you how well a classification model is performing by showing the number of correct and incorrect predictions. It highlights the instances where the model correctly predicted the positive and negative classes (True Positives and True Negatives) and the instances where the model incorrectly predicted the positive and negative classes (False Positives and False Negatives). By analyzing the confusion matrix, you can identify the types of errors the model is making, and make informed decisions to improve its performance.

How can you use Sklearn confusion_matrix?

The sklearn library provides a function called confusion_matrix that can be used to create a confusion matrix for a classification model. To use it, you need to pass the true labels (y_true) and the predicted labels (y_pred) as arguments. The function returns a confusion matrix that can be printed or visualized using other libraries like matplotlib or Seaborn.

Can you use a confusion matrix for multi-class classification problems?

Yes, you can use a confusion matrix for multi-class classification problems. In the case of multi-class classification, the confusion matrix is an n x n table, where n represents the number of classes. Each row corresponds to the instances of the actual class, and each column corresponds to the instances of the predicted class. The diagonal elements represent correct predictions, while the off-diagonal elements represent incorrect predictions. The process of interpreting a multi-class confusion matrix is similar to that of a binary confusion matrix, with the main difference being the presence of multiple classes.

Conclusion

In this tutorial, we have explored the concept of confusion matrices and their importance in evaluating the performance of classification models. We’ve learned about the components of binary and multi-class confusion matrices, how to interpret them, and how to calculate various performance metrics such as accuracy, precision, recall, and F1 score. Additionally, we’ve demonstrated how to create and visualize confusion matrices in Python using sklearn and Seaborn.

As you continue to work on machine learning projects, understanding and utilizing confusion matrices will be an invaluable skill in assessing the performance of your classification models. By identifying the types of errors a model makes, you can make informed decisions to improve its performance, such as adjusting the classification threshold, balancing the dataset, or selecting a different algorithm. Keep practicing and experimenting with confusion matrices, and you’ll be well-equipped to tackle the challenges of evaluating classification models in your future projects.

To learn more about the Sklearn confusion_matrix function, check out the official documentation.

Матрица ошибок – это метрика производительности классифицирующей модели Машинного обучения (ML).

Когда мы получаем данные, то после очистки и предварительной обработки, первым делом передаем их в модель и, конечно же, получаем результат в виде вероятностей. Но как мы можем измерить эффективность нашей модели? Именно здесь матрица ошибок и оказывается в центре внимания.

Матрица ошибок – это показатель успешности классификации, где классов два или более. Это таблица с 4 различными комбинациями сочетаний прогнозируемых и фактических значений.

Давайте рассмотрим значения ячеек (истинно позитивные, ошибочно позитивные, ошибочно негативные, истинно негативные) с помощью «беременной» аналогии.

Истинно позитивное предсказание (True Positive, сокр. TP)

Вы предсказали положительный результат, и женщина действительно беременна.

Истинно отрицательное предсказание (True Negative, TN)

Вы предсказали отрицательный результат, и мужчина действительно не беременен.

Ошибочно положительное предсказание (ошибка типа I, False Positive, FN)

Вы предсказали положительный результат (мужчина беременен), но на самом деле это не так.

Ошибочно отрицательное предсказание (ошибка типа II, False Negative, FN)

Вы предсказали, что женщина не беременна, но на самом деле она беременна.

Давайте разберемся в матрице ошибок с помощью арифметики.

Пример. Мы располагаем датасетом пациентов, у которых диагностируют рак. Зная верный диагноз (столбец целевой переменной «Y на самом деле»), хотим усовершенствовать диагностику с помощью модели Машинного обучения. Модель получила тренировочные данные, и на тестовой части, состоящей из 7 записей (в реальных задачах, конечно, больше) и изображенной ниже, мы оцениваем, насколько хорошо прошло обучение.

Модель сделала свои предсказания для каждого пациента и записала вероятности от 0 до 1 в столбец «Предсказанный Y». Мы округляем эти числа, приводя их к нулю или единице, с помощью порога, равного 0,6 (ниже этого значения – ноль, пациент здоров). Результаты округления попадают в столбец «Предсказанная вероятность»: например, для первой записи модель указала 0,5, что соответствует нулю. В последнем столбце мы анализируем, угадала ли модель.

Теперь, используя простейшие формулы, мы рассчитаем Отзыв (Recall), точность результата измерений (Precision), точность измерений (Accuracy), и наконец поймем разницу между этими метриками.

Отзыв

Из всех положительных значений, которые мы предсказали правильно, сколько на самом деле положительных? Подсчитаем, сколько единиц в столбце «Y на самом деле» (4), это и есть сумма TP + FN. Теперь определим с помощью «Предсказанной вероятности», сколько из них диагностировано верно (2), это и будет TP.

$$Отзыв = \frac{TP}{TP + FN} = \frac{2}{2 + 2} = \frac{1}{2}$$

Точность результата измерений (Precision)

В этом уравнении из неизвестных только FP. Ошибочно диагностированных как больных здесь только одна запись.

$$Точность\spaceрезультата\spaceизмерений = \frac{TP}{TP + FP} = \frac{2}{2 + 1} = \frac{2}{3}$$

Точность измерений (Accuracy)

Последнее значение, которое предстоит экстраполировать из таблицы – TN. Правильно диагностированных моделью здоровых людей здесь 2.

$$Точность\spaceизмерений = \frac{TP + TN}{Всего\spaceзначений} = \frac{2 + 2}{7} = \frac{4}{7}$$

F-мера точности теста

Эти метрики полезны, когда помогают вычислить F-меру – конечный показатель эффективности модели.

$$F-мера = \frac{2 * Отзыв * Точность\spaceизмерений}{Отзыв + Точность\spaceизмерений} = \frac{2 * \frac{1}{2} * \frac{2}{3}}{\frac{1}{2} + \frac{2}{3}} = 0,56$$

Наша скромная модель угадывает лишь 56% процентов диагнозов, и такой результат, как правило, считают промежуточным и работают над улучшением точности модели.

SkLearn

С помощью замечательной библиотеки Scikit-learn мы можем мгновенно определить множество метрик, и матрица ошибок – не исключение.

from sklearn.metrics import confusion_matrix

y_true = [2, 0, 2, 2, 0, 1]

y_pred = [0, 0, 2, 2, 0, 2]

confusion_matrix(y_true, y_pred)Выводом будет ряд, состоящий из трех списков:

array([[2, 0, 0],

[0, 0, 1],

[1, 0, 2]])Значения диагонали сверху вниз слева направо [2, 0, 2] – это число верно предсказанных значений.

Фото: @opeleye

The confusion matrix is often used in machine learning to compute the accuracy of a classification algorithm.

It can be used in binary classifications as well as multi-class classification problems.

Confusion Matrix

A confusion matrix is a visual representation of the performance of a machine learning model. It summarizes the predicted and actual values of a classification model to identify misclassifications. The confusion matrix helps data scientists to fine-tune their models and improve their performance.

What the Confusion Matrix Measures?

It measures the quality of predictions from a classification model by looking athow many predictions are True and how many are False.

Specifically, it computes:

- True positives (TP)

- False positives (FP)

- True negatives (TN)

- False negatives (FN)

Understand the Confusion Matrix

Here, we will try to make sense of the true positive, true negative, false positive and false negative values mean.

True Positive

The model predicted true and it is true.

The model predicted that someone is sick and the person is sick.

True Negative

The model predicted false and it is false.

The model predicted that someone is not sick and the person is not sick.

False Positive

The model predicted True and it is false.

The model predicted that someone is sick and the person is not sick.

False Negative

The model predicted false and it is true.

The model predicted that someone is not sick and the person is sick.

How to Create a Confusion Matrix with Python in Scikit-learn?

In order to get a confusion matrix in scikit-learn:

- Run a classification algorithm

classifier.fit(X_train, y_train)

y_pred = classifier.predict(X_test) - Import metrics from the sklearn module

from sklearn.metrics import confusion_matrix

- Run the confusion matrix function on actual and predicted values

confusion_matrix(y_test, y_pred)

- Plot the confusion matrix

plot_confusion_matrix(classifier, X_test, y_test, cmap=plt.cm.Blues)

plt.show() - Inspect the classification report

print(classification_report(y_test, y_pred))

Run a Classification Algorithm in Python

In a previous article, we classified breast cancers using the k-nearest neighbors algorithm from scikit-learn.

I will not explain this part of the code, but you can look at the detail in the article on the k-nearest neighbors.

import pandas as pd

from sklearn.datasets import load_breast_cancer

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import train_test_split

def to_target(x):

"""Map targets to target names"""

return list(dataset.target_names)[x]

# Load data

dataset = load_breast_cancer()

df = pd.DataFrame(dataset.data,columns=dataset.feature_names)

df['target'] = pd.Series(dataset.target)

df['target_names'] = df['target'].apply(to_target)

# Define predictor and predicted datasets

X = df.drop(['target','target_names'], axis=1).values

y = df['target_names'].values

# split taining and test set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42, stratify=y)

# train the model

knn = KNeighborsClassifier(n_neighbors=8)

knn.fit(X_train, y_train)

y_pred = knn.predict(X_test)

# compute accuracy of the model

knn.score(X_test, y_test)

The result is an accuracy score of the model.

Create a Confusion Matrix in Python

Use the confusion_matrix method from sklearn.metrics to compute the confusion matrix.

from sklearn.metrics import confusion_matrix cm = confusion_matrix(y_test,y_pred) cm

The result is an array in which positions are the same as the quadrant we saw in the past.

array([[ 57, 7],

[ 5, 102]])

cm[0][0]= TPcm[1][1]= TNcm[0][1]= FPcm[1][0]= FN

Plot the Confusion Matrix in Scikit-Learn

You can use the plot_confusion_matrix method to visualize the confusion matrix.

import matplotlib.pyplot as plt

from sklearn.metrics import plot_confusion_matrix

color = 'white'

matrix = plot_confusion_matrix(knn, X_test, y_test, cmap=plt.cm.Blues)

matrix.ax_.set_title('Confusion Matrix', color=color)

plt.xlabel('Predicted Label', color=color)

plt.ylabel('True Label', color=color)

plt.gcf().axes[0].tick_params(colors=color)

plt.gcf().axes[1].tick_params(colors=color)

plt.show()

The result is your confusion matrix plot.

- Top left quadrant = True Positives = Number of benign labelled as benign

- Bottom right quadrant = True Negatives = Number of malignant labelled as malignant

- Top right quadrant = False Positives = Number of benign labelled as malignant

- Bottom left quadrant = False Negatives = Number of malignant labelled as benign

You may run into this error:

ImportError: cannot import name 'plot_confusion_matrix' from 'sklearn.metrics'

Or the following FutureWarning:

FutureWarning: Function plot_confusion_matrix is deprecated; Function `plot_confusion_matrix` is deprecated in 1.0 and will be removed in 1.2. Use one of the class methods: ConfusionMatrixDisplay.from_predictions or ConfusionMatrixDisplay.from_estimator. warnings.warn(msg, category=FutureWarning)

This is because plot_confusion_matrix was deprecated in some release.

The alternative is to use ConfusionMatrixDisplay.

import matplotlib.pyplot as plt from sklearn.metrics import ConfusionMatrixDisplay from sklearn.metrics import confusion_matrix cm = confusion_matrix(y_test, y_pred, labels=knn.classes_) color = 'white' disp = ConfusionMatrixDisplay(confusion_matrix=cm, display_labels=knn.classes_) disp.plot() plt.show()

Run the Classification Report in Python

With data from the confusion matrix, you can interpret the results by looking at the classification report.

from sklearn.metrics import classification_report print(classification_report(y_test, y_pred))

The report returns the metrics relevant to evaluating your classification model:

| Metric | What it is | Sklearn’s Metric Method |

|---|---|---|

| Accuracy | (true positive + true negative) / total predictions | metrics.precision_score(true, pred) |

| Precision | true positive / (true positive + false positive) | metrics.precision_score(true, pred) |

| Recall (sensitivity) | true positive / (true positive + false negative) | metrics.recall_score(true, pred) |

| F1-Score | 2 * (precision * recall) / (precision + recall) | metrics.f1_score(true, pred) |

| Specificity | true negative / (true negative + false positive) | metrics.recall_score(true, pred, pos_label=0) |

If you don’t understand the result above, make sure that you read the article that I wrote on the classification report.

Confusion Matrix for Multi-Class Classification

#Import the necessary libraries

from sklearn.datasets import load_wine

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import confusion_matrix

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.metrics import accuracy_score

# Load the wine dataset

X, y = load_wine(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y,test_size=0.25)

# Train the model

clf = RandomForestClassifier(random_state=23)

clf.fit(X_train, y_train)

# Predict using the test data

y_pred = clf.predict(X_test)

# Compute the confusion matrix

cm = confusion_matrix(y_test,y_pred)

# Plot the confusion matrix.

sns.heatmap(cm,

annot=True)

plt.ylabel('Prediction',fontsize=13)

plt.xlabel('Actual',fontsize=13)

plt.title('Confusion Matrix',fontsize=17)

plt.show()

# Calculate accuracy

accuracy = accuracy_score(y_test, y_pred)

print("Accuracy :", accuracy)

Confusion Matrix Definitions

| Confusion Matrix | Quality measurement of predictions |

| Scikit-learn | Machine learning package in Python |

| True positive | Model correctly predicts the positive class |

| False positive | Model incorrectly predicts the positive class |

| True negative | Model correctly predicts the negative class |

| False negative | Model incorrectly predicts the negative class |

Cheatsheet

| Install sklearn | pip install -U scikit-learn |

| Python library import | from sklearn.metrics import confusion_matrix |

| Plot confusion matrix | sklearn metrics plot_confusion_matrix |

| Classification report | sklearn metrics classification_report |

Confusion Matrix Parameters

Here are the parameters that can be used with the confusion_matrix() function in Scikit-learn.

confusion_matrix(y_true, y_pred, *, labels=None, sample_weight=None, normalize=None)- y_true: Ground truth (correct) target values.

array-like of shape (n_samples,) - y_pred: Estimated targets as returned by a classifier.

array-like of shape (n_samples,) - labels: List of labels to index the matrix. This may be used to reorder or select a subset of labels. If

Noneis given, those that appear at least once iny_trueory_predare used in sorted order.array-like of shape (n_classes), default=None - sample_weight: Sample weights.

array-like of shape (n_samples,), default=None - normalize : Normalizes confusion matrix over the true (rows), predicted (columns) conditions or all the population. If

None, confusion matrix will not be normalized.{'true', 'pred', 'all'}, default=None

Methods that Can Be used with the Confusion Matrix

| all() | a.all(axis=None, out=None, keepdims=False, *, where=True) |

| any() | a.any(axis=None, out=None, keepdims=False, *, where=True) |

| argmax() | a.argmax(axis=None, out=None, *, keepdims=False) |

| argmin() | a.argmin(axis=None, out=None, *, keepdims=False) |

| argpartition() | a.argpartition(kth, axis=-1, kind=’introselect’, order=None) |

| argsort() | a.argsort(axis=-1, kind=None, order=None) |

| astype() | a.astype(dtype, order=’K’, casting=’unsafe’, subok=True, copy=True) |

| byteswap() | a.byteswap(inplace=False) |

| choose() | a.choose(choices, out=None, mode=’raise’) |

| clip() | a.clip(min=None, max=None, out=None, **kwargs) |

| compress() | a.compress(condition, axis=None, out=None) |

| conj() | a.conj() |

| conjugate() | a.conjugate() |

| copy() | a.copy(order=’C’) |

| cumprod() | a.cumprod(axis=None, dtype=None, out=None) |

| cumsum() | a.cumsum(axis=None, dtype=None, out=None) |

| diagonal() | a.diagonal(offset=0, axis1=0, axis2=1) |

| dot() | |

| dump() | a.dump(file) |

| dumps() | a.dumps() |

| fill() | a.fill(value) |

| flatten() | a.flatten(order=’C’) |

| getfield() | a.getfield(dtype, offset=0) |

| item() | a.item(*args) |

| itemset() | a.itemset(*args) |

| max() | a.max(axis=None, out=None, keepdims=False, initial=<no value>, where=True) |

| mean() | a.mean(axis=None, dtype=None, out=None, keepdims=False, *, where=True) |

| min() | a.min(axis=None, out=None, keepdims=False, initial=<no value>, where=True) |

| newbyteorder() | arr.newbyteorder(new_order=’S’, /) |

| nonzero() | a.nonzero() |

| partition() | a.partition(kth, axis=-1, kind=’introselect’, order=None) |

| prod() | a.prod(axis=None, dtype=None, out=None, keepdims=False, initial=1, where=True) |

| ptp() | a.ptp(axis=None, out=None, keepdims=False) |

| put() | a.put(indices, values, mode=’raise’) |

| ravel() | a.ravel([order]) |

| repeat() | a.repeat(repeats, axis=None) |

| reshape() | a.reshape(shape, order=’C’) |

| resize() | a.resize(new_shape, refcheck=True) |

| round() | a.round(decimals=0, out=None) |

| searchsorted() | a.searchsorted(v, side=’left’, sorter=None) |

| setfield() | a.setfield(val, dtype, offset=0) |

| setflags() | a.setflags(write=None, align=None, uic=None) |

| sort() | a.sort(axis=-1, kind=None, order=None) |

| squeeze() | a.squeeze(axis=None) |

| std() | a.std(axis=None, dtype=None, out=None, ddof=0, keepdims=False, *, where=True) |

| sum() | a.sum(axis=None, dtype=None, out=None, keepdims=False, initial=0, where=True) |

| swapaxes() | a.swapaxes(axis1, axis2) |

| take() | a.take(indices, axis=None, out=None, mode=’raise’) |

| tobytes() | a.tobytes(order=’C’) |

| tofile() | a.tofile(fid, sep=””, format=”%s”) |

| tolist() | a.tolist() |

| tostring() | a.tostring(order=’C’) |

| trace() | a.trace(offset=0, axis1=0, axis2=1, dtype=None, out=None) |

| transpose() | a.transpose(*axes) |

| var() | a.var(axis=None, dtype=None, out=None, ddof=0, keepdims=False, *, where=True) |

| view() | a.view([dtype][, type]) |

Confusion Matrix FAQs

How do you get a confusion matrix in scikit-learn?

Run a classification algorithm, import the confusion matrix function from the sklearn.metrics module, run function on test and prediction and plot the matrix.

Why use confusion matrix?

use the confusion matrix to evaluate the performance of a machine learning classification algorithm.

Is confusion matrix better than accuracy?

The confusion matrix provides more insights into a model’s performance than classification accuracy as it shows the number of correctly and incorrectly classified instances.

Can confusion matrix be used on continuous values (e.g. linear regression)?

Confusion matrices shows the accuracy of the prediction of classes. When trying to predict a number output like in the case of the continuous output of a regression model, confusion matrix should not be used.

What is confusion matrix used for?

The confusion matrix is used to evaluate the accuracy of a machine learning model that tries to predict classes (e.g. Classification).

How do you create a confusion matrix in Python?

Use the confusion_matrix function from the sklearn.metrics module.

Is confusion matrix only for binary classification problems?

No. Confusion matrix can be used for binary classification as well as multi-class classification problems.

Conclusion

This article was quite big to grasp.

All I want you to leave with is that it is super important to look at the confusion matrix to help you fine-tune your machine learning models.

This can modify the accuracy score quite heavily in some cases.

Good work on building your first confusion matrix in Scikit-learn.

SEO Strategist at Tripadvisor, ex- Seek (Melbourne, Australia). Specialized in technical SEO. In a quest to programmatic SEO for large organizations through the use of Python, R and machine learning.

Visualizations play an essential role in the exploratory data analysis activity of machine learning.

You can plot confusion matrix using the confusion_matrix() method from sklearn.metrics package.

Why Confusion Matrix?

After creating a machine learning model, accuracy is a metric used to evaluate the machine learning model. On the other hand, you cannot use accuracy in every case as it’ll be misleading. Because the accuracy of 99% may look good as a percentage, but consider a machine learning model used for Fraud Detection or Drug consumption detection.

In such critical scenarios, the 1% percentage failure can create a significant impact.

For example, if a model predicted a fraud transaction of 10000$ as Not Fraud, then it is not a good model and cannot be used in production.

In the drug consumption model, consider if the model predicted that the person had consumed the drug but actually has not. But due to the False prediction of the model, the person may be imprisoned for a crime that is not committed actually.

In such scenarios, you need a better metric than accuracy to validate the machine learning model.

This is where the confusion matrix comes into the picture.

In this tutorial, you’ll learn what a confusion matrix is, how to plot confusion matrix for the binary classification model and the multivariate classification model.

Table of Contents

What is Confusion Matrix?

Confusion matrix is a matrix that allows you to visualize the performance of the classification machine learning models. With this visualization, you can get a better idea of how your machine learning model is performing.

Creating Binary Class Classification Model

In this section, you’ll create a classification model that will predict whether a patient has breast cancer or not, denoted by output classes True or False.

The breast cancer dataset is available in the sklearn dataset library.

It contains a total number of 569 data rows. Each row includes 30 numeric features and one output class. If you want to manipulate or visualize the sklearn dataset, you can convert it into pandas dataframe and play around with the pandas dataframe functionalities.

To create the model, you’ll load the sklearn dataset, split it into train and testing set and fit the train data into the KNeighborsClassifier model.

After creating the model, you can use the test data to predict the values and check how the model is performing.

You can use the actual output classes from your test data and the predicted output returned by the predict() method to plot the confusion matrix and evaluate the model accuracy.

Use the below snippet to create the model.

Snippet

import numpy as np

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier as KNN

breastCancer = load_breast_cancer()

X = breastCancer.data

y = breastCancer.target

# Split the dataset into train and test

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.4, random_state = 42)

knn = KNN(n_neighbors = 3)

# train the model

knn.fit(X_train, y_train)

print('Model is Created')The KNeighborsClassifier model is created for the breast cancer training data.

Output

Model is CreatedTo test the model created, you can use the test data obtained from the train test split and predict the output. Then, you’ll have the predicted values.

Snippet

y_pred = knn.predict(X_test)

y_predOutput

array([0, 0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 1, 0, 0, 1, 1, 0, 0, 1,

0, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 1,

1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0, 0, 1, 1, 1,

0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0,

1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 0, 1, 1,

0, 1, 1, 0, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0,

0, 1, 1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 1, 1,

1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1,

0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1,

0, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1,

0, 1, 0, 0, 1, 1, 0, 1])Now use the predicted classes and the actual output classes from the test data to visualize the confusion matrix.

You’ll learn how to plot the confusion matrix for the binary classification model in the next section.

Plot Confusion Matrix for Binary Classes

You can create the confusion matrix using the confusion_matrix() method from sklearn.metrics package. The confusion_matrix() method will give you an array that depicts the True Positives, False Positives, False Negatives, and True negatives.

** Snippet**

from sklearn.metrics import confusion_matrix

#Generate the confusion matrix

cf_matrix = confusion_matrix(y_test, y_pred)

print(cf_matrix)Output

[[ 73 7]

[ 7 141]]Once you have the confusion matrix created, you can use the heatmap() method available in the seaborn library to plot the confusion matrix.

Seaborn heatmap() method accepts one mandatory parameter and few other optional parameters.

data– A rectangular dataset that can be coerced into a 2d array. Here, you can pass the confusion matrix you already haveannot=True– To write the data value in the cell of the printed matrix. By default, this isFalse.cmap=Blues– This is to denote the matplotlib color map names. Here, we’ve created the plot using the blue color shades.

The heatmap() method returns the matplotlib axes that can be stored in a variable. Here, you’ll store in variable ax. Now, you can set title, x-axis and y-axis labels and tick labels for x-axis and y-axis.

- Title – Used to label the complete image. Use the set_title() method to set the title.

- Axes-labels – Used to name the

xaxis oryaxis. Use the set_xlabel() to set the x-axis label and set_ylabel() to set the y-axis label. - Tick labels – Used to denote the datapoints on the axes. You can pass the tick labels in an array, and it must be in ascending order. Because the confusion matrix contains the values in the ascending order format. Use the xaxis.set_ticklabels() to set the tick labels for x-axis and yaxis.set_ticklabels() to set the tick labels for y-axis.

Finally, use the plot.show() method to plot the confusion matrix.

Use the below snippet to create a confusion matrix, set title and labels for the axis, and set the tick labels, and plot it.

Snippet

import seaborn as sns

ax = sns.heatmap(cf_matrix, annot=True, cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Values')

ax.set_ylabel('Actual Values ');

## Ticket labels - List must be in alphabetical order

ax.xaxis.set_ticklabels(['False','True'])

ax.yaxis.set_ticklabels(['False','True'])

## Display the visualization of the Confusion Matrix.

plt.show()Output

Alternatively, you can also plot the confusion matrix using the ConfusionMatrixDisplay.from_predictions() method available in the sklearn library itself if you want to avoid using the seaborn.

Next, you’ll learn how to plot a confusion matrix with percentages.

Plot Confusion Matrix for Binary Classes With Percentage

The objective of creating and plotting the confusion matrix is to check the accuracy of the machine learning model. It’ll be good to visualize the accuracy with percentages rather than using just the number. In this section, you’ll learn how to plot a confusion matrix for binary classes with percentages.

To plot the confusion matrix with percentages, first, you need to calculate the percentage of True Positives, False Positives, False Negatives, and True negatives. You can calculate the percentage of these values by dividing the value by the sum of all values.

Using the np.sum() method, you can sum all values in the confusion matrix.

Then pass the percentage of each value as data to the heatmap() method by using the statement cf_matrix/np.sum(cf_matrix).

Use the below snippet to plot the confusion matrix with percentages.

Snippet

ax = sns.heatmap(cf_matrix/np.sum(cf_matrix), annot=True,

fmt='.2%', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Values')

ax.set_ylabel('Actual Values ');

## Ticket labels - List must be in alphabetical order

ax.xaxis.set_ticklabels(['False','True'])

ax.yaxis.set_ticklabels(['False','True'])

## Display the visualization of the Confusion Matrix.

plt.show()Output

Plot Confusion Matrix for Binary Classes With Labels

In this section, you’ll plot a confusion matrix for Binary classes with labels True Positives, False Positives, False Negatives, and True negatives.

You need to create a list of the labels and convert it into an array using the np.asarray() method with shape 2,2. Then, this array of labels must be passed to the attribute annot. This will plot the confusion matrix with the labels annotation.

Use the below snippet to plot the confusion matrix with labels.

Snippet

labels = ['True Neg','False Pos','False Neg','True Pos']

labels = np.asarray(labels).reshape(2,2)

ax = sns.heatmap(cf_matrix, annot=labels, fmt='', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Values')

ax.set_ylabel('Actual Values ');

## Ticket labels - List must be in alphabetical order

ax.xaxis.set_ticklabels(['False','True'])

ax.yaxis.set_ticklabels(['False','True'])

## Display the visualization of the Confusion Matrix.

plt.show()Output

Plot Confusion Matrix for Binary Classes With Labels And Percentages

In this section, you’ll learn how to plot a confusion matrix with labels, counts, and percentages.

You can use this to measure the percentage of each label. For example, how much percentage of the predictions are True Positives, False Positives, False Negatives, and True negatives

For this, first, you need to create a list of labels, then count each label in one list and measure the percentage of the labels in another list.

Then you can zip these different lists to create labels. Zipping means concatenating an item from each list and create one list. Then, this list must be converted into an array using the np.asarray() method.

Then pass the final array to annot attribute. This will create a confusion matrix with the label, count, and percentage information for each class.

Use the below snippet to visualize the confusion matrix with all the details.

Snippet

group_names = ['True Neg','False Pos','False Neg','True Pos']

group_counts = ["{0:0.0f}".format(value) for value in

cf_matrix.flatten()]

group_percentages = ["{0:.2%}".format(value) for value in

cf_matrix.flatten()/np.sum(cf_matrix)]

labels = [f"{v1}\n{v2}\n{v3}" for v1, v2, v3 in

zip(group_names,group_counts,group_percentages)]

labels = np.asarray(labels).reshape(2,2)

ax = sns.heatmap(cf_matrix, annot=labels, fmt='', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Values')

ax.set_ylabel('Actual Values ');

## Ticket labels - List must be in alphabetical order

ax.xaxis.set_ticklabels(['False','True'])

ax.yaxis.set_ticklabels(['False','True'])

## Display the visualization of the Confusion Matrix.

plt.show()Output

This is how you can create a confusion matrix for the binary classification machine learning model.

Next, you’ll learn about creating a confusion matrix for a classification model with multiple output classes.

Creating Classification Model For Multiple Classes

In this section, you’ll create a classification model for multiple output classes. In other words, it’s also called multivariate classes.

You’ll be using the iris dataset available in the sklearn dataset library.

It contains a total number of 150 data rows. Each row includes four numeric features and one output class. Output class can be any of one Iris flower type. Namely, Iris Setosa, Iris Versicolour, Iris Virginica.

To create the model, you’ll load the sklearn dataset, split it into train and testing set and fit the train data into the KNeighborsClassifier model.

After creating the model, you can use the test data to predict the values and check how the model is performing.

You can use the actual output classes from your test data and the predicted output returned by the predict() method to plot the confusion matrix and evaluate the model accuracy.

Use the below snippet to create the model.

Snippet

import numpy as np

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier as KNN

iris = load_iris()

X = iris.data

y = iris.target

# Split dataset into train and test

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.4, random_state = 42)

knn = KNN(n_neighbors = 3)

# train th model

knn.fit(X_train, y_train)

print('Model is Created')Output

Model is CreatedNow the model is created.

Use the test data from the train test split and predict the output value using the predict() method as shown below.

Snippet

y_pred = knn.predict(X_test)

y_predYou’ll have the predicted output as an array. The value 0, 1, 2 shows the predicted category of the test data.

Output

array([1, 0, 2, 1, 1, 0, 1, 2, 1, 1, 2, 0, 0, 0, 0, 1, 2, 1, 1, 2, 0, 2,

0, 2, 2, 2, 2, 2, 0, 0, 0, 0, 1, 0, 0, 2, 1, 0, 0, 0, 2, 1, 1, 0,

0, 1, 1, 2, 1, 2, 1, 2, 1, 0, 2, 1, 0, 0, 0, 1])Now, you can use the predicted data available in y_pred to create a confusion matrix for multiple classes.

Plot Confusion matrix for Multiple Classes

In this section, you’ll learn how to plot a confusion matrix for multiple classes.

You can use the confusion_matrix() method available in the sklearn library to create a confusion matrix. It’ll contain three rows and columns representing the actual flower category and the predicted flower category in ascending order.

Snippet

from sklearn.metrics import confusion_matrix

#Get the confusion matrix

cf_matrix = confusion_matrix(y_test, y_pred)

print(cf_matrix)Output

[[23 0 0]

[ 0 19 0]

[ 0 1 17]]The below output shows the confusion matrix for actual and predicted flower category counts.

You can use this matrix to plot the confusion matrix using the seaborn library, as shown below.

Snippet

import seaborn as sns

import matplotlib.pyplot as plt

ax = sns.heatmap(cf_matrix, annot=True, cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Flower Category')

ax.set_ylabel('Actual Flower Category ');

## Ticket labels - List must be in alphabetical order

ax.xaxis.set_ticklabels(['Setosa','Versicolor', 'Virginia'])

ax.yaxis.set_ticklabels(['Setosa','Versicolor', 'Virginia'])

## Display the visualization of the Confusion Matrix.

plt.show()Output

Plot Confusion Matrix for Multiple Classes With Percentage

In this section, you’ll plot the confusion matrix for multiple classes with the percentage of each output class. You can calculate the percentage by dividing the values in the confusion matrix by the sum of all values.

Use the below snippet to plot the confusion matrix for multiple classes with percentages.

Snippet

ax = sns.heatmap(cf_matrix/np.sum(cf_matrix), annot=True,

fmt='.2%', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Flower Category')

ax.set_ylabel('Actual Flower Category ');

## Ticket labels - List must be in alphabetical order

ax.xaxis.set_ticklabels(['Setosa','Versicolor', 'Virginia'])

ax.yaxis.set_ticklabels(['Setosa','Versicolor', 'Virginia'])

## Display the visualization of the Confusion Matrix.

plt.show()Output

Plot Confusion Matrix for Multiple Classes With Numbers And Percentages

In this section, you’ll learn how to plot a confusion matrix with labels, counts, and percentages for the multiple classes.

You can use this to measure the percentage of each label. For example, how much percentage of the predictions belong to each category of flowers.

For this, first, you need to create a list of labels, then count each label in one list and measure the percentage of the labels in another list.

Then you can zip these different lists to create concatenated labels. Zipping means concatenating an item from each list and create one list. Then, this list must be converted into an array using the np.asarray() method.

This final array must be passed to annot attribute. This will create a confusion matrix with the label, count, and percentage information for each category of flowers.

Use the below snippet to visualize the confusion matrix with all the details.

Snippet

#group_names = ['True Neg','False Pos','False Neg','True Pos','True Pos','True Pos','True Pos','True Pos','True Pos']

group_counts = ["{0:0.0f}".format(value) for value in

cf_matrix.flatten()]

group_percentages = ["{0:.2%}".format(value) for value in

cf_matrix.flatten()/np.sum(cf_matrix)]

labels = [f"{v1}\n{v2}\n" for v1, v2 in

zip(group_counts,group_percentages)]

labels = np.asarray(labels).reshape(3,3)

ax = sns.heatmap(cf_matrix, annot=labels, fmt='', cmap='Blues')

ax.set_title('Seaborn Confusion Matrix with labels\n\n');

ax.set_xlabel('\nPredicted Flower Category')

ax.set_ylabel('Actual Flower Category ');

## Ticket labels - List must be in alphabetical order

ax.xaxis.set_ticklabels(['Setosa','Versicolor', 'Virginia'])

ax.yaxis.set_ticklabels(['Setosa','Versicolor', 'Virginia'])

## Display the visualization of the Confusion Matrix.

plt.show()Output

This is how you can plot a confusion matrix for multiple classes with percentages and numbers.

Plot Confusion Matrix Without Classifier

To plot the confusion matrix without a classifier model, refer to this StackOverflow answer.

Conclusion

To summarize, you’ve learned how to plot a confusion matrix for the machine learning model with binary output classes and multiple output classes.

You’ve also learned how to annotate the confusion matrix with more details such as labels, count of each label, and percentage of each label for better visualization.

If you’ve any questions, comment below.

You May Also Like

- How to Save and Load Machine Learning Models in python

- How to Plot Correlation Matrix in Python