As others have pointed out, cron will email you the output of any program it runs (if there is any output). So, if you don’t get any output, there are basically three possibilities:

1. crond could not even start a shell for running the program or sending email;
2. crond had trouble mailing the output, or the mail was lost;
3. the program did not produce any output (including error messages).

Case 1 is very unlikely, but something should have been written to the cron logs. Cron has its own reserved syslog facility, so you should look in /etc/syslog.conf (or the equivalent file on your distro) to see where messages of facility cron are sent. Popular destinations include /var/log/cron, /var/log/messages and /var/log/syslog.
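To find that file on a given machine, a small Bash sketch could first list the syslog rules that mention the cron facility, then pick the first readable file among the usual destinations. The function names cron_syslog_rules and find_cron_log are hypothetical, and the default config and log paths are common defaults, not guarantees for every distro.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print uncommented syslog rules that mention the cron
# facility. The default paths are typical, not universal; pass your own.
cron_syslog_rules() {
    local files=("$@")
    (( ${#files[@]} )) || files=(/etc/syslog.conf /etc/rsyslog.conf /etc/rsyslog.d/*.conf)
    # Skip lines whose "cron." appears only after a '#' comment marker.
    grep -H -E '^[^#]*cron\.' -- "${files[@]}" 2>/dev/null
}

# Hypothetical helper: print the first readable file among the usual
# cron log destinations (or among the paths given as arguments).
find_cron_log() {
    local candidates=("$@") f
    (( ${#candidates[@]} )) || candidates=(/var/log/cron /var/log/messages /var/log/syslog)
    for f in "${candidates[@]}"; do
        if [[ -r "$f" ]]; then
            printf '%s\n' "$f"
            return 0
        fi
    done
    echo "find_cron_log: no readable cron log found" >&2
    return 1
}
```

For example, run cron_syslog_rules to see the routing, then grep -i cron "$(find_cron_log)" | tail -n 20 to see the most recent entries (you may need root to read the log).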

In case 2, you should inspect the mailer daemon logs: messages from the cron daemon usually appear to come from root@yourhost. You can use a MAILTO=... line in the crontab file to have cron send email to a specific address, which should make it easier to grep the mailer daemon logs. For instance:

MAILTO=my.offsite.email@example.org

00 15 * * * echo "Just testing if crond sends email"

In case 3, you can test whether the program was actually run by appending another command whose effect you can easily check, for instance:

00 15 * * * /a/command; touch /tmp/a_command_has_run

so you can check whether crond has actually run something by looking at the mtime of /tmp/a_command_has_run.
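A minimal Bash sketch of that check, assuming a find that supports -maxdepth and -mmin (GNU and BSD find both do). ran_within_minutes is a hypothetical helper that succeeds if the marker file was modified less than N minutes ago. Note that with ; the touch runs whether or not /a/command succeeded; use && instead if you only want to record successful runs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the marker file was modified less than
# N minutes ago. Uses find's -mmin test instead of parsing `ls` or `stat`
# output, whose formats differ between systems.
ran_within_minutes() {
    local marker=$1 minutes=$2
    [[ -e "$marker" ]] || return 1
    # find prints the path only if its mtime is newer than N minutes.
    [[ -n "$(find "$marker" -maxdepth 0 -mmin "-$minutes")" ]]
}
```

For example: ran_within_minutes /tmp/a_command_has_run 1440 && echo "ran in the last 24 hours", or simply ls -l /tmp/a_command_has_run to read the timestamp yourself.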

How to easily log all output from any executable

…what I need right now is to learn how to

- Find log files about cron jobs

- Configure what gets logged

Summary

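Put this magic line at the top of any Bash script, including wrapper scripts which get called as cron jobs, in order to have all stdout and stderr output thereafter get automatically logged! (It relies on Bash process substitution, so it will not work under plain sh, and the $HOME/cronjob_logs directory must already exist.)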

exec > >(tee -a "$HOME/cronjob_logs/my_log.log") 2>&1

Details

Cron jobs are just scripts that get called at a fixed time or interval by a scheduler. They log only what their executables tell them to log.

But what you can do is edit the command as listed in your crontab -e file so that it causes all output to get logged whenever it is called.

There are a variety of approaches, such as directing it to a file at call time, like this:

# `crontab -e` entry which gets called every day at 2am

0 2 * * * some_cmd > $HOME/cronjob_logs/some_cmd.log 2>&1

But, what if I want it to automatically log when I call it normally to test it, too? I’d like it to log every time I call some_cmd, and I’d still like it to show all output to the screen as well so that when I call the script manually to test it I still see all output! The best way I have come up with is this. It looks really weird, but it’s very powerful! Simply add these lines to the top of any script and all stdout or stderr output thereafter will get automatically logged to the specified file, named after the script you are running itself! So, if your script is called some_cmd, then all output will get logged to $HOME/cronjob_logs/some_cmd.log:

# See my ans: https://stackoverflow.com/a/60157372/4561887
FULL_PATH_TO_SCRIPT="$(realpath "${BASH_SOURCE[-1]}")"
SCRIPT_DIRECTORY="$(dirname "$FULL_PATH_TO_SCRIPT")"
SCRIPT_FILENAME="$(basename "$FULL_PATH_TO_SCRIPT")"
# See:
# 1. https://stackoverflow.com/a/49514467/4561887 and
# 2. https://superuser.com/a/569315/425838
mkdir -p "$HOME/cronjob_logs"
exec > >(tee -a "$HOME/cronjob_logs/${SCRIPT_FILENAME}.log") 2>&1 # <=MAGIC CMD

Here are the relevant references from above to understand it all:

- [my answer] How to obtain the full file path, full directory, and base filename of any script being run OR sourced…

- Explain the bash command exec > >(tee $LOG_FILE) 2>&1 («process substitution»)

- How can I view results of my cron jobs? (shows something similar to the «process substitution» above)
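If you don't need a live copy of the output on screen, a simpler variant (the exec &>> form from the superuser.com link cited in the script comments) skips tee and appends straight to the file. This is a minimal sketch using the portable spelling exec >>file 2>&1; the function name start_file_logging is hypothetical. A side benefit is that there is no background tee process that might still be flushing when the script exits.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append all later stdout and stderr of the *current*
# shell to the given file. No tee, so nothing is echoed to the terminal.
start_file_logging() {
    local log_file=$1
    mkdir -p -- "$(dirname -- "$log_file")"
    # `exec` with only redirections (no command) rewires the current shell's
    # file descriptors for the rest of the script; this is the portable
    # spelling of Bash's `exec &>>file`.
    exec >>"$log_file" 2>&1
}
```

Usage at the top of a script: start_file_logging "$HOME/cronjob_logs/$(basename "$0").log".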

Let’s see this in full context, with a little beautification and a bunch of explanatory comments, where I am wrapping some_executable (any executable: bash, C, C++, Python, whatever) with a some_executable.sh bash wrapper to enable logging:

some_executable.sh:

#!/usr/bin/env bash
# (Bash is required: the logger below uses process substitution.)

# -------------- cron job automatic logger code START --------------
# See my ans: https://stackoverflow.com/a/60157372/4561887
FULL_PATH_TO_SCRIPT="$(realpath "${BASH_SOURCE[-1]}")"
SCRIPT_DIRECTORY="$(dirname "$FULL_PATH_TO_SCRIPT")"
SCRIPT_FILENAME="$(basename "$FULL_PATH_TO_SCRIPT")"

# Automatically log the output of this script to a file!
begin_logging() {
    mkdir -p ~/cronjob_logs

    # Redirect all future prints in this script from this call-point forward to
    # both the screen and a log file!
    #
    # This is about as magic as it gets! This line uses `exec` + bash "process
    # substitution" to redirect all future print statements in this script
    # after this line from `stdout` to the `tee` command used below, instead.
    # This way, they get printed to the screen *and* to the specified log file
    # here! The `2>&1` part redirects `stderr` to `stdout` as well, so that
    # `stderr` output gets logged into the file too.
    # See:
    # 1. *****+++ https://stackoverflow.com/a/49514467/4561887 -
    #    shows `exec > >(tee $LOG_FILE) 2>&1`
    # 2. https://superuser.com/a/569315/425838 - shows `exec &>>` (similar)
    exec > >(tee -a "$HOME/cronjob_logs/${SCRIPT_FILENAME}.log") 2>&1

    echo ""
    echo "====================================================================="
    echo "Running cronjob \"$FULL_PATH_TO_SCRIPT\""
    echo "on $(date)."
    echo "Cmd: $0 $*"
    echo "====================================================================="
}
# --------------- cron job automatic logger code END ---------------

# THE REST OF THE SCRIPT GOES BELOW THIS POINT.
# ------------------------------------------------------------------

main() {
    some_executable
    echo "= DONE."
    echo ""
}

# ------------------------------------------------------------------------------
# main program entry point
# ------------------------------------------------------------------------------
begin_logging "$@"
time main "$@"

There you have it! Now isn’t that pretty and nice!? Just put whatever you want in the main() function, and all of the output there gets automatically logged into $HOME/cronjob_logs/some_executable.sh.log, whether you call this wrapper manually or whether it is called by a cron job! And, since the tee command is used, all output goes to the terminal too when you run it manually so you can see it live as well.

Each log entry gets prefixed with a nice header too.

Here is an example log entry:

=========================================================================================
Running cronjob "/home/gabriel/GS/dev/eRCaGuy_dotfiles/useful_scripts/cronjobs/repo_git_pull_latest.sh"
on Wed Aug 10 02:00:01 MST 2022.
Cmd: REMOTE_NAME="origin" MAIN_BRANCH_NAME="main" PATH_TO_REPO="/home/gabriel/GS/dev/random_repo" "/home/gabriel/GS/dev/eRCaGuy_dotfiles/useful_scripts/cronjobs/repo_git_pull_latest.sh"
=========================================================================================
= Checking to see if the git server is up and running (will time out after 40s)...
= git server is NOT available; exiting now

real 0m40.020s
user 0m0.012s
sys 0m0.003s

You can find and borrow this whole script from my eRCaGuy_dotfiles repo here: repo_git_pull_latest.sh.

See also my cronjob readme and notes here: https://github.com/ElectricRCAircraftGuy/eRCaGuy_dotfiles/tree/master/useful_scripts/cronjobs

cron already sends the standard output and standard error of every job it runs by mail to the owner of the cron job.

You can use MAILTO=recipient in the crontab file to have the emails sent to a different account.

For this to work, you need to have mail working properly. Delivering to a local mailbox is usually not a problem (in fact, chances are ls -l "$MAIL" will reveal that you have already been receiving some), but getting it off the box and out onto the internet requires the MTA (Postfix, Sendmail, what have you) to be properly configured to connect to the world.
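To see whether cron mail has been piling up locally, a rough Bash sketch could count the cron-generated messages in your mailbox. This assumes an mbox-format mailbox and the "Subject: Cron <user@host> command" header that Vixie cron and cronie put on job mail; other cron implementations may word it differently. count_cron_mails is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count cron-generated messages in an mbox file.
# Assumes the "Subject: Cron <user@host> command" header used by Vixie
# cron / cronie; adjust the pattern for other cron implementations.
count_cron_mails() {
    local mbox=${1:-${MAIL:-/var/mail/$USER}}
    if [[ ! -r "$mbox" ]]; then
        echo "count_cron_mails: cannot read $mbox" >&2
        return 1
    fi
    # grep -c prints the number of matching lines (0 if none).
    grep -c -e '^Subject: Cron <' -- "$mbox"
}
```

Called without arguments it falls back to $MAIL, then to /var/mail/$USER.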

If there is no output, no email will be generated.

A common arrangement is to redirect output to a file, in which case of course the cron daemon won’t see the job return any output. A variant is to redirect standard output to a file (or write the script so it never prints anything — perhaps it stores results in a database instead, or performs maintenance tasks which simply don’t output anything?) and only receive an email if there is an error message.

To redirect both output streams, the syntax is

42 17 * * * script >>stdout.log 2>>stderr.log

Notice how we append (double >>) instead of overwrite, so that any previous job’s output is not replaced by the next one’s.
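Here is a small Bash simulation of that redirection: a stand-in job (the hypothetical my_job) writes results to stdout and a warning to stderr, and two "scheduled runs" append to separate files, so both runs survive in each log. A temporary directory stands in for wherever your real logs live.

```shell
#!/usr/bin/env bash
# Stand-in for the cron job: results on stdout, diagnostics on stderr.
# (`my_job` is a hypothetical name; substitute your own script.)
my_job() {
    echo "result: $(date '+%F %T')"
    echo "my_job: warning: nothing to do" >&2
}

# Temporary directory standing in for the real log location.
log_dir=$(mktemp -d)

# Two "scheduled runs". With >> and 2>> each run appends, so the output
# of the first run is not replaced by the second.
for _ in 1 2; do
    my_job >>"$log_dir/stdout.log" 2>>"$log_dir/stderr.log"
done

wc -l "$log_dir/stdout.log" "$log_dir/stderr.log"
```

With a single > instead, each file would only ever contain the last run's output.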

As suggested in many answers here, you can have both output streams be sent to a single file; replace the second redirection with 2>&1 to say «standard error should go wherever standard output is going». (But I don’t particularly endorse this practice. It mainly makes sense if you don’t really expect anything on standard output, but may have overlooked something, perhaps coming from an external tool which is called from your script.)

cron jobs start in your home directory, so any relative file names are resolved relative to it. If you want to write outside of your home directory, you obviously need to separately make sure you have write access to that destination file.

A common antipattern is to redirect everything to /dev/null (and then ask Stack Overflow to help you figure out what went wrong when something is not working; but we can’t see the lost output, either!)

From within your script, make sure to keep regular output (actual results, ideally in machine-readable form) and diagnostics (usually formatted for a human reader) separate. In a shell script,

echo "$results" # regular results go to stdout

echo "$0: something went wrong" >&2

Some platforms (and e.g. GNU Awk) allow you to use the file name /dev/stderr for error messages, but this is not properly portable; in Perl, warn and die print to standard error; in Python, write to sys.stderr, or use logging; in Ruby, try $stderr.puts. Notice also how error messages should include the name of the script which produced the diagnostic message.
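In a Bash script, the two channels and the script-name prefix can be wrapped in a few tiny helpers. This is one common convention, sketched here with hypothetical names (warn, die, report), not a standard API.

```shell
#!/usr/bin/env bash
# Script name without its directory, used to prefix every diagnostic so a
# mail from cron (or a shared stderr log) says which job complained.
prog=${0##*/}

# Diagnostics: human-readable, to stderr.
warn() {
    printf '%s: %s\n' "$prog" "$*" >&2
}

# Fatal diagnostic: report to stderr, then exit with a failure status.
die() {
    warn "$@"
    exit 1
}

# Regular results: unprefixed, to stdout, ideally machine-readable.
report() {
    printf '%s\n' "$*"
}
```

A job would then call report "$count" for its result and die "cannot reach backup server" on failure, so only the latter ends up in cron's mail when stdout is redirected to a file.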


I have 2 cron jobs that were running fine but have now stopped running. I looked through my crontab and everything looks OK. How can I tell if these jobs are running? Is there a place where cron stores errors? Where do I look for cron-specific errors, or even a record of the cron jobs that ran previously?

asked Apr 1, 2015 at 15:01


Try directing the output to a log file. Just make sure that the file exists and is writable by whatever user the cron job is running as. (In my example, this would be cyphertite.)

I also recommend running date somewhere at the beginning of your script so that you get the date in the log file.

0 15 * * * /home/andrew/daily-backup.sh >> /var/log/cyphertite.log
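Both pieces of advice above (check that the log is writable, and put the date at the top of each run) can go into a small Bash helper called at the start of the job script. prepare_log is a hypothetical name; this is a sketch, not part of the original answer.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure the log file exists and is writable by the
# user the job runs as, then stamp the start time into it.
prepare_log() {
    local log_file=$1
    # Create the file if needed; this fails if the directory is not writable.
    if ! touch -- "$log_file" 2>/dev/null; then
        echo "prepare_log: cannot create $log_file as $(id -un)" >&2
        return 1
    fi
    if [[ ! -w "$log_file" ]]; then
        echo "prepare_log: $log_file is not writable by $(id -un)" >&2
        return 1
    fi
    # Date header so each run is easy to find in the appended log.
    printf '=== %s: %s started ===\n' "$(date '+%F %T')" "${0##*/}" >>"$log_file"
}
```

For example, the first line of daily-backup.sh could be prepare_log /var/log/cyphertite.log || exit 1; if it fails, the message goes to stderr, which cron mails to you because only stdout is redirected in the crontab line above.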

Additionally, on Debian-based systems cron logs are directed to /var/log/syslog, if that helps.

answered Apr 1, 2015 at 15:23

Andrew Meyer


As a Linux user, you are probably already familiar with crontab. You can automate tasks by running commands and scripts at a predefined schedule. Want to automatically take backups? Crontab is your friend.

I am not going into the usage of crontab here. My focus is on showing you the different ways to check crontab logs.

Checking these logs helps you investigate whether your cron jobs ran as scheduled.

Method 1: Check the syslog for crontab logs

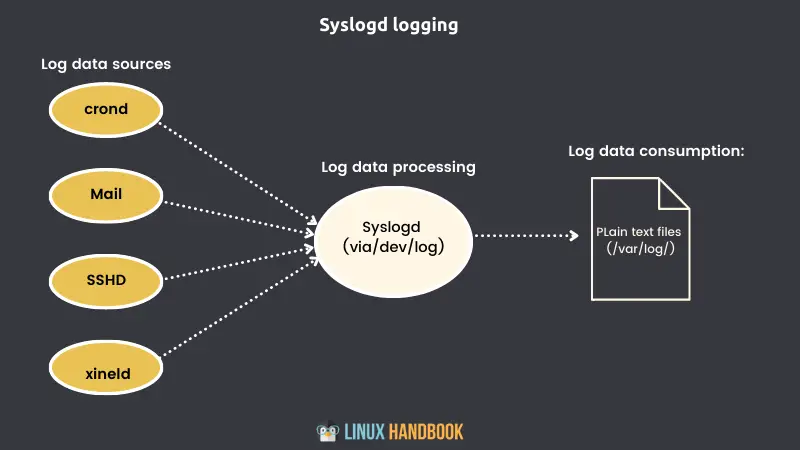

As per the Linux directory hierarchy, the /var/log directory in Linux stores logs from the system, services, and running applications.

While the cron logs are also in this directory, there is no standard file for these logs. Different distributions keep them in different files.

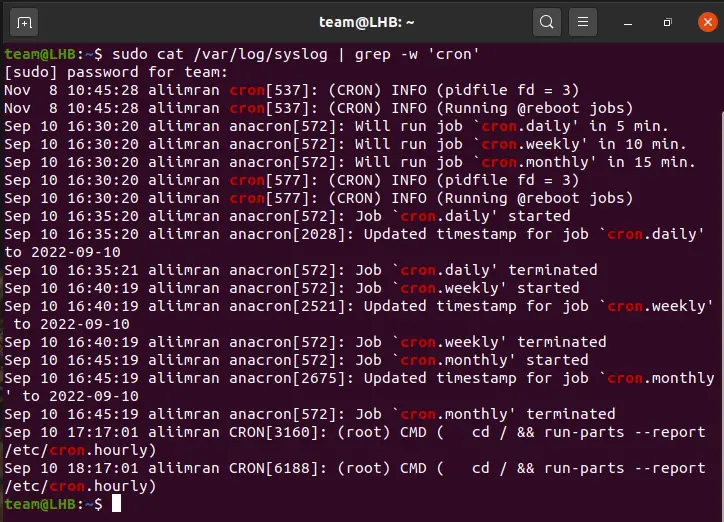

For Debian-based distributions, the file /var/log/syslog contains the logs from the cron job execution and should be consulted in case your cron jobs are not working:

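grep -iw cron /var/log/syslog

You will see all cron-related log entries listed in your terminal when the above command is run. grep filters the cron-related messages out from the rest; the -i option makes the match case-insensitive, which matters because Debian-based systems log job runs under the upper-case tag CRON.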

For RedHat-based distros, the cron logs have a dedicated file, /var/log/cron.

In both cases, you will probably need to prefix the command with sudo or use the root account to access the logs.
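The same filtering works for either file, so it can be wrapped in a small Bash function that takes the log path and keeps only the most recent matches. cron_log_lines is a hypothetical name, and the default of 50 lines is arbitrary.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the last N cron-related lines of a syslog-style
# file (default /var/log/syslog; use /var/log/cron on RedHat-based distros).
# -i matches both "CRON[pid]" job entries and "cron[pid]" daemon entries;
# -w avoids matching words like "anacron" or "crontab".
cron_log_lines() {
    local log_file=${1:-/var/log/syslog}
    local count=${2:-50}
    grep -iw -e 'cron' -- "$log_file" | tail -n "$count"
}
```

For example: sudo bash -c "$(declare -f cron_log_lines); cron_log_lines /var/log/cron 20", or simply run the function from a root shell.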


Method 2: Use a custom log file (recommended)

Using a separate custom file for logging cron jobs is a recommended practice.

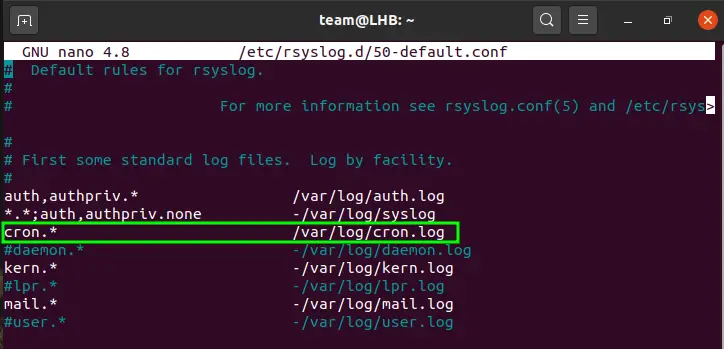

For this, you can configure rsyslog to route cron messages to a file of their own. rsyslog is a syslog daemon for Linux that provides classic syslog logging plus additional features.

Simply create a file cron.log under the directory /var/log:

sudo touch /var/log/cron.log

Now open the file /etc/rsyslog.d/50-default.conf for editing:

sudo nano /etc/rsyslog.d/50-default.conf

Locate the line starting with #cron.* and remove the # at the start of the line.
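If you prefer to make this edit non-interactively instead of in nano, a sed one-liner can strip the leading # from that rule. This is a sketch assuming the rule is written as #cron.* (as in Ubuntu's 50-default.conf) and a sed that accepts -i.bak (GNU and BSD sed both do); enable_cron_rule is a hypothetical name, and it must run as root against the real file. The backup is saved as 50-default.conf.bak; Ubuntu's default rsyslog.conf only includes /etc/rsyslog.d/*.conf, so the backup should not be loaded, but check your own setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: uncomment the "cron.*" rule in an rsyslog config file.
# Assumes the rule is written as "#cron.*" and a sed that supports -i.bak.
enable_cron_rule() {
    local conf=${1:-/etc/rsyslog.d/50-default.conf}
    if [[ ! -w "$conf" ]]; then
        echo "enable_cron_rule: cannot write $conf (run as root)" >&2
        return 1
    fi
    # -i.bak edits in place and keeps the original as "$conf.bak".
    # Only a line beginning with "#" followed by "cron.*" is changed.
    sed -i.bak -e 's/^#[[:space:]]*\(cron\.\*\)/\1/' "$conf"
}
```

You still need to restart rsyslog afterwards, as described next.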

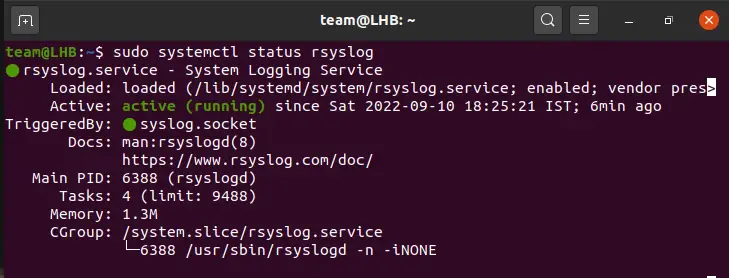

To apply the change, save and close the file, then restart the rsyslog service and check its status:

sudo systemctl restart rsyslog
sudo systemctl status rsyslog

The status of the service should be shown as active (running).

Now, whenever you need to access the crontab logs, just read the content of this log file:

less /var/log/cron.log

Method 3: Use dedicated services like Cronitor to monitor cron jobs

Cronitor is a service that can be deployed to monitor any type of cron job.

Many cron implementations log when a scheduled job executes or when there is a problem with the crontab. However, the output of the cron job and its exit status are not logged.

This is where Cronitor comes in handy. It aims to be a complete solution for monitoring cron jobs: it captures logs, metrics, and status from all of your jobs and sends instant alerts for cron jobs that crash or fail to start.

You can see all this through a web-based interface.

For Cronitor or CronitorCLI installed on Kubernetes, up to 100 MB of logs can be captured for a single execution. Detailed installation steps for CronitorCLI on Linux are available in the Cronitor documentation.

Other monitoring tools and services like Better Uptime also provide the feature to monitor the cron jobs automatically.
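If you only need the missing pieces (output and exit status) recorded locally, without a hosted service, a plain-Bash wrapper can approximate part of what these tools capture. This is a sketch of that swapped-in technique, not Cronitor's API; run_and_log_status is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run a command, append its stdout/stderr to a log
# file, and record what stock cron does not: start time, duration, and exit
# status. The wrapper returns the command's own exit status.
run_and_log_status() {
    local log_file=$1; shift
    local start status
    start=$(date +%s)
    {
        printf '=== %s: running %s\n' "$(date '+%F %T')" "$*"
        "$@"
        status=$?
        printf '=== exit status %d after %ds\n' "$status" "$(( $(date +%s) - start ))"
    } >>"$log_file" 2>&1
    return "$status"
}
```

Because the exit status is passed through, a wrapper script can still exit non-zero on failure, and grep 'exit status [1-9]' on the log quickly lists the failed runs.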


Conclusion

System log files are crucial for troubleshooting and diagnosing system-related issues, and cron logs are no exception.

The syslog keeps the logs related to crontab; however, having a dedicated log file for cron is recommended. Web-based services such as Cronitor, Better Uptime, and Uptime Robot also help.