Introduction

If you are experiencing an issue with your Vault and look in your Operational logs, you may see errors that have `context deadline exceeded` in them. This article aims to explain this error a bit more and what the possible causes are.

What does `context deadline exceeded` mean?

Most HashiCorp software, including Vault, is built using Go. We use contexts for connections to provide a timeout or deadline mechanism. The error ‘context deadline exceeded’ means that we ran into a situation where a given action was not completed in an expected timeframe. For Vault this is typically going to be related to a network connection made to an external system such as a database or even a storage backend such as Consul.

Example Error:

2020-05-09T02:19:38.136-0500 [ERROR] expiration: failed to revoke lease: lease_id=auth/approle/login/<hashed_value> error="failed to revoke token: failed to revoke entry: failed to delete entry: context deadline exceeded"

Looking at the error above we can see there was a problem revoking a lease. In this particular case Vault was leveraging Consul as its backend, and was having an issue.

Possible Causes:

-

Network Latency

- Firewall Rules / Cloud Security Rules

- Resource Contention

-

Slow I/O

There are multiple issues that can lead to this error message. Most often it is a network related connection to an external database connection or Vault backend. This can be increased latency between Vault and the external system, or a misconfiguration of Firewall Rules or Cloud Security Rules/Groups. You can also see this error if there is slow I/O on the external system. During periods of high Vault usage (Token generation, Lease Expiration, etc.) I/O load on the underlying storage system will grow. Depending on the underlying infrastructure, this can be a limiting factor on how long a response can come back. Using Consul as an example, if Consul is experiencing high I/O load, then it may be slower to respond to a given request originating from Vault. This period of time can be longer than what Vault is expecting and you can see context deadline exceeded errors.

Solutions:

Before being able to solve the problem, you need to determine what is actually failing. For example if we look at the above error, we can make a determination that we aren’t relying on a network connection to an external database such as MySQL or Oracle as the lease was generated from an Approle Auth method. That means that we are looking at something internal to Vault itself. In this case since we know Vault is using Consul as its storage backend, we can begin investigating any potential communication issues between Vault and Consul.

- Investigate Network connectivity: Can Vault and the required systems communicate in general? Were there any changes made recently that could have impacted this communication? Are there any firewalls in place that can be preventing communication? If in the cloud, are the instances in the same VPC/Network? Do the instances belong to the correct/expected Security Groups?

- Resource Contention: Are we asking too much of the underlying provisioned infrastructure? What is our CPU/Memory usage?

- Slow I/O: Are we using an external storage backend? Are we seeing I/O wait? Are we in the cloud? Do we have enough IOPS provisioned for our storage?

Context Deadline Exceeded is an error occurring in Go when a context of an HTTP request has a deadline or a timeout set, i.e., the time after which the request should abort. This error is returned if the time of a server response is greater than the set timeout. Setting timeouts on requests is a good practice in a production environment to ensure that you always get a response (or error) in a finite time.

Context deadline exceeded example

package main

import (

"context"

"errors"

"log"

"net/http"

"os"

"time"

)

func slowServer(w http.ResponseWriter, r *http.Request) {

time.Sleep(10 * time.Second)

w.Write([]byte("Hello world!"))

}

func call() error {

client := &http.Client{}

req, err := http.NewRequest(http.MethodGet, "http://localhost:8080", nil)

if err != nil {

return err

}

ctx, cancel := context.WithTimeout(req.Context(), 1*time.Second)

defer cancel()

req = req.WithContext(ctx)

_, err = client.Do(req)

return err

}

func main() {

// run slow server

go func() {

http.HandleFunc("/", slowServer)

if err := http.ListenAndServe(":8080", nil); err != nil {

log.Fatal(err)

}

}()

time.Sleep(1 * time.Second) // wait for server to run

// call server

err := call()

if errors.Is(err, context.DeadlineExceeded) {

log.Println("ContextDeadlineExceeded: true")

}

if os.IsTimeout(err) {

log.Println("IsTimeoutError: true")

}

if err != nil {

log.Fatal(err)

}

}

Output:

2021/08/19 06:39:09 ContextDeadlineExceeded: true

2021/08/19 06:39:09 IsTimeoutError: true

2021/08/19 06:39:09 Get "http://localhost:8080": context deadline exceeded

exit status 1In the example above, we:

- run our slow server, whose task is to return a response after 10 seconds

- create a new request in the

call()function, which will be sent to the server - set a timeout of 1 second on this request, i.e., the value of time after the waiting for the server response is interrupted

- send the request to the server

Since a timeout for this request is set to 1 second and the server responds after 10 seconds, the HTTP client returns an error.

Handle Context deadline exceeded error

An HTTP client returns the context.DeadlineExceeded error when the set timeout is exceeded. This error can also be handled with the more general os.IsTimeout() function that checks if the error is known to report that a timeout occurred.

The timeout can be set not only at the level of a single HTTP request but also at the level of the entire HTTP client. In this case, each request made by such a client has the same timeout value. See the example of a call() function using the client timeout option:

func call() error {

client := &http.Client{Timeout: 1 * time.Second}

_, err := client.Get("http://localhost:8080")

return err

}

Output:

2021/08/19 07:35:14 IsTimeoutError: true

2021/08/19 07:35:14 Get "http://localhost:8080": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

exit status 1In this case, we get the context deadline exceeded (Client.Timeout exceeded while awaiting headers) error. Note that this is not an instance of context.DeadlineExceeded error.

Wondering how to resolve Kubernetes error “context deadline exceeded”? We can help you.

As part of our Server Management Services, we assist our customers with several Kubernetes queries.

Today, let us see how our Support techs proceed to resolve it.

How to resolve Kubernetes error “context deadline exceeded”?

The error ‘context deadline exceeded’ means that we ran into a situation where a given action was not completed in an expected timeframe.

Usually, issue occurs if Pods become stuck in Init status.

Typical error will look as shown below:

Warning FailedCreatePodSandBox 93s (x8 over 29m) kubelet, 97011e0a-f47c-4673-ace7-d6f74cde9934 Failed to create pod sandbox: rpc error: code = DeadlineExceeded desc = context deadline exceeded Normal SandboxChanged 92s (x8 over 29m) kubelet, 97011e0a-f47c-4673-ace7-d6f74cde9934 Pod sandbox changed, it will be killed and re-created.You would see errors for containers from kubelet.stderr.log.

Example:

E0114 14:57:13.656196 9838 remote_runtime.go:128] StopPodSandbox "ca05be4d6453ae91f63fd3f240cbdf8b34377b3643883075a6f5e05001d3646b" from runtime service failed: rpc error: code = DeadlineExceeded desc = context deadline exceeded

...

E0114 14:57:13.656256 9838 kuberuntime_manager.go:901] Failed to stop sandbox {"docker" "ca05be4d6453ae91f63fd3f240cbdf8b34377b3643883075a6f5e05001d3646b"}

...

W0114 14:57:30.151650 9838 cni.go:331] CNI failed to retrieve network namespace path: cannot find network namespace for the terminated container "ca05be4d6453ae91f63fd3f240cbdf8b34377b3643883075a6f5e05001d3646b"You can also validate the status of the node-agent-hyperbus by running the following nsxcli command from the node (as root):

sudo -i/var/vcap/jobs/nsx-node-agent/bin/nsxcli“at the nsx-cli prompt, enter”: get node-agent-hyperbus status

Expected output:

HyperBus status: HealthyIn this scenario you would see the following error instead:

% An internal error occurred

This causes a loop of DEL (delete) requests to the nsx-node-agent process

Today, let us see the simple steps followed by our Support techs to resolve it.

Restarting the nsx-node-agent process will workaround this issue:

— Use “bosh ssh” command to access the worker node

Then, run the below commands.

sudo -imonit restart nsx-node-agent— Wait for nsx-node-agent to restart: watch monit summary

This must resolve the issue.

[Stuck in between? We’d be glad to assist you]

Conclusion

In short, today we saw steps followed by our Support Techs resolve Kubernetes failed to start in docker desktop error.

PREVENT YOUR SERVER FROM CRASHING!

Never again lose customers to poor server speed! Let us help you.

Our server experts will monitor & maintain your server 24/7 so that it remains lightning fast and secure.

GET STARTED

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and

privacy statement. We’ll occasionally send you account related emails.

Already on GitHub?

Sign in

to your account

Closed

dictcp opened this issue

May 6, 2017

· 51 comments

· Fixed by #3715

Closed

Error: context deadline exceeded

#2409

dictcp opened this issue

May 6, 2017

· 51 comments

· Fixed by #3715

Comments

I tried to install latest Helm (v2.4.1) on my fresh created GKE cluster (with k8s 1.5.7). and it give out error whenever I install any chart. Attached are the logging of my previous command execution.

~ gcloud container clusters get-credentials minidock --zone xxxxxx --project xxxxxx

Fetching cluster endpoint and auth data.

kubeconfig entry generated for minidock.

~ helm init

$HELM_HOME has been configured at /home/dick/.helm.

Tiller (the helm server side component) has been installed into your Kubernetes Cluster.

Happy Helming!

~ kubectl get po --namespace kube-system

NAME READY STATUS RESTARTS AGE

fluentd-cloud-logging-gke-minidock-default-pool-9b0813ac-3zdz 1/1 Running 0 21m

heapster-v1.2.0.1-1244163626-jmtpv 2/2 Running 0 21m

kube-dns-3475745875-ngw5q 4/4 Running 0 22m

kube-dns-autoscaler-2835134359-1z3hg 1/1 Running 0 22m

kube-proxy-gke-minidock-default-pool-9b0813ac-3zdz 1/1 Running 0 22m

kubernetes-dashboard-3968242289-t3gpk 1/1 Running 0 22m

l7-default-backend-2001230086-vt3n0 1/1 Running 0 22m

tiller-deploy-3210876050-4p7sx 1/1 Running 0 20m

~ kubectl --namespace kube-system logs tiller-deploy-3210876050-4p7sx

Starting Tiller v2.4.1 (tls=false)

GRPC listening on :44134

Probes listening on :44135

Storage driver is ConfigMap

~ helm install --debug stable/wordpress

[debug] Created tunnel using local port: '34772'

[debug] SERVER: "localhost:34772"

[debug] Fetched stable/wordpress to wordpress-0.6.0.tgz

[debug] CHART PATH: /home/dick/wordpress-0.6.0.tgz

Error: context deadline exceeded

Could you get us the tiller logs for when this happened?

I’m also seeing exactly the same issue —

no logs at all from tiller, except the standard start up logs:

Starting Tiller v2.4.2 (tls=false)

GRPC listening on :44134

Probes listening on :44135

Storage driver is ConfigMap

I’m also seeing this with Tiller v2.4.2 with Helm v2.4.2. Let me know if there’s any other info you need…

Full logs attached — note: I’m running 1.6.2 not 1.5.x here and getting the same issue. Issue also occurs with Tiller 2.4.2 with Helm 2.3.1.

bash-4.3# kubectl get no NAME STATUS AGE VERSION gke-gs-staging-gke-default-pool-8a9f998e-ghwc Ready 2d v1.6.2 bash-4.3# kubectl get po -n kube-system NAME READY STATUS RESTARTS AGE fluentd-gcp-v2.0-j2xff 1/1 Running 0 2d heapster-v1.3.0-3440173064-bz5jr 2/2 Running 0 2d kube-dns-3263495268-0hn0m 3/3 Running 0 2d kube-dns-autoscaler-2362253537-dv7d7 1/1 Running 0 2d kube-proxy-gke-gs-staging-gke-default-pool-8a9f998e-ghwc 1/1 Running 0 2d kubernetes-dashboard-490794276-9ddww 1/1 Running 0 2d l7-default-backend-3574702981-dhj0x 1/1 Running 0 2d tiller-deploy-1651596238-v57zs 1/1 Running 0 9m bash-4.3# kubectl logs -n kube-system tiller-deploy-1651596238-v57zs Starting Tiller v2.4.2 (tls=false) GRPC listening on :44134 Probes listening on :44135 Storage driver is ConfigMap bash-4.3# helm version --debug [debug] Created tunnel using local port: '36495' [debug] SERVER: "localhost:36495" Client: &version.Version{SemVer:"v2.4.2", GitCommit:"82d8e9498d96535cc6787a6a9194a76161d29b4c", GitTreeState:"clean"} [debug] context deadline exceeded Error: cannot connect to Tiller bash-4.3# kubectl logs -n kube-system tiller-deploy-1651596238-v57zs Starting Tiller v2.4.2 (tls=false) GRPC listening on :44134 Probes listening on :44135 Storage driver is ConfigMap

I’m actually seeing this with all clusters, not just GKE.

I’ve also noticed Helm works fine from my OS X box to the same cluster. It seems to not work when in an alpine docker container. If I try the same process with a Debian container, it seems to work fine. The dockerfile in question that produces a Helm that doesn’t work:

FROM alpine:3.5

RUN apk add --no-cache \

openssl \

curl \

bash \

python2 \

make \

graphviz \

jq \

ttf-ubuntu-font-family

# Install gcloud SDK

ENV GCLOUD_VERSION 153.0.0

ENV GCLOUD_HASH ade29e765f7847bf6081affb6eada69b45138d4abb443b1484e891312990e958

RUN cd /tmp && \

curl -L https://dl.google.com/dl/cloudsdk/channels/rapid/downloads/google-cloud-sdk-${GCLOUD_VERSION}-linux-x86_64.tar.gz > /tmp/gcloud.tar.gz && \

echo "${GCLOUD_HASH} /tmp/gcloud.tar.gz" | sha256sum -c && \

tar xvf /tmp/gcloud.tar.gz && \

/tmp/google-cloud-sdk/install.sh && \

rm -v /tmp/gcloud*

# Add gcloud binaries to PATH

ENV PATH /tmp/google-cloud-sdk/bin:$PATH

RUN gcloud components install -q kubectl

# Install terraform

ENV TERRAFORM_VERSION 0.9.4

ENV TERRAFORM_HASH cc1cffee3b82820b7f049bb290b841762ee920aef3cf4d95382cc7ea01135707

RUN cd /tmp && \

curl -L https://releases.hashicorp.com/terraform/${TERRAFORM_VERSION}/terraform_${TERRAFORM_VERSION}_linux_amd64.zip > /tmp/terraform.zip && \

echo "${TERRAFORM_HASH} /tmp/terraform.zip" | sha256sum -c && \

unzip /tmp/terraform.zip && \

mv terraform /usr/local/bin && \

rm -v /tmp/terraform*

# Install helm

ENV HELM_VERSION v2.4.1

RUN cd /tmp && \

curl -L https://storage.googleapis.com/kubernetes-helm/helm-${HELM_VERSION}-linux-amd64.tar.gz > /tmp/helm.tar.gz && \

tar xvf /tmp/helm.tar.gz && \

mv linux-amd64/helm /usr/local/bin && \

rm -rf /tmp/linux-amd64*

ENV HOME /root

# copy contents of the git

WORKDIR /terraform

ADD . /terraform

Can anyone else replicate this using the above image? The tiller server itself is not receiving any requests at all, however the local port does seem to be forwarded fine as I’m able to make a request using curl (to be presented with unreadable characters, presumably because curl isn’t speaking gRPC).

@munnerz I am seeing a few related issues in Kubernetes that appears to be related to Docker, possibly due to the number of pods running.

Ok, I’ll dig in to this bug next after I finish the one I am working on

@munnerz I tried this with a non-GKE k8s and didn’t have a problem. I don’t have a GKE cluster to test against right now though

Same error here on GKE:

[0] ~ >> helm version

Client: &version.Version{SemVer:"v2.5.0", GitCommit:"012cb0ac1a1b2f888144ef5a67b8dab6c2d45be6", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.5.0", GitCommit:"012cb0ac1a1b2f888144ef5a67b8dab6c2d45be6", GitTreeState:"clean"}

[0] ~ >> helm list thevotingapp

NAME REVISION UPDATED STATUS CHART NAMESPACE

thevotingapp 6 Wed Jun 28 12:38:44 2017 DEPLOYED thevotingapp-0.1.0 thevotingapp

[0] ~ >> helm get values thevotingapp

Error: context deadline exceeded

I’m seeing the same issue on Azure. helm list and helm get work just fine, but I hit the context deadline as soon as I try helm get manifest/values.

output:

><> helm list

NAME REVISION UPDATED STATUS CHART NAMESPACE

draft 2 Fri Jul 7 09:27:58 2017 FAILED draftd-canary kube-system

ingressctl 1 Thu Jul 6 15:16:48 2017 DEPLOYED nginx-ingress-0.6.0 kube-system

><> helm get draft

REVISION: 2

RELEASED: Fri Jul 7 09:27:58 2017

CHART: draftd-canary

[...]

><> helm get manifest draft

Error: context deadline exceeded

No tiller logs were available after the call to helm get manifest, but helm list and helm get shows regular logs:

[storage] 2017/07/07 18:46:54 listing all releases with filter

[storage] 2017/07/07 18:47:07 getting last revision of "draft"

[storage] 2017/07/07 18:47:07 getting release history for "draft"

This is just on a 2-node cluster (one master, one node). I’ll see if restarting the docker daemon on the node or just nuking the node from orbit will fix this issue.

I met this error in my cluster too, non-GKE.

This is my Environment:

Host:

Linux node01 4.4.0-81-generic #104-Ubuntu SMP Wed Jun 14 08:17:06 UTC 2017 x86_64 x86_64

x86_64 GNU/Linux

docker Version:

Client:

Version: 1.13.1

API version: 1.26

Go version: go1.7.5

Git commit: 092cba3

Built: Wed Feb 8 06:50:14 2017

OS/Arch: linux/amd64

Server:

Version: 1.13.1

API version: 1.26 (minimum version 1.12)

Go version: go1.7.5

Git commit: 092cba3

Built: Wed Feb 8 06:50:14 2017

OS/Arch: linux/amd64

Experimental: false

Error:

root@node01:~# helm version --debug

[debug] SERVER: "localhost:44134"

Client: &version.Version{SemVer:"v2.5.0", GitCommit:"012cb0ac1a1b2f888144ef5a67b8dab6c2d45be6", GitTreeState:"clean"}

[debug] context deadline exceeded

Error: cannot connect to Tiller

K8s Env:

Client Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.0", GitCommit:"58b7c16a52c03e4a849874602be42ee71afdcab1", GitTreeState:"clean", BuildDate:"2016-12-12T23:35:54Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.1", GitCommit:"82450d03cb057bab0950214ef122b67c83fb11df", GitTreeState:"clean", BuildDate:"2016-12-14T00:52:01Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"linux/amd64"}

Others:

When I request tillerPodIp:44134, tiller container print message like these:

root@node01:~# curl 10.244.0.5:44134

curl: (56) Recv failure: Connection reset by peer

���root@node01:docker logs 4d1cd134

[main] 2017/07/10 06:20:45 Starting Tiller v2.5.0 (tls=false)

[main] 2017/07/10 06:20:45 GRPC listening on :44134

[main] 2017/07/10 06:20:45 Probes listening on :44135

[main] 2017/07/10 06:20:45 Storage driver is ConfigMap

2017/07/10 06:42:31 transport: http2Server.HandleStreams received bogus greeting from client: "GET / HTTP/1.1\r\nHost: 10"

2017/07/10 06:47:51 transport: http2Server.HandleStreams received bogus greeting from client: "GET / HTTP/1.1\r\nHost: 10"

Same!

-> % helm get values excited-bison -a

Error: context deadline exceeded

-> % helm version

Client: &version.Version{SemVer:"v2.5.0", GitCommit:"012cb0ac1a1b2f888144ef5a67b8dab6c2d45be6", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.5.0", GitCommit:"012cb0ac1a1b2f888144ef5a67b8dab6c2d45be6", GitTreeState:"clean"}

Encountering the same problems on AWS with a kops-1.6.2 install using RBACs

helm get works, can install charts, but get manifest doesn’t work

$ helm version --debug

[debug] Created tunnel using local port: '1474'

[debug] SERVER: "localhost:1474"

Client: &version.Version{SemVer:"v2.5.0", GitCommit:"012cb0ac1a1b2f888144ef5a67b8dab6c2d45be6", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.5.0", GitCommit:"012cb0ac1a1b2f888144ef5a67b8dab6c2d45be6", GitTreeState:"clean"}

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"7", GitVersion:"v1.7.0", GitCommit:"d3ada0119e776222f11ec7945e6d860061339aad", GitTreeState:"clean", BuildDate:"2017-06-29T23:15:59Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"6", GitVersion:"v1.6.7", GitCommit:"095136c3078ccf887b9034b7ce598a0a1faff769", GitTreeState:"clean", BuildDate:"2017-07-05T16:40:42Z", GoVersion:"go1.7.6", Compiler:"gc", Platform:"linux/amd64"}

$ helm get manifest dunking-echidna

Error: context deadline exceeded

This issue was fixed in #2664 and is being shipped in v2.5.1.

I’m still having this issue even when using the latest canary release, which also seems to have some argument parsing errors.

Here’s my kubectl output

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"6", GitVersion:"v1.6.1", GitCommit:"b0b7a323cc5a4a2019b2e9520c21c7830b7f708e", GitTreeState:"clean", BuildDate:"2017-04-03T20:44:38Z", GoVersion:"go1.7.5", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"6", GitVersion:"v1.6.7+coreos.0", GitCommit:"c8c505ee26ac3ab4d1dff506c46bc5538bc66733", GitTreeState:"clean", BuildDate:"2017-07-06T17:38:33Z", GoVersion:"go1.7.6", Compiler:"gc", Platform:"linux/amd64"}

Helm output from latest canary release:

$ helm version --debug

unknown flag: --debug

Usage of homer:

--home string location of your Helm config. Overrides $HELM_HOME (default "$HOME/.helm")

Client: &version.Version{SemVer:"v2.5+unreleased", GitCommit:"1261f71faf1aa8b9a127a6e1759fd799a51d9528", GitTreeState:"clean"}

[debug] context deadline exceeded

Error: cannot connect to Tiller

Can you open a new issue for that, please?

You might also want to test #2682. It sounds like a fix may or may not be underway for v2.5.1.

this issue was fixed in v2.5.1. Closing!

I can confirm that this is an issue in helm 2.5.0

$ helm list --kube-context xyz.k8s.domain.net --host domain-helm-charts.s3-website-eu-west-1.amazonaws.com --all

Error: context deadline exceeded

Looks like we need to upgrade

I can reproduce this with 2.5.1 too

The issues I had above were caused by not having registered the node names in local DNS. The fix was to add --kubelet-preferred-address-types=InternalIP to the apiserver manifest.

I can confirm that this is a issue in helm 2.6.0

[root@k8s-master ~]# helm install -f config.yaml stable/redis —tiller-namespace default —host 192.168.8.33:44134 —debug

[debug] SERVER: «192.168.8.33:44134»

[debug] Original chart version: «»

[debug] Fetched stable/redis to /root/.helm/cache/archive/redis-0.9.0.tgz

[debug] CHART PATH: /root/.helm/cache/archive/redis-0.9.0.tgz

Error: context deadline exceeded

k8s version is :

Client Version: version.Info{Major:»1″, Minor:»7″, GitVersion:»v1.7.4″, GitCommit:»793658f2d7ca7f064d2bdf606519f9fe1229c381″, GitTreeState:»clean», BuildDate:»2017-08-17T08:48:23Z», GoVersion:»go1.8.3″, Compiler:»gc», Platform:»linux/amd64″}

Server Version: version.Info{Major:»1″, Minor:»7″, GitVersion:»v1.7.4″, GitCommit:»793658f2d7ca7f064d2bdf606519f9fe1229c381″, GitTreeState:»clean», BuildDate:»2017-08-17T08:30:51Z», GoVersion:»go1.8.3″, Compiler:»gc», Platform:»linux/amd64″}

Still an issue for me in 2.6.1 as well.

Is anyone experiencing this error after installing Helm with HomeBrew? I’m seeing success when installing directly with the «From Script» method in the wiki.

FYI @onlydole I made a comment above that might help give you more insight into the error.

I don’t think the difference between the CLI installed with homebrew and with the install script method will matter too much but it’s definitely an interesting data point since homebrew builds from source whereas the install script uses the pre-built binary CircleCI releases every tag.

If anyone is able to check and verify if homebrew vs. install script makes any difference, that would be helpful to know!

I get this error too. My configuration is below

K8s version: 1.7.7

helm version —host 10.245.112.10 —debug

[debug] SERVER: «10.245.112.10»

Client: &version.Version{SemVer:»v2.7.0″, GitCommit:»08c1144f5eb3e3b636d9775617287cc26e53dba4″, GitTreeState:»clean»}

[debug] context deadline exceeded

Error: cannot connect to Tiller

@veeresh1982 can you check the tiller pod’s status using kubectl?

Ok I did below to resolve : I am going to try @philchristensen solution too and update.

PREP on all master nodes:

On all master nodes following link: #1455, updated /etc/hosts

10.245.112.12 cbu-dev-k8s-node0

10.245.112.11 cbu-dev-k8s-node1

10.245.112.13 cbu-dev-k8s-node2

B)From my another remote ubuntu machine:10.67.141.140

Logged in as root:

export KUBECONFIG=/root/.kube/config

rm -rf /root/.helm

./helm init -c —debug

root@veeresh-spinnakerbuild:/home/ubuntu# helm ls —debug

[debug] Created tunnel using local port: ‘46624’

[debug] SERVER: «localhost:46624»

root@veeresh-spinnakerbuild:/home/ubuntu# helm version —debug

[debug] Created tunnel using local port: ‘44653’

[debug] SERVER: «localhost:44653»

Client: &version.Version{SemVer:»v2.7.0″, GitCommit:»08c1144f5eb3e3b636d9775617287cc26e53dba4″, GitTreeState:»clean»}

Server: &version.Version{SemVer:»v2.7.0″, GitCommit:»08c1144f5eb3e3b636d9775617287cc26e53dba4″, GitTreeState:»clean»}

Followed this link: https://hackernoon.com/the-missing-ci-cd-kubernetes-component-helm-package-manager-1fe002aac680: to install a sample hello-world app using helm.

cd /home/ubuntu/hello-world

helm install . —debug

root@veeresh-spinnakerbuild:/home/ubuntu/hello-world# helm list —debug

[debug] Created tunnel using local port: ‘35190’

[debug] SERVER: «localhost:35190»

NAME REVISION UPDATED STATUS CHART NAMESPACE

knobby-donkey 1 Mon Nov 13 21:12:42 2017 DEPLOYED hello-world-1.0.0 kube-system

Logged into cbu-dev master0 node

cbu-dev-k8s-master0 core # ./kubectl get po —all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system fluentd-23hk7 1/1 Running 0 4d

kube-system fluentd-5t7rh 1/1 Running 0 4d

kube-system fluentd-jr22g 1/1 Running 0 4d

kube-system hello-world-2541921211-vfzxt 1/1 Running 0 1m

NAMESPACE NAME READY STATUS RESTARTS AG

kube-system tiller-deploy-2318886584-1km4j 1/1 Running 0 41m

Tested @philchristensen solution. It works too and with this no need to update /etc/hosts file on the master side.

Just as another input to this: I was experiencing this issue intermittently when deploying. Whenever the context deadline error appeared, I could try again and usually by the second or third try it would work. We have a VPN directly into our cluster, so I tried adding the --host [tiller service].default.svc.cluster.local flag to helm upgrade and I haven’t ever gotten the error since.

I’m seeing same issue only when running helm in alpine container.

Hi — I’m seeing Error: context deadline exceeded error when trying helm install . This is only happening when running installer/helm on alpine container . It runs fine from linux box or mac . I tried to install grpc and excipliiitly define export HELM_HOST=127.0.0.1:44134 instead of localhost and kubeconfig . Passing —host not working as well . The version I’m using is v2.7 .

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Happy Helming!

Also, tiller pod and service is running fine .

[main] 2018/01/09 00:49:22 Starting Tiller v2.7.0 (tls=false)

[main] 2018/01/09 00:49:22 GRPC listening on :44134

[main] 2018/01/09 00:49:22 Probes listening on :44135

[main] 2018/01/09 00:49:22 Storage driver is ConfigMap

[main] 2018/01/09 00:49:22 Max history per release is 0

Any suggestion ! @munnerz I saw you had same issue!

I resolved the above issue by setting NO_PROXY in alpine container. It seems because go libraries don’t honor no_proxy . Example : NO_PROXY=localhost,127.0.0.1,169.254.169.254,localaddress,.localdomain.com,.<company_domain>.com .

@sefm Setting NO_PROXY environment variable in the shell of the Alpine Linux container doesn’t work for me. helm still fails with the same error. ‘kubectl get all’ works on the other hand.

I was running into this issue as well running Helm from Ubuntu 16.04LTS outside of a bare-metal cluster. I’m running Calico for networking and am exporting the svc cluster IPs so I can reach those directly. I wasn’t seeing any kind of network connection created at all. Turns out, I had to add a port to HELM_HOST, e.g. export HELM_HOST=10.10.254.5:44134.

Now I’m up and running.

$ helm version

Client: &version.Version{SemVer:"v2.7.2", GitCommit:"8478fb4fc723885b155c924d1c8c410b7a9444e6", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.7.2", GitCommit:"8478fb4fc723885b155c924d1c8c410b7a9444e6", GitTreeState:"clean"}

This didn’t work for me.

$ helm version --debug --host 10.10.254.5

[debug] SERVER: "10.10.254.5"

Client: &version.Version{SemVer:"v2.7.2", GitCommit:"8478fb4fc723885b155c924d1c8c410b7a9444e6", GitTreeState:"clean"}

[debug] context deadline exceeded

Error: cannot connect to Tiller

This works:

$ helm version --debug --host 10.10.254.5:44134

[debug] SERVER: "10.10.254.5:44134"

Client: &version.Version{SemVer:"v2.7.2", GitCommit:"8478fb4fc723885b155c924d1c8c410b7a9444e6", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.7.2", GitCommit:"8478fb4fc723885b155c924d1c8c410b7a9444e6", GitTreeState:"clean"}

Still seeing the original issue with v2.8.2 running kubernetes in docker-for-mac edge.

$ helm version

Client: &version.Version{SemVer:"v2.8.2", GitCommit:"a80231648a1473929271764b920a8e346f6de844", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.8.2", GitCommit:"a80231648a1473929271764b920a8e346f6de844", GitTreeState:"clean"}

$ helm get manifest curatrix-int-tests --debug

[debug] Created tunnel using local port: '54054'

[debug] SERVER: "127.0.0.1:54054"

Error: context deadline exceeded

Regular helm get, helm get values, and helm get hooks all work just fine.

Nothing exceptional in the tiller logs:

[main] 2018/03/17 15:52:02 Starting Tiller v2.8.2 (tls=false)

[main] 2018/03/17 15:52:02 GRPC listening on :44134

[main] 2018/03/17 15:52:02 Probes listening on :44135

[main] 2018/03/17 15:52:02 Storage driver is ConfigMap

[main] 2018/03/17 15:52:02 Max history per release is 0

Facing this with 2.8.2 as well.

root@ubuntu:/home# helm get manifest vigilant-tapir

Error: context deadline exceeded

root@ubuntu:/home# helm version

Client: &version.Version{SemVer:"v2.8.2", GitCommit:"a80231648a1473929271764b920a8e346f6de844", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.8.2", GitCommit:"a80231648a1473929271764b920a8e346f6de844", GitTreeState:"clean"}

No related logs within tiller container.

cc @bacongobbler Thanks!

Same issue here, with helm 2.8.2 on Azure AKS (Kubernetes 1.8.7) both using a brew installation and «from script»

#3715 is the fix for this. Could one of you please try that branch and see if it works for you? Thanks!

@bacongobbler awesome! The patch works for me!

root@ubuntu:/home/cloud/helmtmp# ./helm version

Client: &version.Version{SemVer:"v2.8+unreleased", GitCommit:"87c64e7987f348c0a99a4f6e12d6ec187723b7cc", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.8+unreleased", GitCommit:"87c64e7987f348c0a99a4f6e12d6ec187723b7cc", GitTreeState:"clean"}

root@ubuntu:/home/cloud/helmtmp# ./helm install stable/linkerd

NAME: existing-bison

LAST DEPLOYED: Tue Mar 20 11:15:34 2018

.......

root@ubuntu:/home/cloud/helmtmp# ./helm get manifest existing-bison

---

# Source: linkerd/templates/config.yaml

apiVersion: v1

kind: ConfigMap

metadata:

......

clux

mentioned this issue

Apr 12, 2018

Reproduce:

/scripts/helm_mac get manifest cf.20180507.130920 --debug

[debug] Created tunnel using local port: '58010'

[debug] SERVER: "127.0.0.1:58010"

Error: context deadline exceeded

IBMMasterMBP:bcf-deployer bjxzi$ ./scripts/helm_mac version --debug

[debug] Created tunnel using local port: '58046'

[debug] SERVER: "127.0.0.1:58046"

Client: &version.Version{SemVer:"v2.8.2", GitCommit:"a80231648a1473929271764b920a8e346f6de844", GitTreeState:"clean"}

Kubernetes: &version.Info{Major:"1", Minor:"8+", GitVersion:"v1.8.11-2+fa6873d3e386d7", GitCommit:"fa6873d3e386d7ead42923b24aea3b76e74395a3", GitTreeState:"clean", BuildDate:"2018-04-17T08:10:40Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

Server: &version.Version{SemVer:"v2.8.2", GitCommit:"a80231648a1473929271764b920a8e346f6de844", GitTreeState:"clean"}

@edwardstudy This was fixed in helm version 2.9.0. You need to upgrade.

@rabbitfang Hi, I upgraded helm but got same error:

bjxzi$ ./scripts/helm_mac get manifest cf.20180516.143139 --debug

[debug] Created tunnel using local port: '49509'

[debug] SERVER: "127.0.0.1:49509"

Error: context deadline exceeded

bjxzi$ helm version

Client: &version.Version{SemVer:"v2.9.1", GitCommit:"20adb27c7c5868466912eebdf6664e7390ebe710", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.9.1", GitCommit:"20adb27c7c5868466912eebdf6664e7390ebe710", GitTreeState:"clean"}

Is it possible ./scripts/helm_mac is using a different client? You call both that script and helm directly in that example.

Yes. ./scripts/helm_mac is 2.8.2 and helm command is 2.9.1. I use two versions in our script.

That makes sense then… As it’s been mentioned a few times in this thread now, the Helm 2.8.2 client does not have this fix. You need to use the 2.9 client if you want to use helm get manifest. The fix was purely client-side.

@bacongobbler Sorry, I missed that get manifest command was using v2.8.2.

Время на прочтение

5 мин

Количество просмотров 9.4K

Привет, меня зовут Иван, я занимаюсь бэкенд-разработкой в Ozon — пишу микросервисы для личного кабинета продавца. Сегодня я расскажу небольшую историю проблемы, вызванную неправильной настройкой пула соединений: что случилось, как мы это обнаружили и как исправлять такие проблемы в микросервисах на Go.

Проблема скрывалась внутри нашего API Gateway. Это сервис, который реализует паттерн “Фасад” и предоставляет единственное торчащее наружу окно к микросервисам.

В упрощенном виде его работу можно представить так:

-

Проверить аутентификацию и авторизацию с помощью HTTP-запроса в сервис аутентификации

-

Спроксировать запрос в нужный сервис, который определяем по пути и методу запроса пользователя

Конец декабря – время роста нагрузок и числа ошибок

Настал конец декабря. Вместе с ним к нам в поддержку стали приходить вот такие обращения:

При работе в ЛК возникают постоянные ошибки системные по 10-20 раз на дню и больше. Просьба исправить и наладить работу площадки.

Ошибки возникали на стороне API Gateway. Мы полезли в логи за подробностями и увидели ошибки, похожие на таймауты обращения к сервису аутентификацию:

{err_type: context.deadlineExceededError, err: context deadline exceeded}

{err_type: *errors.errorString, err: context canceled}Трейсы в Jaeger показали ровно такую же картину — мы не дожидались ответа от сервиса аутентификации за 2 секунды. Поэтому между нами и разработчиками сервиса аутентификации произошёл примерно такой диалог:

— Ребята, кажется, вы таймаутите. Вот трейс, на котором видно, что мы не дождались от вас ответа за 2 секунды.

— Ничего подобного, у нас все норм — мы за 200 миллисекунд отвечаем в 99% запросов. А вот вы по какой-то причине часто преждевременно обрываете соединение.

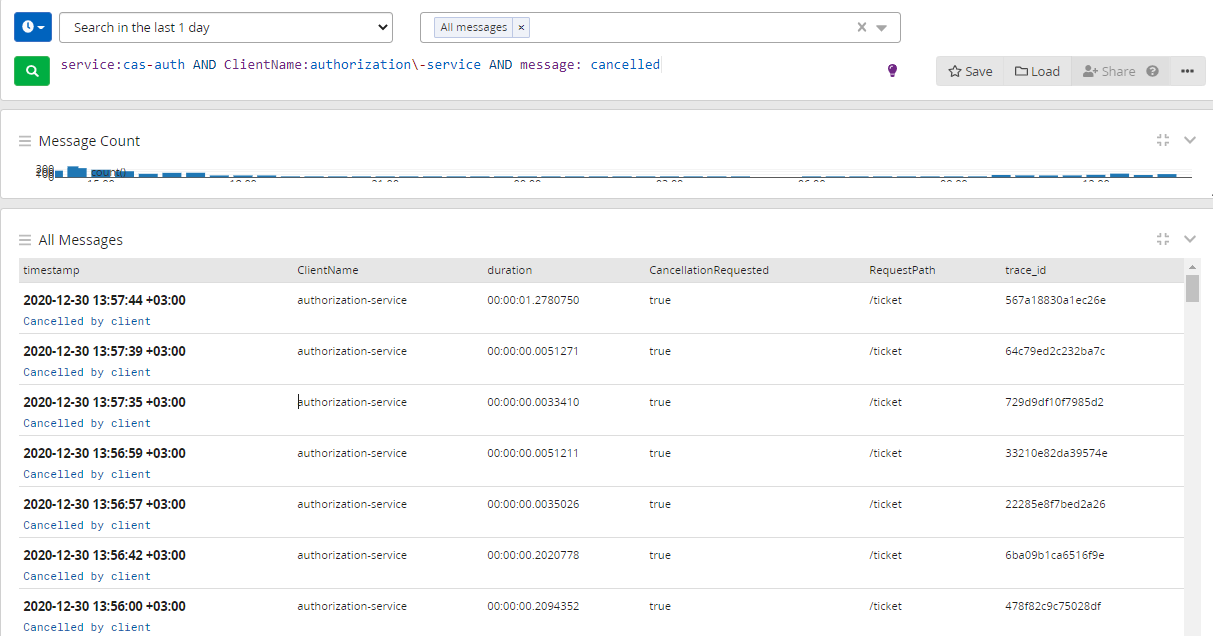

В качестве подтверждения своей правоты, разработчики сервиса аутентификации скинули нам скриншот логов. На нём видно много ошибок об отмене запроса со стороны вызывающей стороны:

Итого, мы имеем:

-

Используемый нами сервис аутентификации стабильно отрабатывает за 200 миллисекунд.

-

Многие наши обращения к этому сервису таймаутят за 2 секунды.

Причина проблемы: дефолтные настройки в Go

В это время один из авторов нашего API Gateway отметил, что очень давно заметил аномалию: сервис открывает неожиданно много соединений к удаленным портам. При запуске команды из-под контейнера видно:

$ ss -natp state time-wait | awk '{print $4}' | sort -nr | uniq -c | sort -nr | head

1053 10.20.49.117:80

1030 10.20.49.92:80

1016 10.20.49.91:80

1014 10.20.54.129:80

1013 10.20.53.213:80

1008 10.20.53.173:80

969 10.20.53.172:80Эта команда показывает количество TCP-сокетов в состоянии TIME_WAIT до разных удалённых портов. Если коротко, то состояние TIME_WAIT — это де-факто закрытое клиентом соединение. Linux по возможности предотвращает повторное использование этих пар на протяжении 60 секунд, чтобы защититься от того, что старые пакеты помешают вновь установленному TCP-соединению.

Но для нас важно другое. Само существование TCP-соединения означает, что соединение установилось и закрылось. Если такая ситуация происходит массово, то мы имеем дело с накладными расходами на DNS-резолвинг и установку соединения. В результате этого время HTTP-запроса может увеличиваться. Избежать эту проблему помогают пулы соединений. В Go для этой цели используется абстракция http.Transport.

Здесь мы вплотную приближаемся к истокам проблемы. Мы для всех клиентских запросов использовали http.DefaultTransport. Он обладает следующими параметрами:

var DefaultTransport RoundTripper = &Transport{

Proxy: ProxyFromEnvironment,

DialContext: (&net.Dialer{

Timeout: 30 * time.Second,

KeepAlive: 30 * time.Second,

}).DialContext,

ForceAttemptHTTP2: true,

MaxIdleConns: 100,

IdleConnTimeout: 90 * time.Second,

TLSHandshakeTimeout: 10 * time.Second,

ExpectContinueTimeout: 1 * time.Second,

}Среди перечисленных выше параметров к настройке пула соединений имеют отношения два:

-

MaxIdleConns— число соединений, которое разрешается иметь в состоянии Idle (т.е. открытых TCP-соединений, которые в данный момент не используются); -

IdleConnTimeout— время, через которое закрываются такие неактивные соединения.

Однако в DefaultTransport совершенно не указан другой ключевой параметр — MaxIdleConnsPerHost. Он отвечает за то, сколько неактивных TCP-соединений допускается устанавливать на один хост.

При этом если MaxIdleConnsPerHost не указан, тогда используется значение по умолчанию:

const DefaultMaxIdleConnsPerHost = 2Поскольку мы использовали именно http.DefaultTransport для всех запросов, мы получили следующую проблему.

Представим, что нам одновременно понадобилось установить 10 соединений до сервиса аутентификации. Тогда хотя бы для 8 из них будут открыты и вскоре тут же закрыты TCP-соединения, из-за ограничения MaxIdleConnsPerHost. Если такая ситуация будет повторяться часто, у нас будет больше накладных расходов на один HTTP-запрос, поскольку для него понадобится новое соединение. Из-за этого вероятность таймаутов возрастает.

Решение: отдельный транспорт с особенными настройками

Чтобы решить проблему, мы сделали следующее:

-

Выделили отдельный транспорт под сервисы аутентификации и авторизации, чтобы транспорт под сервис аутентификации не вытеснял остальные сервисы.

-

Утилизировали выделенный пул на полную — сделали так, чтобы значение

MaxIdleConnsPerHostсоответствовало значениюMaxIdleConns:

func createOneHostTransport() *http.Transport {

result := http.DefaultTransport.(*http.Transport).Clone()

result.MaxIdleConnsPerHost = result.MaxIdleConns

return result

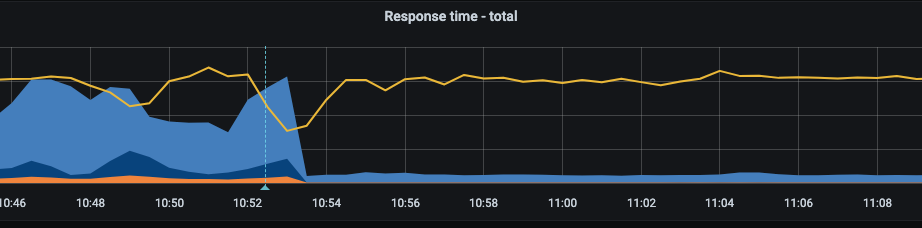

}Тут видно значительное уменьшение 0.99-квантиля по времени обращения графиков (голубой цвет) — с 2-3 секунд до менее 300 миллисекунд. Должен признать, даже после этого мы изредка видели таймауты при обращении к сервису аутентификации. Но теперь мы хотя бы видели эти же таймауты на графиках другого сервиса.

Но почему в Go такие настройки по умолчанию?

Возможно, у вас сейчас возник вопрос: зачем делать такие настройки, которые потом приходится исправлять? Неужели разработчики языка Go и библиотек к нему не подумали о том, как это будет использоваться на практике?

Я считаю так: настройки по умолчанию имеют смысл в том случае, когда мы имеем дело с множеством хостов и не очень большим числом запросов. В таком случае значение MaxIdleConnsPerHost предохраняет нас от того, чтобы в ситуации всплеска запросов один из хостов исчерпал оставшийся ресурс свободных соединений и не позволил другому сервису создать хотя бы одно долгоживущее соединение.

Если бы приложения могли говорить…

Чтобы лучше понять предназначение параметра MaxIdleConnsPerHost, представим, что компоненты системы научились говорить друг с другом. Тогда если бы мы выставили значение MaxIdleConnsPerHost равным MaxIdleConns, между нашим приложением и пулом соединений мог бы произойти такой диалог:

Приложение: http.Transport, привет! Нам тут сотня пользователей пришла одновременно и им всем вдруг срочно понадобилось посмотреть инфу о своём профиле. Установи, пожалуйста, сотню соединений к

user-servicehttp.Transport: Конечно, вот – получи сотню установленных соединений под запросы! Но я их не буду ещё полторы минуты закрывать, вдруг пригодятся .

Приложение: Всё сработало, спасибо! Только вот теперь им всем вдруг захотелось посмотреть информацию о товаре. Пожалуйста, установи соединение с

product-service.http.Transport: Да, не вопрос – держи. Но только я их у себя в пуле соединений хранить не буду, поскольку он уже полностью забит соединениями до

user-service, которые тебе больше не нужны.Приложение: (┛ಠ_ಠ)┛彡┻━┻

Разбираемся с непонятными таймаутами в Go: чеклист

Если вдруг вы сталкиваетесь с непонятными таймаутами, попробуйте следующее:

-

Проверьте метрики по скорости выполнения запросов к сторонним сервисам по HTTP. Если таких метрик нет, заведите – пригодятся.

-

Если видите расхождения в таймаутах клиента и сервера, проверьте количество соединений TIME_WAIT.

-

Если вы обнаружили много соединений в состоянии TIME_WAIT, это с высокой вероятностью означает, что пул соединений настроен неверно. Обратите внимание на то, что настройки по умолчанию в Go не очень хорошо подходят под большое количество запросов к ограниченному набору сервисов.

-

Для хоста с неоправданно большим числом запросов к нему рассмотрите вариант заведения отдельного транспорта.

Что ещё почитать по теме

-

Gotchas in the Go Network Packages Defaults: какие ещё подводные камни есть в настройках сети в Golang;

-

Coping with the TCP TIME-WAIT state on busy Linux servers: подробнее о TIME_WAIT и зачем оно нужно;

-

демо на Github: наглядно об описанной проблеме в миниатюре